siggraph

Emerging Technologies Presentations

Full Conference Pass

Full Conference 1-Day Pass

Basic Conference Pass

Experience Pass

Exhibitor Pass

Date/Time:

28 - 29 November 2017, 10:00am - 06:00pm

30 November 2017, 10:00am - 04:00pm

Venue: BHIRAJ Hall 3 - Experience Hall (Emerging Technologies Exhibits)

Location: Bangkok Int'l Trade & Exhibition Centre (BITEC)

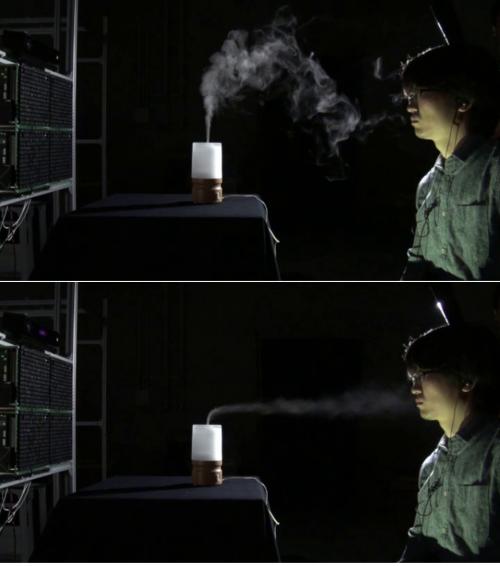

Interactive Midair Odor Control via Ultrasound-Driven Air Flow

Booth no: et_0053

Summary: We propose a system for controlling aerial odor with ultrasound-driven straight air flows. The system consists of ultrasound transducers combined into a phased array, so that the location and orientation of the resulting flow can be steered arbitrarily.
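As a rough illustration of how a phased array can be steered, the sketch below computes a drive phase for each transducer so that all emitted waves arrive in phase at a chosen focal point. This is generic phased-array focusing, not necessarily the authors' control method; the 40 kHz frequency, the speed of sound, the array geometry, and the helper names `grid` and `focus_phases` are assumptions for illustration.

```python
import math

# Assumed constants (not given in the summary).
SPEED_OF_SOUND = 346.0   # m/s, air at roughly 25 °C
FREQUENCY = 40_000.0     # Hz, a common airborne-ultrasound frequency
WAVELENGTH = SPEED_OF_SOUND / FREQUENCY
K = 2 * math.pi / WAVELENGTH  # wavenumber (rad/m)


def grid(nx, ny, pitch):
    """Hypothetical helper: centers of an nx-by-ny planar array in the z = 0 plane."""
    return [((i - (nx - 1) / 2) * pitch, (j - (ny - 1) / 2) * pitch, 0.0)
            for j in range(ny) for i in range(nx)]


def focus_phases(transducers, focus):
    """Drive phase (radians, in [0, 2*pi)) for each transducer.

    With drive signals sin(w*t + phi), a wave that travels distance d arrives
    with phase phi - K*d. Choosing phi = K*d (mod 2*pi) makes every wave
    arrive at `focus` with the same phase, so they add constructively there.
    """
    return [(K * math.dist(pos, focus)) % (2 * math.pi) for pos in transducers]


if __name__ == "__main__":
    array = grid(4, 4, 0.01)                       # 16 elements, 10 mm pitch (assumed)
    phases = focus_phases(array, (0.0, 0.0, 0.2))  # focus 20 cm above the center
    print([round(p, 3) for p in phases[:4]])
```

Changing `focus` re-targets the array purely in software, which is the kind of steerability the summary describes; controlling the flow's orientation would need more than this single-focus sketch.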

CoVAR: A Collaborative Virtual and Augmented Reality System for Remote Collaboration

Booth no: et_0011

Summary: We present CoVAR, a novel remote collaborative system that uses Augmented Reality (AR) and Virtual Reality (VR) technology to support natural communication cues. CoVAR enables an AR user to capture and share their local environment with a remote VR user so that they can collaborate on spatial tasks in a shared space. For our demonstration, the environment is captured in advance with a Microsoft HoloLens, which takes no more than 15 minutes, to reduce the computational load and improve the overall user experience. CoVAR supports various interaction methods to enrich collaboration, including hand gestures, head gaze, and eye gaze input, and provides various collaboration cues to improve awareness of the remote collaborator's status. We also demonstrate collaborative enhancements such as scaling the VR user's body and snapping the VR user to the AR user's perspective. CoVAR thus shows how AR and VR can be combined with natural communication cues to create new types of collaboration.

Demonstration of the Unphotogenic Light: Protection from Secret Photography by Small Cameras

Booth no: et_0002

Summary: We present a new method that uses high-speed projection to protect projected content from secret photography. Our aim is a projection method that lets people view content with their own eyes but prevents it from being recorded with camera devices.

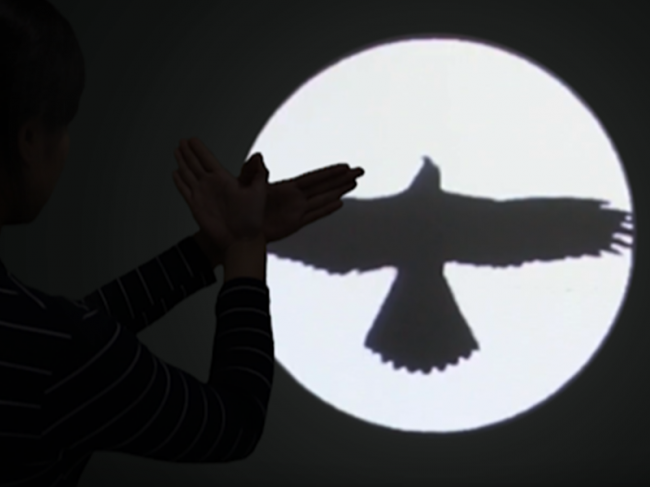

Enhanced Shadow Play with Neural Networks

Booth no: et_0026

Summary: We present a shadow play system that displays, in real time, extrapolated motion sequences of the still shadow images cast by players. The core of our system is a sequential version of the recently developed generative adversarial networks (GANs), with a stack of LSTMs as the sequence controller. We train the model on preprocessed image sequences of diverse shadow figures' motions and use it in our enhanced shadow play system to animate the shadows automatically, as if they were alive.

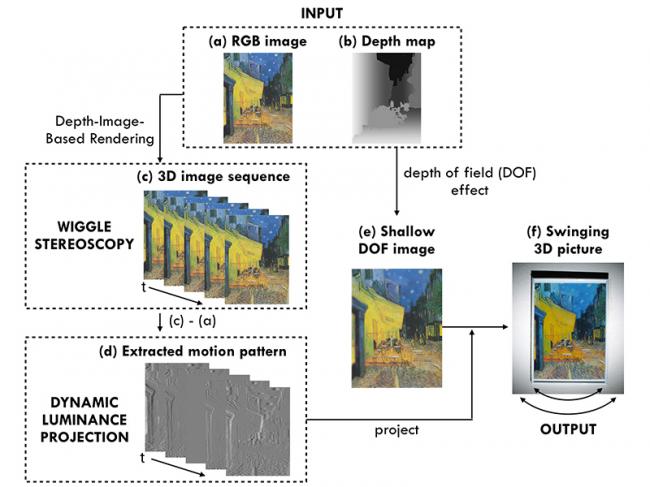

Swinging 3D Lamps: A Projection Technique to Create 3D Illusions on a Static 2D Image

Booth no: et_0043

Summary: "Swinging 3D lamp" is a multiuser, naked-eye three-dimensional (3D) display technique that uses a common projector and printed media. It creates 3D optical illusions of motion parallax by superimposing dynamic luminance patterns on a static two-dimensional image in a real environment. The basic idea is to combine "wiggle stereoscopy," a method of creating 3D images by exploiting motion parallax, with "dynamic luminance projection," a projection technique that makes static images appear dynamic. However, simply combining these methods does not always work well; we overcame this challenge by adding a depth-of-field effect to the original image. The technique is useful for simple, eye-catching 3D displays in public spaces because depth information can be presented on ordinary printed images and multiple people can perceive the depth without special glasses or equipment.

Magic Table: Deformable Props Using Visuo Haptic Redirection

Booth no: et_0008

Summary: We developed a novel virtual reality (VR) system that can transform objects using visuo-haptic redirected walking techniques. By using rotational manipulation and body warping techniques, we can change the shape of objects. In the proposed system, a square table can be transformed into an equilateral triangular table or a regular pentagonal table. Users can confirm the change in shape by touching the edge of the table while walking around it.

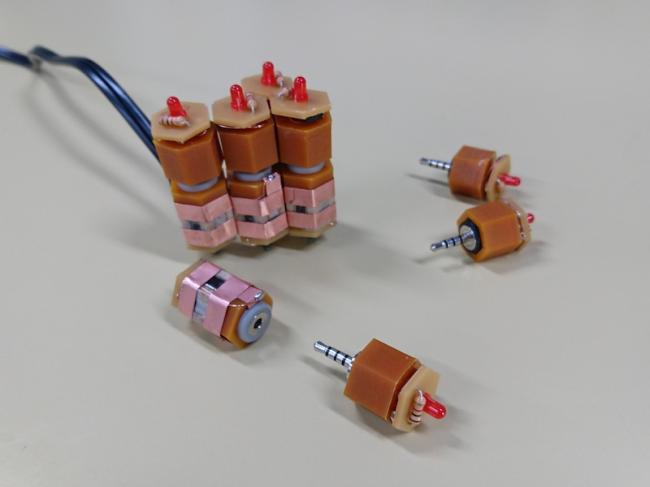

OptRod: Operating Multiple Various Actuators Simultaneously by Projected Images

Booth no: et_0037

Summary: We propose OptRod, a method for operating multiple actuators of various types simultaneously using images projected from a PC. The PC generates images as control signals, and a projector projects them onto the bottoms of the OptRods. Each OptRod receives the light and converts its brightness into a control signal for the attached actuator. By using multiple OptRods, the PC can operate many actuators simultaneously without any signal lines. Moreover, various surface shapes can easily be arranged by combining multiple OptRods, and OptRod supports various functions by replacing the actuator unit connected to it. We implemented a prototype of OptRod and evaluated the accuracy of communication by projected images. The results show that the system can transmit information in 30 levels with 99.6% accuracy.
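One simple way to picture projector-to-OptRod signalling is to map each control level to a gray value and have the rod quantize the brightness it measures back to the nearest level. The sketch below assumes a linear mapping onto 8-bit gray values and reuses the 30 levels reported above; the mapping and the function names `encode_level` and `decode_brightness` are hypothetical, not the prototype's actual scheme.

```python
LEVELS = 30      # number of levels reported in the summary
MAX_GRAY = 255   # assumed 8-bit projector output


def encode_level(level, levels=LEVELS):
    """Gray value the PC would project onto a rod to request `level`."""
    if not 0 <= level < levels:
        raise ValueError(f"level must be in [0, {levels - 1}]")
    return round(level * MAX_GRAY / (levels - 1))


def decode_brightness(brightness, levels=LEVELS):
    """Nearest control level for a measured brightness on the same 0..255 scale."""
    level = round(brightness * (levels - 1) / MAX_GRAY)
    return min(max(level, 0), levels - 1)  # clamp out-of-range readings


if __name__ == "__main__":
    # A projected frame reduces to one gray value per rod; here, three rods.
    frame = [encode_level(lv) for lv in (0, 12, 29)]
    print(frame, [decode_brightness(g) for g in frame])
```

With 30 levels, adjacent levels are about 8.8 gray values apart, so in this sketch a reading that is off by 3 gray values still decodes to the intended level.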

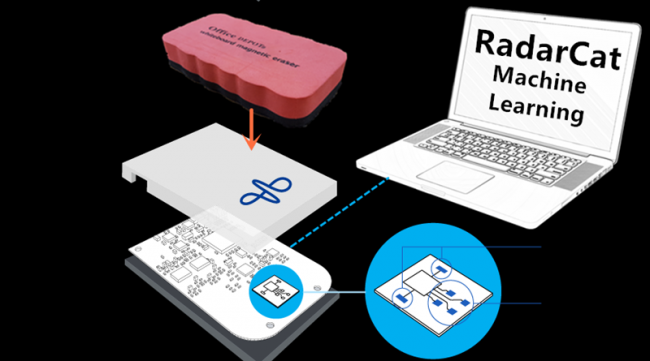

Tangible UI by Object and Material Classification with Radar

Booth no: et_0019

Summary: Radar signals can penetrate, scatter from, be absorbed by, and reflect off proximate objects; ground-penetrating and aerial radar systems, for instance, are well established. We describe a highly accurate system that combines a monostatic radar (Google Soli) with supervised machine learning to support UIs based on object and material classification. Building on RadarCat techniques, we explore the development of tangible user interfaces that require no modification to the objects and no complex infrastructure. This affords new forms of interaction with digital devices and proximate objects.

ThermoReality: Thermally Enriched Head Mounted Displays for Virtual Reality

Booth no: et_0013

Summary: We present "ThermoReality", a system that provides co-located thermal haptic feedback with head mounted displays. In ThermoReality, we integrate five thermal modules (consisting of Peltier elements) into the facial interface of a commercially available head mounted display. Each module can provide active heating or cooling sensations through an independently controlled thermal controller. ThermoReality can thus present visual images synchronized with co-located thermal haptic feedback on the user's face. With this system, we can create thermal haptic cues such as immersive cues, in which all the modules are activated; moving cues, in which the modules are activated one after another to suit the visual content; or any mixed cues suited to the displayed content. In this demonstration, we recreate activities such as opening a cold fridge, cooking at a stove, and standing in front of an oscillating fan, with various thermal cues to enhance the user's sense of immersion.
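The immersive and moving cue types can be expressed as per-module commands over time. The sketch below assumes the five modules are indexed left to right across the face and that each accepts a command of heat (+1), off (0), or cool (-1); the moving cue sweeps back and forth, roughly like the oscillating fan. The module layout, command encoding, and function names are assumptions, not ThermoReality's actual controller interface.

```python
HEAT, OFF, COOL = 1, 0, -1
NUM_MODULES = 5  # five thermal modules, as in the summary


def immersive_cue(mode, n=NUM_MODULES):
    """All modules heat (or cool) together."""
    return [mode] * n


def moving_cue(mode, t, step_s, n=NUM_MODULES):
    """One module active at a time, sweeping 0 -> n-1 -> 0 and advancing
    to the next module every `step_s` seconds (a ping-pong sweep)."""
    if n == 1:
        active = 0
    else:
        period = 2 * (n - 1)                 # steps in one full back-and-forth
        k = int(t // step_s) % period
        active = k if k < n else period - k  # reflect on the way back
    return [mode if i == active else OFF for i in range(n)]


if __name__ == "__main__":
    for t in (0.0, 0.5, 1.0, 1.5, 2.0, 2.5):
        print(t, moving_cue(COOL, t, step_s=0.5))
```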

HapBelt: Haptic Display for Presenting Vibrotactile and Force Sense using Belt Winding Mechanism

Booth no: et_0022

Summary: We developed a haptic display that drives the skin via a lightweight belt wound by two DC motors. AC signals sent to the motors vibrate the belt, and the vibration is presented directly to the skin in contact with the belt; DC signals sent to the motors wind up the belt, presenting a force sense by deforming the skin. By combining these two driving modes, the system can present high-fidelity vibration and directional force sensation simultaneously with a compact, low-energy setup that can be extended to various parts of the body.
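Because the two driving modes differ only in frequency content, one plausible way to command a single motor is to add a DC offset (winding, hence force) to an AC sinusoid (vibration). The sketch below generates such a command; the normalized voltage range, sample rate, frequencies, and function names are assumptions, and how the real system splits commands between its two motors is not covered.

```python
import math


def motor_command(t, dc_level, ac_amplitude, ac_freq_hz):
    """Normalized command in [-1, 1] for one belt motor at time t (seconds).

    dc_level:     steady part that winds the belt and deforms the skin (force sense)
    ac_amplitude: oscillating part that vibrates the belt (vibrotactile sense)
    """
    v = dc_level + ac_amplitude * math.sin(2 * math.pi * ac_freq_hz * t)
    return max(-1.0, min(1.0, v))  # clip to the assumed driver range


def command_samples(duration_s, rate_hz, **params):
    """Sample motor_command at rate_hz for duration_s seconds."""
    n = round(duration_s * rate_hz)
    return [motor_command(i / rate_hz, **params) for i in range(n)]


if __name__ == "__main__":
    # 200 Hz vibration riding on a moderate winding force (values assumed).
    cmds = command_samples(0.01, 8000, dc_level=0.3, ac_amplitude=0.2, ac_freq_hz=200)
    print(len(cmds), round(sum(cmds) / len(cmds), 3))
```

Averaged over whole vibration periods, the command reduces to its DC level, which is why the force and vibration channels can share one motor in this sketch.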

3D Communication System using Slit Light Field

Booth no: et_0006

Summary: By integrating a newly developed light field camera with a slit-based light field display, we developed a 3D communication system that reduces the information required to reproduce the light rays of a remote space. The light field camera consists of multiple CMOS cameras, each paired with one light array of the slit-based light field 3D display. The image captured by each camera is sampled and divided into vertical lines, and each line is presented by the corresponding light array. The line being displayed changes according to the direction of the light array's rotating slit, so that all the divided lines are reproduced correctly. This procedure reproduces the light rays that entered each camera as if they were coming from the actual objects. The cameras are installed in a row at the same interval as the light arrays. By combining the slit-based light field 3D display with the light field camera built from an array of CMOS cameras, it is possible to reproduce the light rays of a distant space.
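The per-line selection step can be sketched as a mapping from the slit's current direction to a column of the paired camera image. The code below assumes an ideal pinhole camera whose horizontal field of view matches the slit's angular range and ignores any mirroring between capture and display; `column_for_slit_angle` and its parameters are hypothetical.

```python
import math


def column_for_slit_angle(theta, num_columns, half_fov):
    """Index of the vertical image line to show when the slit points at `theta`.

    theta:    slit direction in radians, 0 = straight ahead
    half_fov: half of the camera's horizontal field of view, in radians
    Returns None when the slit points outside the captured field of view.
    """
    if abs(theta) > half_fov:
        return None
    # Pinhole model: horizontal image position is proportional to tan(theta).
    u = 0.5 + 0.5 * math.tan(theta) / math.tan(half_fov)  # 0 = left edge, 1 = right edge
    return min(max(int(u * num_columns), 0), num_columns - 1)


if __name__ == "__main__":
    half_fov = math.radians(30)
    for deg in (-30, -15, 0, 15, 30):
        print(deg, column_for_slit_angle(math.radians(deg), 640, half_fov))
```

As the slit rotates through its range, every column is visited once per sweep, matching the idea that all divided lines are eventually reproduced.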

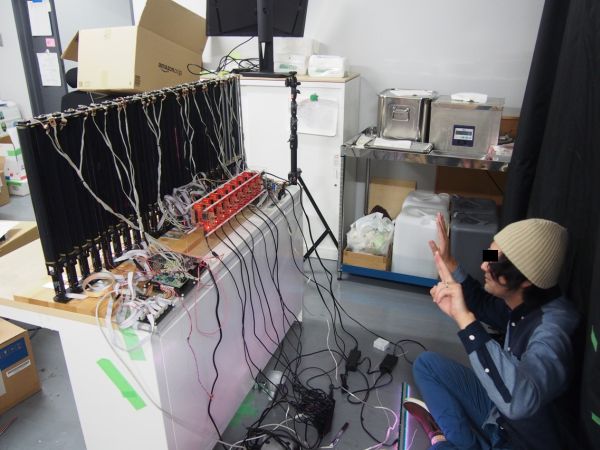

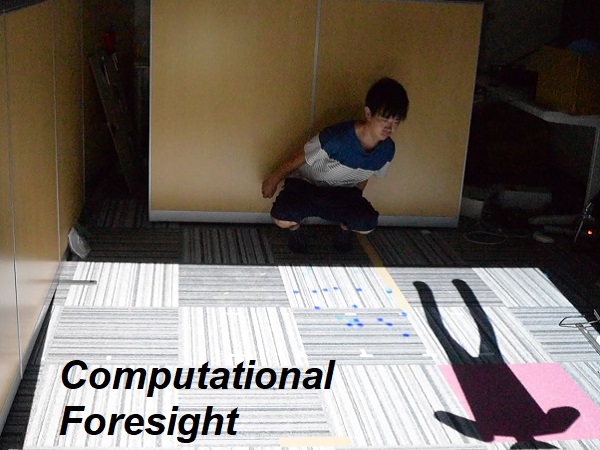

Computational Foresight: Realtime Forecast of Human Body Motion

Booth no: et_0044

Summary: In this paper, we propose a machine learning-based system named "Computational Foresight" that can forecast body motion 0.5 seconds in advance. The system detects 25 human body joints using a Kinect V2 and forecasts their motion in real time with a six-layer neural network. This can be used to anticipate human gesture inputs, give proper instruction in sports actions, prevent elderly people from falling, and so on.
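Forecasting 0.5 seconds ahead can be framed as supervised learning on pairs of (recent joint history, joint positions 0.5 s later). The sketch below builds such pairs from a sequence of 25-joint skeletons; the 30 frames-per-second rate, the history length, and the flat feature layout are assumptions, and the six-layer network itself is not shown.

```python
FPS = 30                    # assumed skeleton frame rate
HORIZON = round(0.5 * FPS)  # frames ahead corresponding to 0.5 s
NUM_JOINTS = 25             # joints tracked per frame, as in the summary


def flatten(frame):
    """[(x, y, z), ...] for 25 joints -> flat list of 75 numbers."""
    return [c for joint in frame for c in joint]


def make_training_pairs(frames, history):
    """Pair each window of `history` consecutive frames with the frame
    HORIZON steps after the window's last frame."""
    pairs = []
    for t in range(history - 1, len(frames) - HORIZON):
        x = [c for f in frames[t - history + 1 : t + 1] for c in flatten(f)]
        y = flatten(frames[t + HORIZON])
        pairs.append((x, y))
    return pairs
```

With a history of 5 frames, each input has 5 × 75 = 375 values and each target has 75, which would set the input and output sizes of a network trained on these pairs.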

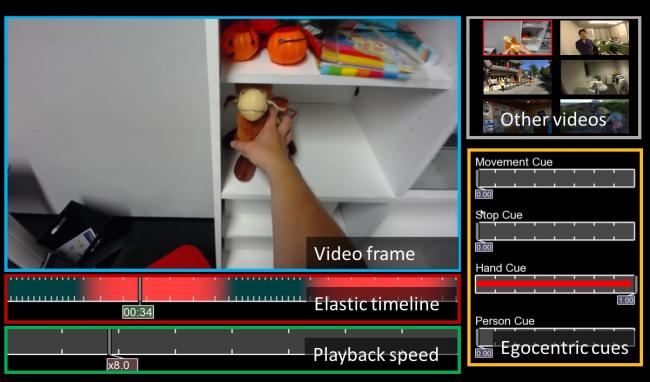

EgoScanning: Quickly Scanning First-Person Videos with Egocentric Elastic Timelines

Booth no: et_0045

Summary: This work presents EgoScanning, a novel video fast-forwarding interface that helps users find important events in lengthy first-person videos continuously recorded with wearable cameras. The interface features an elastic timeline that adaptively changes playback speed and, using computer-vision techniques, emphasizes egocentric cues specific to first-person videos, such as hand manipulations, moving, and conversations with people. The interface also allows users to specify which of these cues are relevant to the events they are interested in.
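A minimal version of the elastic-timeline idea slows playback on segments where a user-selected cue was detected and fast-forwards elsewhere. The sketch below assumes cue detection has already produced a set of cue labels per segment; the two fixed speeds and the label names are hypothetical simplifications of the adaptive speeds described above.

```python
CUES = {"hand_manipulation", "moving", "conversation"}  # hypothetical labels


def playback_speeds(segment_cues, relevant, slow=1.0, fast=8.0):
    """Speed multiplier per segment: `slow` if any relevant cue was detected, else `fast`."""
    relevant = set(relevant)
    unknown = relevant - CUES
    if unknown:
        raise ValueError(f"unknown cues: {unknown}")
    return [slow if cues & relevant else fast for cues in segment_cues]


def viewing_time(segment_seconds, speeds):
    """Total time needed to watch all segments at the given speeds."""
    return sum(segment_seconds / s for s in speeds)


if __name__ == "__main__":
    detected = [set(), {"hand_manipulation"}, {"moving"}, {"conversation", "moving"}]
    speeds = playback_speeds(detected, {"hand_manipulation", "conversation"})
    print(speeds, viewing_time(8.0, speeds))
```

Changing the `relevant` set re-times the same video without re-running detection, which mirrors the interface letting users choose which cues matter.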

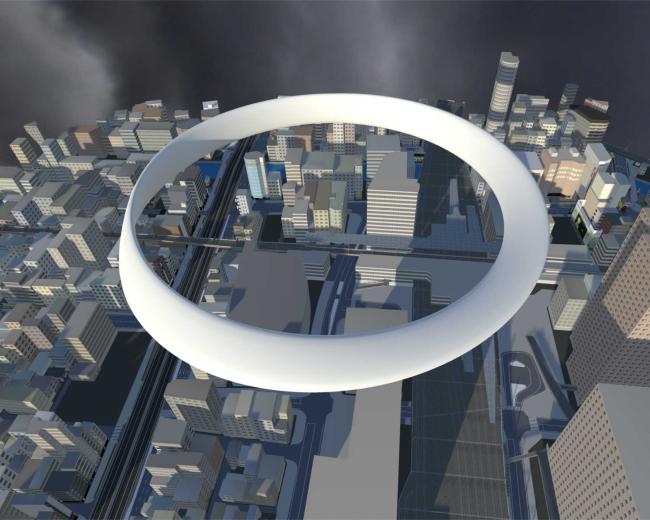

Mobius Walker: Pitch and Roll Redirected Walking

Booth no: et_0049

Summary: Redirected walking (RDW) techniques enable users to walk around infinite virtual environments (VEs) within a finite physical space. Previous studies on RDW have mostly discussed manipulations in the yaw direction; few have tackled redirection in the pitch and roll directions. We propose a novel VR system that realizes pitch and roll redirection and allows users to experience walking on a 3D model of a Möbius strip in the VE.

Telewheelchair: A Demonstration of the Intelligent Electric Wheelchair System towards Human-Machine

Booth no: et_0059

Summary: We propose the Intelligent Electric Wheelchair system, which provides care by combining telepresence and machine intelligence.

SharedSphere: MR Collaboration through Shared Live Panorama

Booth no: et_0036

Summary: SharedSphere is a Mixed Reality remote collaboration system that not only allows sharing of a live-captured immersive panorama but also enriches collaboration by sharing non-verbal communication cues such as view awareness and gestures.