siggraph

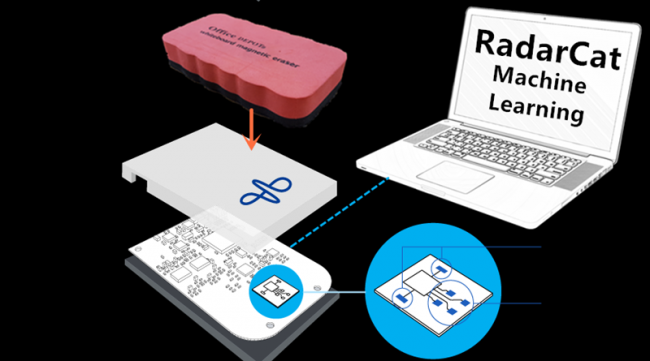

Tangible UI by Object and Material Classification with Radar

Booth no: et_0019

Full Conference Pass

Full Conference 1-Day Pass

Basic Conference Pass

Experience Pass

Exhibitor Pass

Date/Time:

28 - 29 November 2017, 10:00am - 06:00pm

30 November 2017, 10:00am - 04:00pm

Venue: BHIRAJ Hall 3 - Experience Hall (Emerging Technologies Exhibits)

Location: Bangkok Int'l Trade & Exhibition Centre (BITEC)

Summary: Radar signals penetrate, scatter, absorb and reflect energy into proximate objects; indeed, ground-penetrating and aerial radar systems are well established. We describe a highly accurate system that combines a monostatic radar (Google Soli) with supervised machine learning to support UIs based on object and material classification. Building on RadarCat techniques, we explore the development of tangible user interfaces that require neither modification of the objects nor complex infrastructure. This affords new forms of interaction with digital devices and proximate objects.
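
As a rough illustration of the supervised classification idea in the summary, the sketch below trains a nearest-centroid classifier on synthetic feature vectors and maps the predicted material to a UI action. Everything in it is an assumption made for illustration: the feature vectors are random numbers rather than Soli radar signals, the material levels and the `ACTIONS` mapping are invented, and nearest-centroid is a simple stand-in, not necessarily the classifier used in the exhibited system.

```python
import math
import random


def synth_signal(level, n_channels=8, noise=0.05, rng=random):
    """Hypothetical stand-in for a radar feature vector (one value per channel)."""
    return [level + rng.gauss(0.0, noise) for _ in range(n_channels)]


def train_centroids(samples):
    """Average the labelled training vectors into one centroid per label."""
    centroids = {}
    for label, vectors in samples.items():
        count = len(vectors)
        centroids[label] = [sum(column) / count for column in zip(*vectors)]
    return centroids


def classify(centroids, vector):
    """Return the label whose centroid is nearest in Euclidean distance."""
    return min(centroids, key=lambda label: math.dist(centroids[label], vector))


# Invented per-material signal levels and UI actions (assumptions, not measured data).
MATERIALS = {"glass": 0.2, "wood": 0.5, "steel": 0.9}
ACTIONS = {"glass": "show drink menu", "wood": "open notes", "steel": "lock screen"}

rng = random.Random(0)  # fixed seed so the sketch is repeatable
training = {
    name: [synth_signal(level, rng=rng) for _ in range(20)]
    for name, level in MATERIALS.items()
}
centroids = train_centroids(training)

# Classify a fresh synthetic reading and look up the matching UI action.
predicted = classify(centroids, synth_signal(0.5, rng=rng))
action = ACTIONS[predicted]
```

The point of the sketch is the pipeline shape: labelled readings per object or material, a trained model, and a lookup from the predicted label to an interface action, with no change to the objects themselves.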

Presenter(s):

Hui-Shyong Yeo, University of St Andrews

Barrett Ens, University of South Australia

Aaron Quigley, University of St Andrews