siggraph

Posters Exhibits

Full Conference Pass

Full Conference 1-Day Pass

Basic Conference Pass

Experience Pass

Visitor Pass

Exhibitor Pass

Date/Time: 27 - 30 November 2017, 09:00am - 06:00pm

Venue: Foyer of BHIRAJ Hall 1-3

Location: Bangkok Int'l Trade & Exhibition Centre (BITEC)

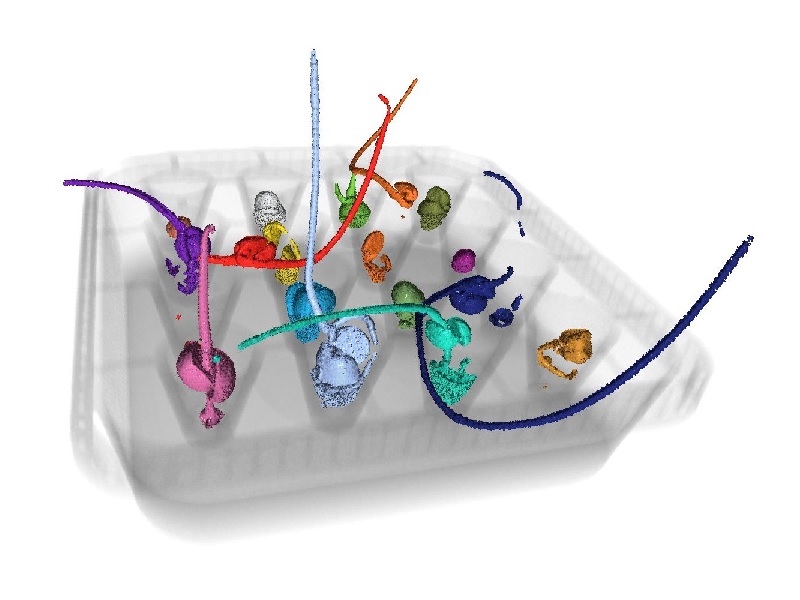

4D Computed Tomography Measurement for Growing Plant Animation (Topic: Animation)

Summary: This study presents a technique to generate growing plant animations by scanning a target plant with X-ray CT over the course of days to obtain a 4D-CT image, and presents an algorithm to segment it semi-automatically.

Author(s): Sakiho Kato, Keio University

Tomofumi Narita, Shibaura Institute of Technology

Chika Tomiyama, Shibaura Institute of Technology

Takashi Ijiri, Keio University, Shibaura Institute of Technology

Hiroya Tanaka, Keio University

Speaker(s): Sakiho Kato, Keio University

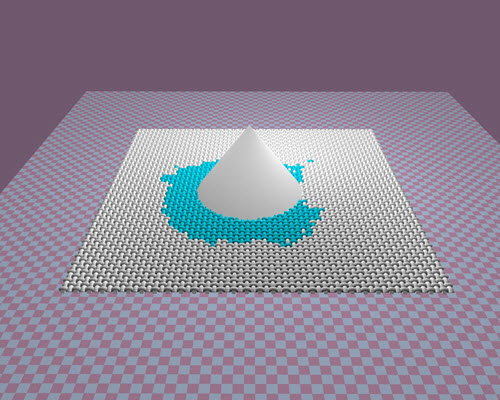

Liquid Wetting across Porous Anisotropic Textiles (Topic: Animation)

Summary: This paper presents a new simulation framework for liquid wetting across porous textiles with anisotropic inner structure. The influence of the textile's properties, such as contact angle, hygroscopicity and porosity, on the wetting process is considered in detail. By liquid-textile coupling, the wetting process is simulated through liquid absorption/desorption by fiber and liquid diffusion by means of inner fiber, intersected fibers and capillary action. This framework can simulate liquid wetting across a porous anisotropic textile when single or multiple drops of water are dripped onto it, with realistic results.

Author(s): Aihua Mao, School of Computer Science & Engineering, South China University of Technology

Speaker(s): Aihua Mao, School of Computer Science & Engineering, South China University of Technology

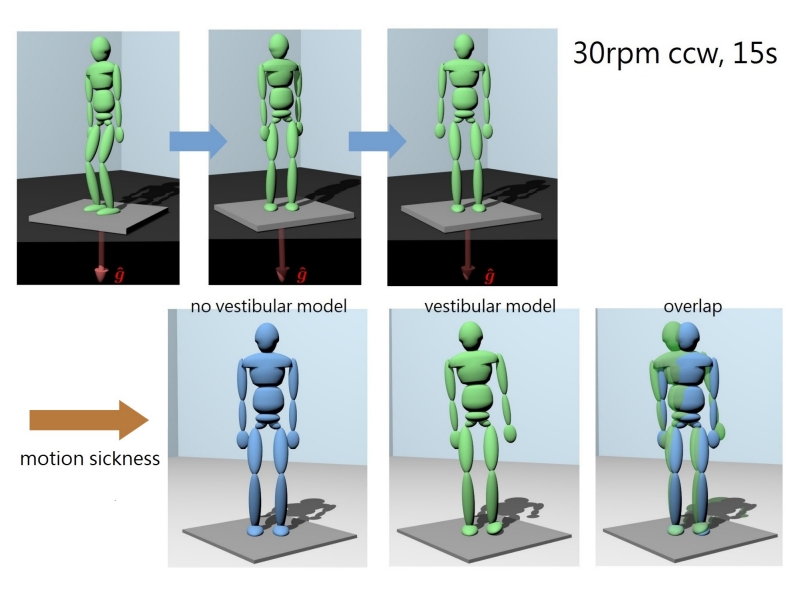

Motion Sickness Simulation Based on Sensorimotor Control (Topic: Animation)

Summary: We propose a general framework for sensorimotor control, integrating a balance controller and a vestibular model, to generate perception-aware motions that exhibit motion sickness.

Author(s): Chen-Hui Hu, National Chiao Tung University, Taiwan

Ping Chen, National Chiao Tung University, Taiwan

Yan-Ting Liu, National Chiao Tung University, Taiwan

Wen-Chieh Lin, National Chiao Tung University, Taiwan

Speaker(s): Chen-Hui Hu, National Chiao Tung University, Taiwan

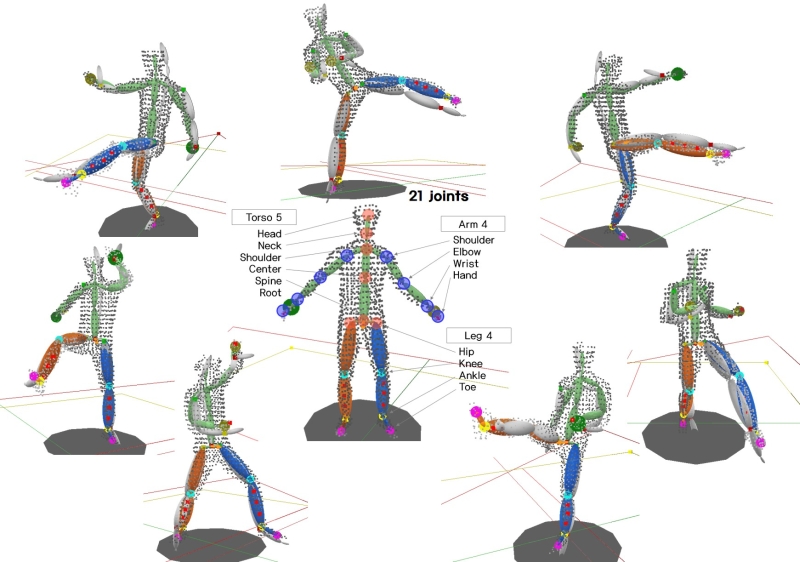

User Pose Estimation based on Multiple Depth Sensors (Topic: Animation)

Summary: Despite the diverse applications of motion capture technology, it is challenging to capture people's motions unless they are wearing relevant equipment. This paper proposes a method of estimating joint positions from depth data, together with optimal joint selection for restoring the pose with multiple Kinect sensors. The proposed method enhances the accuracy of pose restoration, enables real-time capture of dynamic motions such as Taekwondo, and applies to training programs for the general public.

Author(s): Seongmin Baek, Electronics and Telecommunications Research Institute

Myunggyu Kim, Electronics and Telecommunications Research Institute

Speaker(s): Seongmin Baek, ETRI

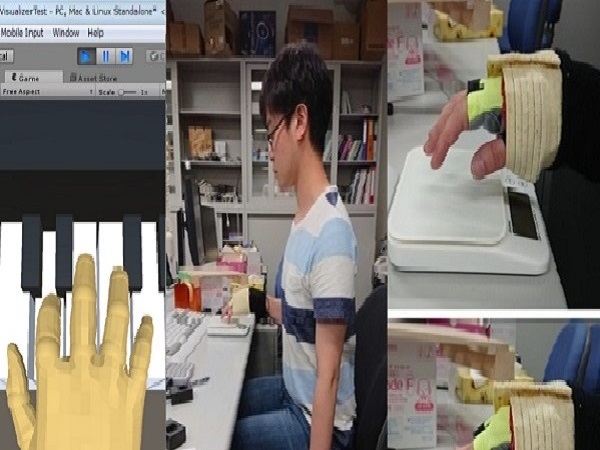

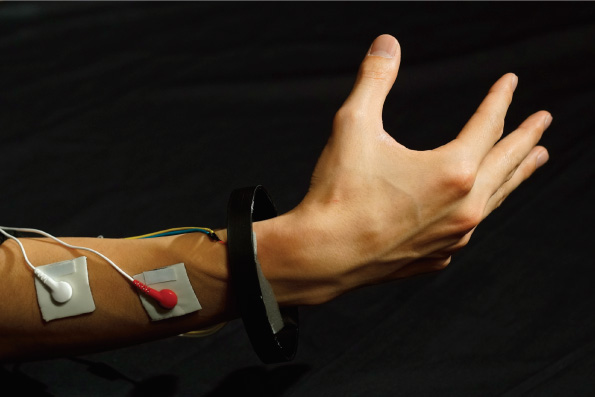

A Proposal for Wearable Controller Device and Finger Gesture Recognition using Surface Electromyography (Topic: Hardware)

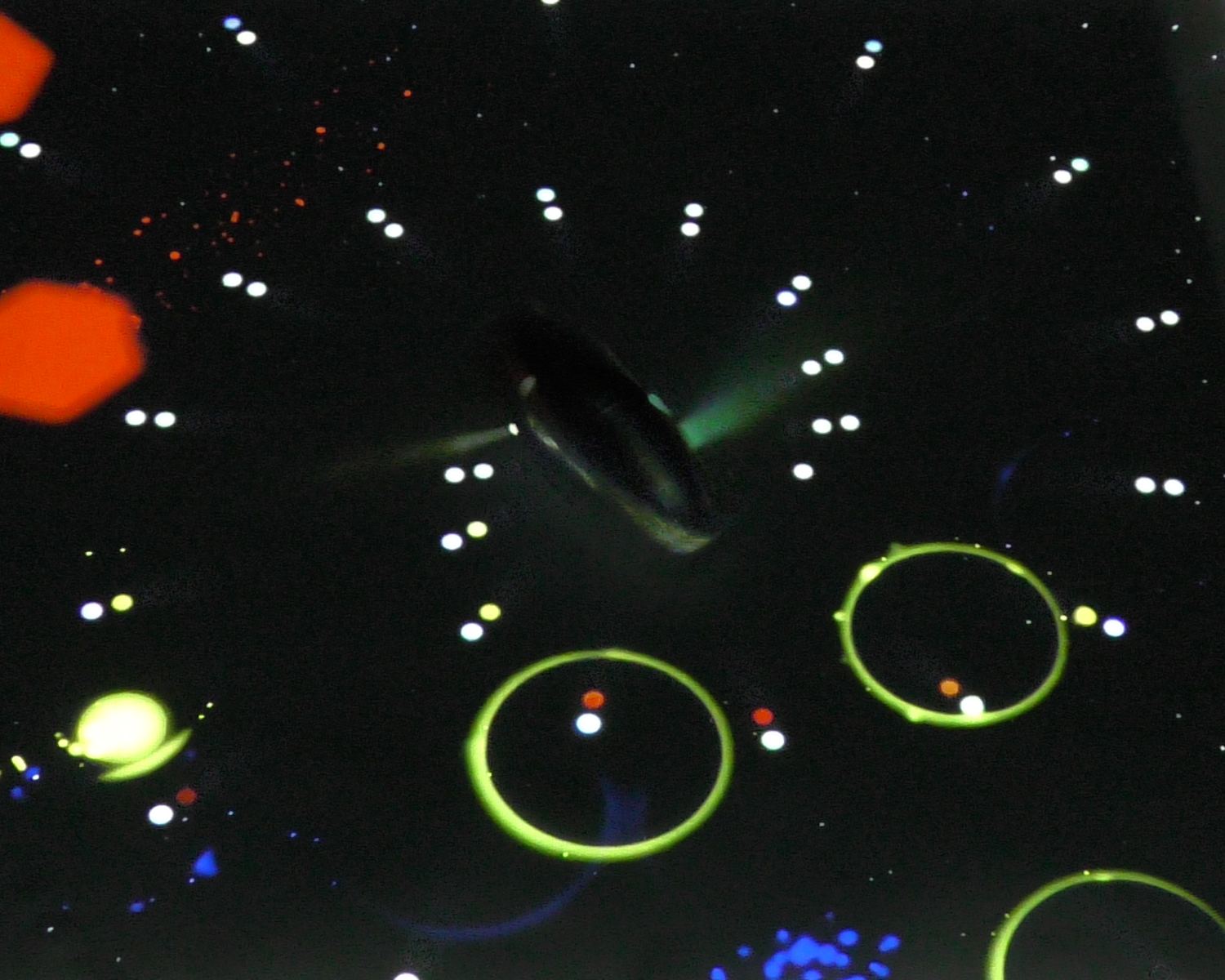

Summary: Hand and finger motion is very complicated and is achieved by intertwining forearm (extrinsic) and finger (intrinsic) muscles. We created a wearable fingerless glove controller using dry sEMG (surface electromyography) electrodes that sense only the intrinsic hand muscles. Our wearable interface device is lightweight and easy to wear. In offline analysis, we identified the tapping motion of fingers using the wearable glove. In total, eleven features were extracted, and linear discriminant analysis (LDA) was used as the classifier. The average discrimination result of the inter-subject analysis was 88.61±3.61%. In online analysis, we created a demo in Unity that reflects actual movement in virtual space. Our demo predicted finger motions and reproduced them in the virtual space.
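To illustrate the classification step mentioned in this summary, here is a minimal sketch of two-class Fisher linear discriminant analysis in plain Python. It is not the authors' implementation: the poster uses eleven features that are not listed here, so the two feature names, the sample values, and the class labels `index_tap` and `middle_tap` are all hypothetical.

```python
# Two-class Fisher LDA on 2-D feature vectors, standard library only.
# Feature values are invented; think of them as (mean absolute value,
# waveform length) of one sEMG window. These features are an assumption.

INDEX_TAP = [(0.80, 2.1), (0.90, 2.4), (1.00, 2.0), (0.85, 2.3)]
MIDDLE_TAP = [(0.30, 1.0), (0.40, 1.2), (0.35, 0.9), (0.25, 1.1)]


def mean_vector(rows):
    """Component-wise mean of a list of 2-D vectors."""
    n = len(rows)
    return [sum(r[i] for r in rows) / n for i in range(2)]


def scatter(rows, mu):
    """Within-class scatter matrix (2x2) around the class mean."""
    s = [[0.0, 0.0], [0.0, 0.0]]
    for r in rows:
        d = (r[0] - mu[0], r[1] - mu[1])
        for i in range(2):
            for j in range(2):
                s[i][j] += d[i] * d[j]
    return s


def fit_lda(class_a, class_b):
    """Return (w, threshold): projection direction and decision boundary."""
    mu_a, mu_b = mean_vector(class_a), mean_vector(class_b)
    sa, sb = scatter(class_a, mu_a), scatter(class_b, mu_b)
    sw = [[sa[i][j] + sb[i][j] for j in range(2)] for i in range(2)]
    det = sw[0][0] * sw[1][1] - sw[0][1] * sw[1][0]
    if det == 0:
        raise ValueError("pooled scatter matrix is singular")
    inv = [[sw[1][1] / det, -sw[0][1] / det],
           [-sw[1][0] / det, sw[0][0] / det]]
    diff = (mu_a[0] - mu_b[0], mu_a[1] - mu_b[1])
    # w = Sw^-1 (mu_a - mu_b): the direction that best separates the classes.
    w = [inv[0][0] * diff[0] + inv[0][1] * diff[1],
         inv[1][0] * diff[0] + inv[1][1] * diff[1]]
    # Decision threshold: projection of the midpoint between the class means.
    mid = ((mu_a[0] + mu_b[0]) / 2, (mu_a[1] + mu_b[1]) / 2)
    return w, w[0] * mid[0] + w[1] * mid[1]


def predict(w, threshold, x):
    """Classify one feature vector by the side of the boundary it falls on."""
    score = w[0] * x[0] + w[1] * x[1]
    return "index_tap" if score > threshold else "middle_tap"


w, threshold = fit_lda(INDEX_TAP, MIDDLE_TAP)
print(predict(w, threshold, (0.9, 2.2)))
print(predict(w, threshold, (0.3, 1.0)))
```

A real pipeline would handle more than two classes and eleven-dimensional features, for which a numerical library is the more practical choice.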

Author(s): Ayumu Tsuboi, Waseda University

Mamoru Hirota, Waseda University

Junki Sato, Waseda University

Speaker(s): Ayumu Tsuboi, Mamoru Hirota, Junki Sato, Waseda University

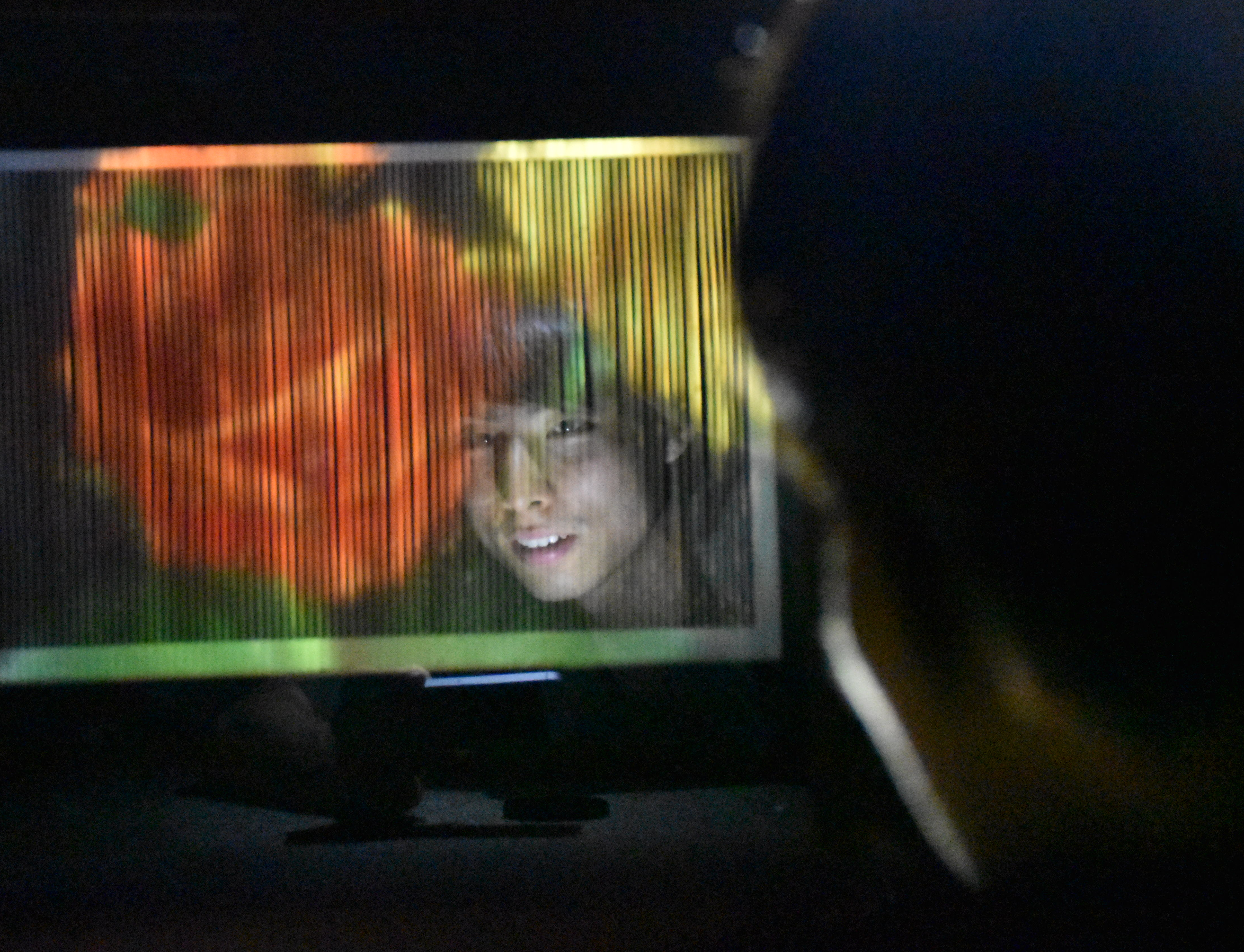

Aerial Image on Retroreflective Particles (Topic: Hardware)

Summary: We present a new method to render aerial images using retroreflective particles. Transmissive particles coated with reflective film on one half behave as retroreflective material. In our method, we place the retroreflective particles in the air. Falling retroreflective particles act as a diffuser, like a fog screen. By passing the incident light through a plurality of retroreflective particles facing various directions in the air, our display achieves a wide viewing angle. The results showed that at a viewing angle shifted 60 degrees from the position of highest luminance, the luminance was about 54% of the highest luminance. In addition to the wide viewing angle, the particle size enables the images on this display to be sharp. Another advantage of this system is that the image can be observed without facing the light source.

Author(s): Shinnosuke Ando, University of Tsukuba

Kazuki Otao, University of Tsukuba

Speaker(s): Shinnosuke Ando, Kazuki Otao, Kazuki Takazawa, Yusuke Tanemura, Yoichi Ochiai, University of Tsukuba

Aerial Light-Field Image Augmented Between You and Your Mirrored Image (Topic: Hardware)

Summary: This paper proposes a method to form an aerial light-field image in front of a mirror. Our system consists of a specially fabricated punctured retro-reflector covered with a quarter-wave retarder and a reflective polarizer. The floating aerial 3D image is augmented between you and your mirrored image.

Author(s): Tomofumi Kobori, Utsunomiya University

Tomoyuki Okamoto, Utsunomiya University

Sho Onose, Utsunomiya University

Kazuki Shimose, Utsunomiya University

Masao Nakajima, Nikon Corporation

Toru Iwane, Nikon Corporation

Hirotsugu Yamamoto, Utsunomiya University

Speaker(s): Hirotsugu Yamamoto, Utsunomiya University

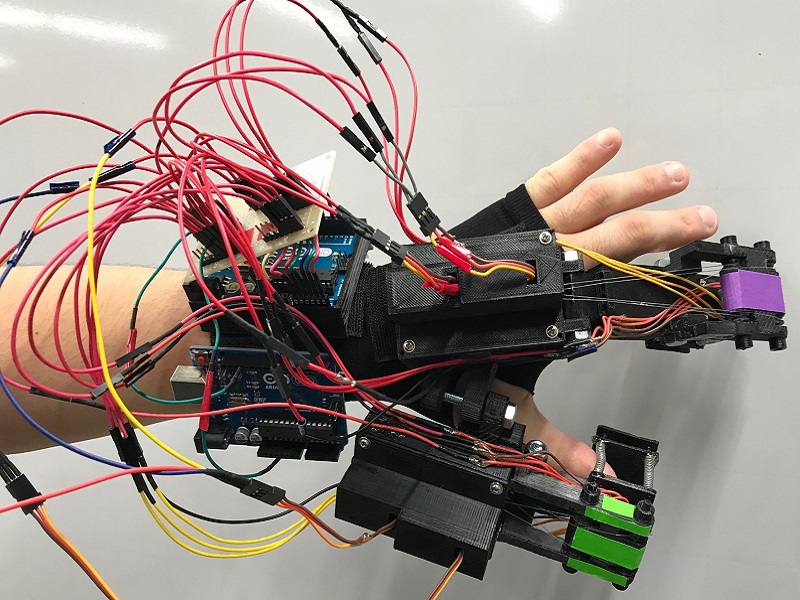

Improvement of A Finger-Mounted Haptic Device Using Surface Contact (Topic: Hardware)

Summary: Recently, several studies on haptic devices have been conducted. Haptic devices use force feedback to give users the perception of touching an object, such as a CG object. Since they provide force feedback from a single point on the object surface where the user touches, users touch the object by point contact. However, they cannot provide the sensation of touching an object with a finger pad, because humans touch objects by surface contact rather than point contact. We focused on this characteristic and developed a surface-contact haptic device. To touch a CG object by surface contact, we use a plane interface. It provides force feedback by approximating the tangent plane of the CG object surface where the user touches, and conveys the sense of grabbing a CG object with the finger pads.

Author(s): Makoto Yoda, Graduate School of Soka University, Tokyo Japan

Hiroki Imamura, Graduate School of Soka University, Tokyo Japan

Speaker(s): Makoto Yoda, Hiroki Imamura, Department of Information System Science, Graduate School of Engineering, Soka University

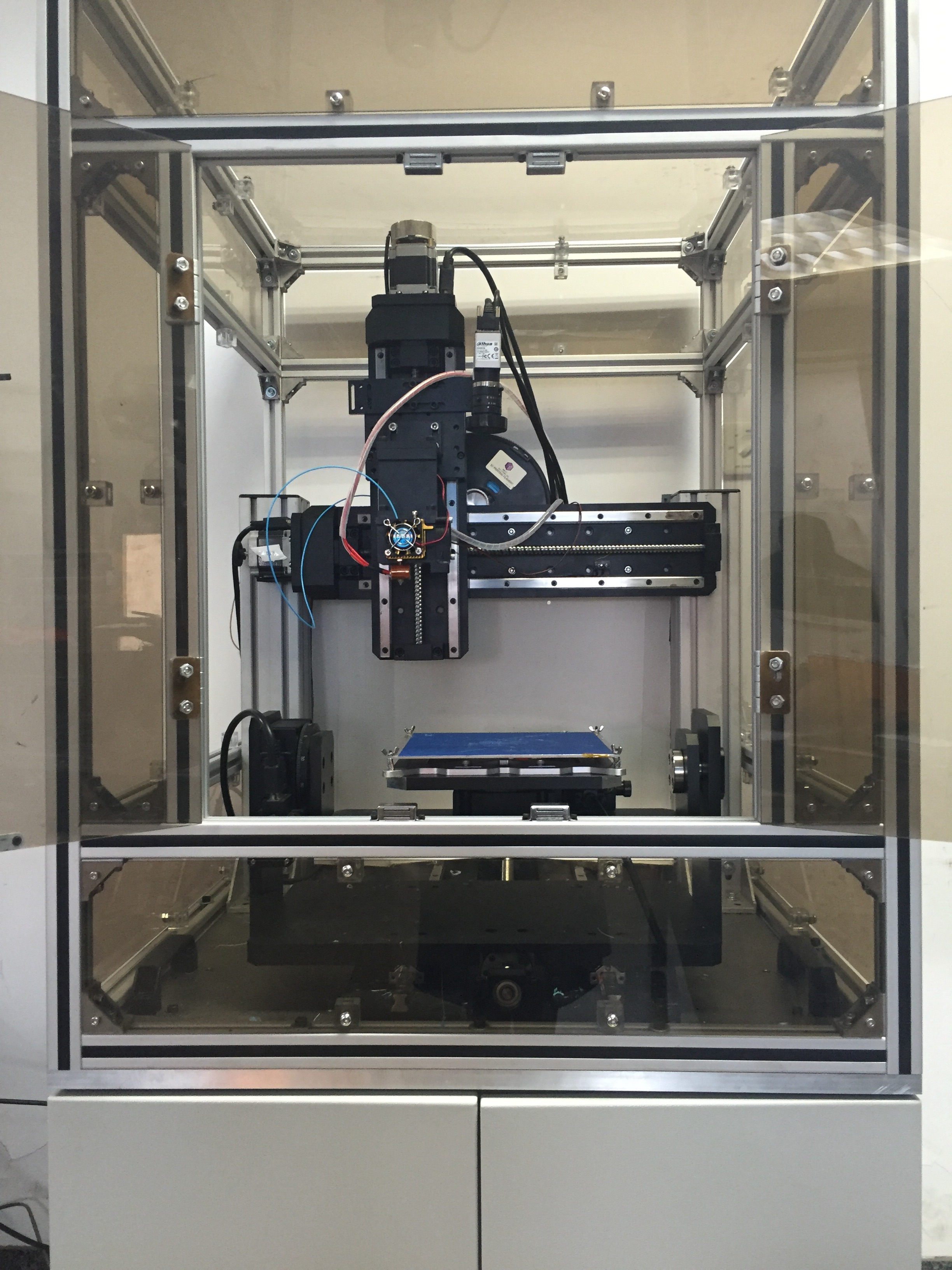

Multi-DOF 3D Printing with Visual Surveillance (Topic: Hardware)

Summary: In this poster, we propose a multi-DOF (degree of freedom) 3D printing system with visual surveillance assistance. Different from common 3D printers with only three translational axes, our printer is equipped with two additional rotational axes, and a visual surveillance module which helps to enhance the printing precision during the rotation. The input model is first segmented such that each part can be printed in a direction with less supporting material. We demonstrate that the proposed system could save most of the supporting materials compared to existing works.

Author(s): Lifang Wu, Beijing University of Technology

Miao Yu, Beijing University of Technology

Yisong Gao, Beijing University of Technology

Dong-Ming Yan, NLPR, Institute of Automation, Chinese Academy of Sciences

Ligang Liu, The School of Mathematical Sciences, University of Science and Technology of China, Hefei, China

Speaker(s): Lifang Wu, Beijing University of Technology

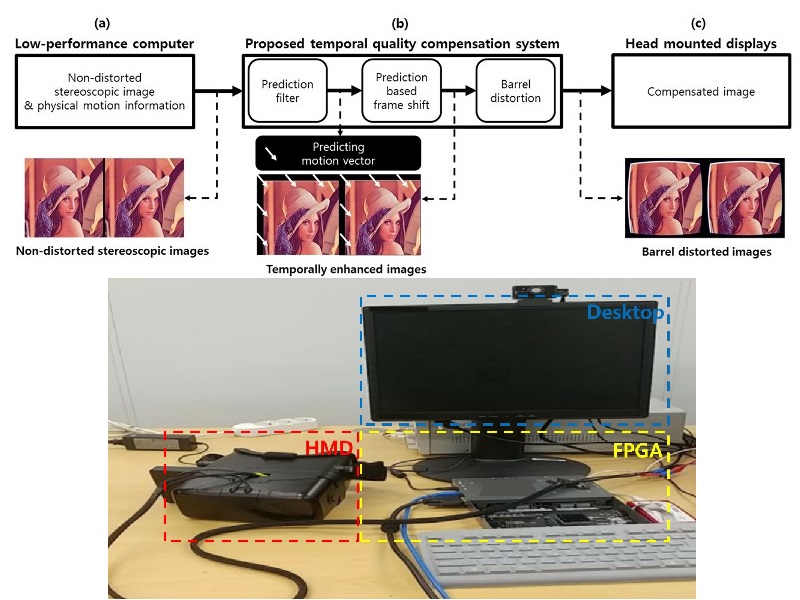

Real-time Temporal Quality Compensation Technique for Head Mounted Displays (Topic: Hardware)

Summary: An asynchronous time warp (ATW) is used to reduce motion-to-photon latency in head mounted displays (HMDs). To implement the ATW, a graphics processing unit (GPU) must be able to perform the time warp during image rendering. However, typical GPUs do not support preemption optimized for the ATW. Even when preemption is performed in the GPU, visual artifacts such as judder can occur. Therefore, high-priced, high-performance GPUs optimized for the ATW must be used to enhance the temporal image quality. In this paper, a new field-programmable gate array (FPGA) based HMD system is proposed to perform temporal quality compensation without using ATW. The proposed system can enhance the perceived image quality in the HMD even when using typical low-performance GPUs. We develop the hardware system on a low-priced FPGA handling output images with 1440x2560 pixel resolution. Experimental results show that the average PSNR of our system is 30.15 dB for the temporal image quality and the total execution time of the system is 1.48 ms. Thus, our system can be implemented in real time with improved temporal image quality.
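For readers unfamiliar with the PSNR figure quoted in this summary, the sketch below shows the standard peak signal-to-noise ratio computation for two grayscale images. The poster does not say which frames were compared or how they were prepared, so the function name `psnr`, the nested-list image format, and the 8-bit peak value are assumptions made for illustration.

```python
import math


def psnr(reference, test, peak=255.0):
    """Peak signal-to-noise ratio in dB between two equally sized
    grayscale images given as nested lists of pixel values."""
    squared_error = 0.0
    count = 0
    for ref_row, test_row in zip(reference, test):
        if len(ref_row) != len(test_row):
            raise ValueError("images must have the same dimensions")
        for r, t in zip(ref_row, test_row):
            squared_error += (r - t) ** 2
            count += 1
    if count == 0 or len(reference) != len(test):
        raise ValueError("images must be non-empty and equally sized")
    mse = squared_error / count
    if mse == 0:
        return math.inf  # identical images
    return 10.0 * math.log10(peak ** 2 / mse)


# Tiny made-up example: each pixel is off by 10 gray levels.
reference_frame = [[100, 100]]
compensated_frame = [[110, 90]]
print(round(psnr(reference_frame, compensated_frame), 2))
```

Higher values mean the compared frame is closer to the reference. An average over many frame pairs, as reported in the summary, would simply be the mean of such per-frame values.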

Author(s): Jung-Woo Chang, Sogang University

Suk-Ju Kang, Sogang University

Min-Woo Seo, Sogang University

Song-Woo Choi, Sogang University

Sang-Lyn Lee, LG Display

Ho-Chul Lee, LG Display

Eui-Yeol Oh, LG Display

Jong-Sang Baek, LG Display

Speaker(s): Jung-Woo Chang, Sogang University

A Deep Convolutional Neural Network for Continuous Zoom with Dual Cameras (Topic: Imaging and Video)

Summary: This paper proposes a convolutional neural network for synthesizing continuous zoom from a pair of images captured by two nearby cameras with two fixed focal lengths. The network is composed of two sub-nets. The first one estimates the disparity map between input images so that they can be aligned. The second sub-net takes the aligned image pair for boosting the image resolution for simulating continuous zoom. We have built a prototype camera system and trained a network for the task. Experiments show that the proposed method can fuse the two captured images effectively and enhance the image resolution.

Author(s): Hsueh-I Chen, National Taiwan University

Claire Chen, National Taiwan University

Yung-Yu Chuang, National Taiwan University

Speaker(s): Hsueh-I Chen, National Taiwan University

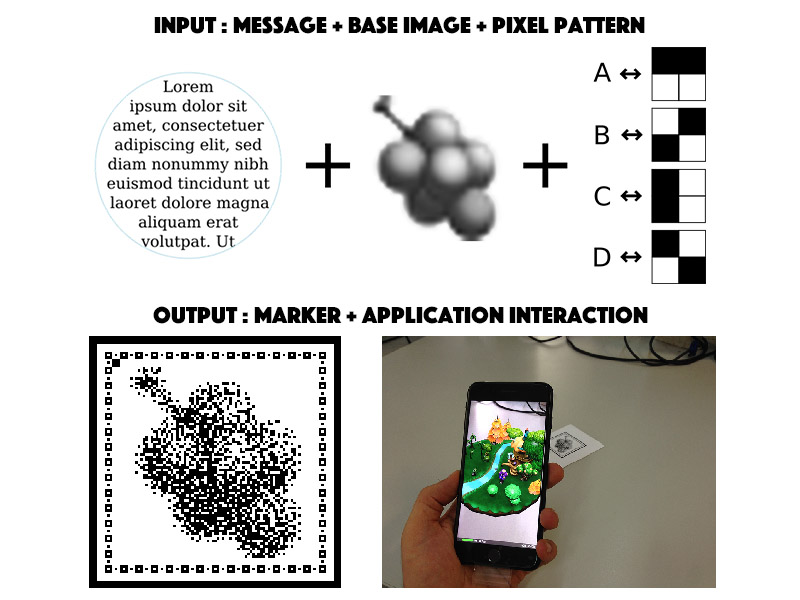

An Application of Halftone Pattern Coding in Augmented Reality (Topic: Imaging and Video)

Summary: This work introduces a technique to compose markers containing information that can be retrieved a posteriori from a photo. Each marker is a combination of black and white pixels arranged in such a way that some groups of pixels encode symbols of the coded message, while the others yield the aesthetic of the marker. These markers can be used for many purposes, including augmented reality applications. They can be easily detected in a photo, and the encoded information is the basis for parameterizing several types of augmented reality applications.

Author(s): Bruno Patrão, Institute of Systems and Robotics - University of Coimbra

Leandro Cruz, Institute of Systems and Robotics - University of Coimbra

Nuno Gonçalves, Institute of Systems and Robotics - University of Coimbra

Speaker(s): Bruno Patrão, Leandro Cruz, Nuno Gonçalves, Institute of Systems and Robotics - University of Coimbra

Estimating the Simulator Sickness in Immersive Virtual Reality with Optical Flow Analysis (Topic: Imaging and Video)

Summary: In this paper, we conduct a user study to understand the relationship between optical flow and simulator sickness. We record videos from a head-mounted display (HMD) while users are moving around in VR, and then ask them to take a simulator sickness questionnaire (SSQ) after the experience. Finally, we analyze the optical flow and estimate its correlation with the SSQ.
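The final analysis step in this summary can be sketched as a correlation between a per-participant optical flow statistic and the SSQ score. The poster does not state which correlation measure or flow statistic was used, so the Pearson coefficient, the variable names, and the numbers below are assumptions made for illustration.

```python
import math


def pearson(xs, ys):
    """Pearson correlation coefficient of two equally long sequences."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for constant input")
    return cov / math.sqrt(var_x * var_y)


# Hypothetical data: one value per participant.
mean_flow_magnitude = [1.2, 2.5, 3.1, 4.8, 5.0]  # pixels per frame (made up)
ssq_total_score = [11.2, 18.7, 26.2, 41.1, 37.4]  # made-up SSQ totals

r = pearson(mean_flow_magnitude, ssq_total_score)
print(f"Pearson r = {r:.3f}")
```

With questionnaire scores, a rank-based measure such as Spearman's coefficient is a common alternative when a linear relationship cannot be assumed.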

Author(s): Juin-Yu Lee, National Taiwan University

Ping-Hsuan Han, National Taiwan University

Ling Tsai, National Taiwan University

Jih-Ting Peng, National Taiwan University

Yang-Sheng Chen, National Taiwan University

Yi-Ping Hung, National Taiwan University

Speaker(s): Jiun-Yu Lee, National Taiwan University

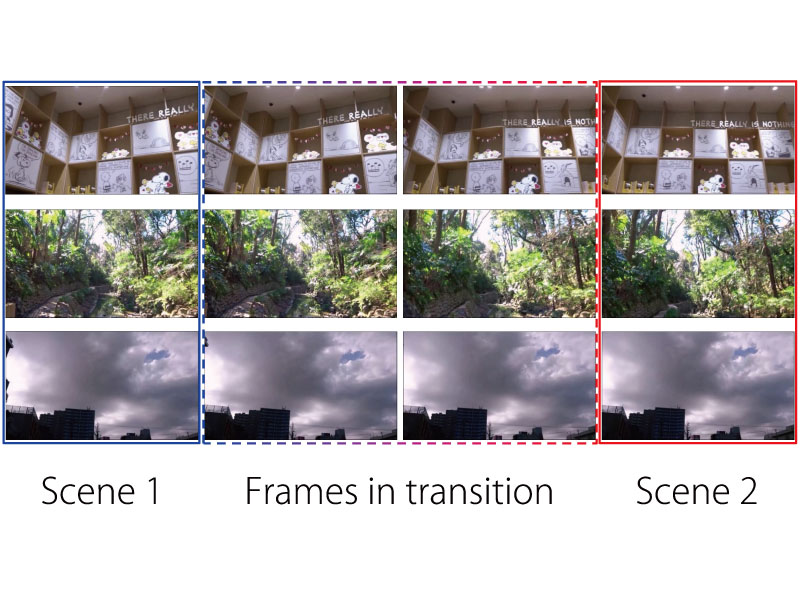

Seamless Video Scene Transition Using Hierarchical Graph Cuts (Topic: Imaging and Video)

Summary: A video clip consists of many scenes and shots. An editor manually cuts and pastes those scenes, placing them in order to compose the clip. This process is one of the most common in video editing. For scene transitions, there are many transition effects available in video editing software. Though transition effects emphasize the transition, there are few effects that seamlessly switch scenes and shots. Here we propose a seamless video scene transition method, which, if available to editors, will provide them with the ability to place the scenes without worrying about transitions. Moreover, one good scene can be composed by seamlessly connecting multiple bad takes using the seamless transition. The main factor that makes a scene boundary visible is the discontinuity between adjacent pixels during a scene change. If these boundary pixel values are similar, scene transition will be less noticeable. We define the difference of pixel values at this boundary as the connection cost. Our method parses the pixels at the scene boundary seams that minimize the connection cost using hierarchical graph cuts.
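The connection cost described in this summary can be illustrated with a much simpler stand-in than the method itself. The sketch below sums absolute pixel differences between two frames and then searches exhaustively for the pair of whole frames with the lowest cost. This replaces the hierarchical graph cut, which selects a per-pixel seam, with a brute-force whole-frame cut. The function names and the tiny grayscale frames are hypothetical.

```python
def connection_cost(frame_a, frame_b):
    """Sum of absolute pixel differences between two grayscale frames
    given as nested lists of equal size."""
    return sum(
        abs(a - b)
        for row_a, row_b in zip(frame_a, frame_b)
        for a, b in zip(row_a, row_b)
    )


def best_cut(clip_a, clip_b):
    """Return (cost, i, j): leave clip_a at frame i and enter clip_b at
    frame j so that the connection cost is minimal.
    Exhaustive search, not a graph cut."""
    best = None
    for i, frame_a in enumerate(clip_a):
        for j, frame_b in enumerate(clip_b):
            cost = connection_cost(frame_a, frame_b)
            if best is None or cost < best[0]:
                best = (cost, i, j)
    return best


# Two made-up clips of 2x2 grayscale frames.
clip_a = [[[0, 0], [0, 0]], [[10, 10], [10, 10]]]
clip_b = [[[50, 50], [50, 50]], [[12, 9], [10, 11]]]
print(best_cut(clip_a, clip_b))
```

A seam-based approach would instead let different pixels switch clips at different times, which is what makes the transition hard to notice.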

Author(s): Tatsunori Hirai, Komazawa University

Speaker(s): Tatsunori Hirai, Komazawa University

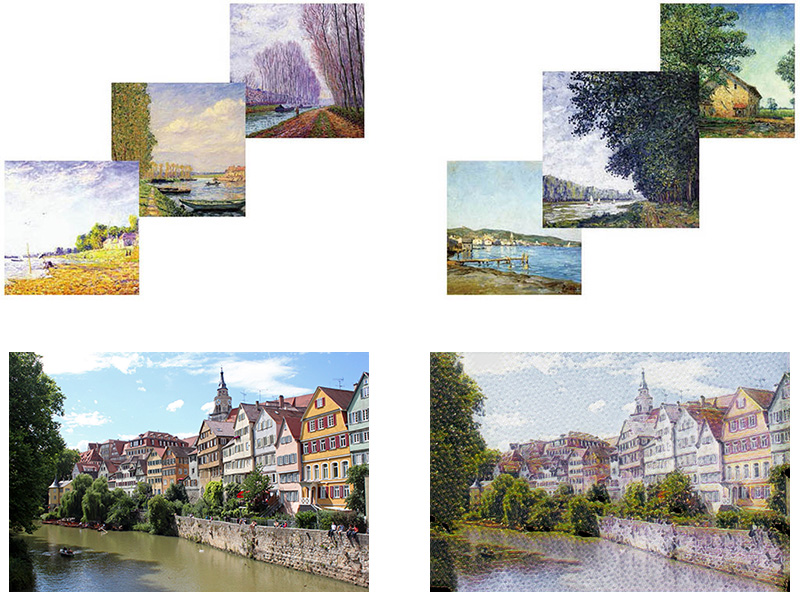

Style-Oriented Representative Paintings Selection (Topic: Imaging and Video)

Summary: In this work, we propose a novel method to select representative paintings of an artist. Unlike traditional clustering problems, we do not try to assign each image a correct label. Instead, we focus on finding the most representative paintings among all of them. We first use K-means to preliminarily cluster an artist's paintings. The clustering centres are the original representative images. Then we employ rejection to pick out the unrepresentative and confused samples. Finally, we update the K classes and obtain the new representative images.
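The three stages named in this summary (K-means, rejection, update) can be sketched on toy 2-D points as follows. The poster does not give the rejection criterion or the image features, so the distance-ratio rule, its `ratio` parameter, the deterministic initialisation, and the sample points are all assumptions made for illustration.

```python
def dist2(p, q):
    """Squared Euclidean distance."""
    return sum((a - b) ** 2 for a, b in zip(p, q))


def mean(points):
    return [sum(v) / len(points) for v in zip(*points)]


def assign(points, centroids):
    """Group points by their nearest centroid."""
    clusters = [[] for _ in centroids]
    for p in points:
        nearest = min(range(len(centroids)), key=lambda c: dist2(p, centroids[c]))
        clusters[nearest].append(p)
    return clusters


def kmeans(points, k, iterations=20):
    centroids = [list(p) for p in points[:k]]  # deterministic init for the sketch
    for _ in range(iterations):
        clusters = assign(points, centroids)
        centroids = [mean(m) if m else c for m, c in zip(clusters, centroids)]
    return centroids, assign(points, centroids)


def reject_ambiguous(clusters, centroids, ratio=0.5):
    """Hypothetical rejection rule: keep a sample only if it is clearly
    closer to its own centroid than to any other centroid."""
    kept = []
    for c, members in enumerate(clusters):
        others = [o for o in range(len(centroids)) if o != c]
        kept.append([
            p for p in members
            if dist2(p, centroids[c])
            <= ratio * min(dist2(p, centroids[o]) for o in others)
        ])
    return kept


def representatives(clusters):
    """For each updated cluster, the member closest to the new cluster mean."""
    return [
        min(m, key=lambda p: dist2(p, mean(m))) if m else None
        for m in clusters
    ]


# Made-up 2-D feature vectors standing in for paintings.
PAINTINGS = [(0, 0), (10, 10), (0, 1), (1, 0), (10, 11), (11, 10), (5, 5.2)]

centroids, clusters = kmeans(PAINTINGS, 2)
kept = reject_ambiguous(clusters, centroids)
print(representatives(kept))
```

In this toy data the point `(5, 5.2)` lies between the two groups and is the kind of confused sample the rejection step is meant to discard before the representatives are recomputed.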

Author(s): Yingying Deng, Institute of Automation, Chinese Academy of Sciences

Fan Tang, Institute of Automation, Chinese Academy of Sciences

Weiming Dong, Institute of Automation, Chinese Academy of Sciences

Hanxing Yao, LLVISION

Bao-Gang Hu, Institute of Automation, Chinese Academy of Sciences

Speaker(s): Yingying Deng, NLPR, Institute of Automation, Chinese Academy of Sciences

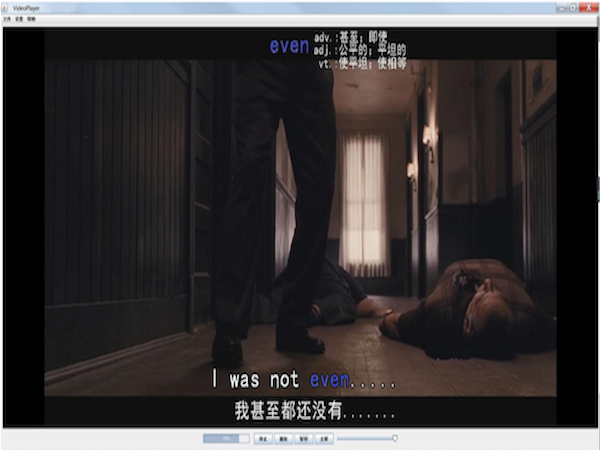

Subtitle Positioning for E-learning Videos Based on Rough Gaze Estimation and Saliency Detection (Topic: Imaging and Video)

Summary: Subtitles are commonly shown in a variety of video categories and are especially useful as translated subtitles for native speakers. Traditional subtitles are placed at the bottom of videos to avoid occluding essential video content. However, frequently moving between important video content and the subtitles has a negative impact on focusing on the video itself. Recently, some research has tried more flexible subtitle positioning strategies. However, these methods are effective only under restrictions on the video content and the devices adopted. In this work, we propose a novel subtitle content organization and placement framework based on rough gaze estimation and saliency detection.

Author(s): Bo Jiang, Nanjing University of Posts and Telecommunications

Sijiang Liu, Nanjing University of Posts and Telecommunications

Liping He, Nanjing University of Posts and Telecommunications

Weimin Wu, Nanjing University of Posts and Telecommunications

Hongli Chen, Nanjing University of Posts and Telecommunications

Yunfei Shen, Nanjing University of Posts and Telecommunications

Speaker(s): Bo Jiang, Nanjing University of Posts and Telecommunications

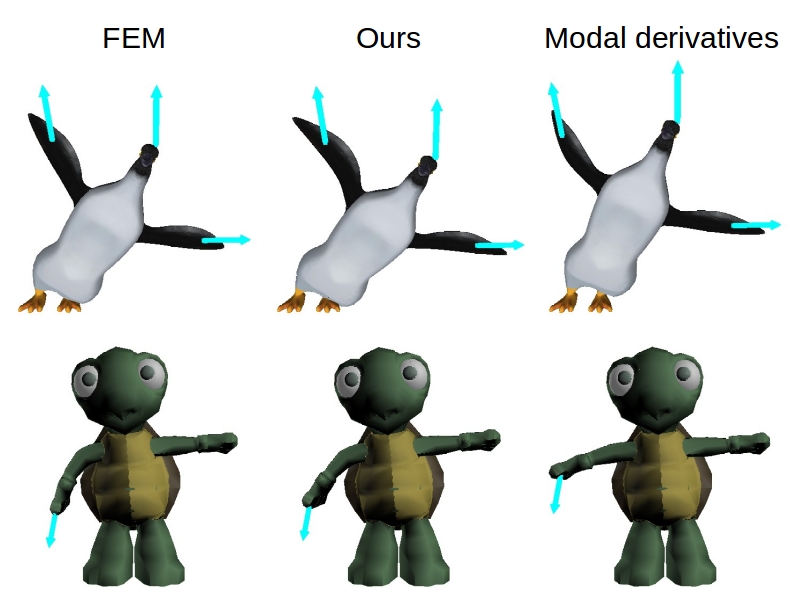

Deformation Simulation Based on Model Reduction with Rigidity Sampling (Topic: Interaction)

Summary: We propose rigidity guided sampling to select more compact sample points that can be utilized to obtain more physically-correct bases for the subspace of spatio-temporal elastic deformations, so that the run-time simulation accuracy is increased when the external forces are applied to the objects.

Author(s): Shuo-Ting Chien, National Chiao Tung University, Taiwan

Chen-Hui Hu, National Chiao Tung University, Taiwan

Cheng-Yang Huang, National Chiao Tung University, Taiwan

Yu-Ting Tsai, Yuan Ze University

Wen-Chieh Lin, National Chiao Tung University, Taiwan

Speaker(s): Chen-Hui Hu, National Chiao Tung University, Taiwan

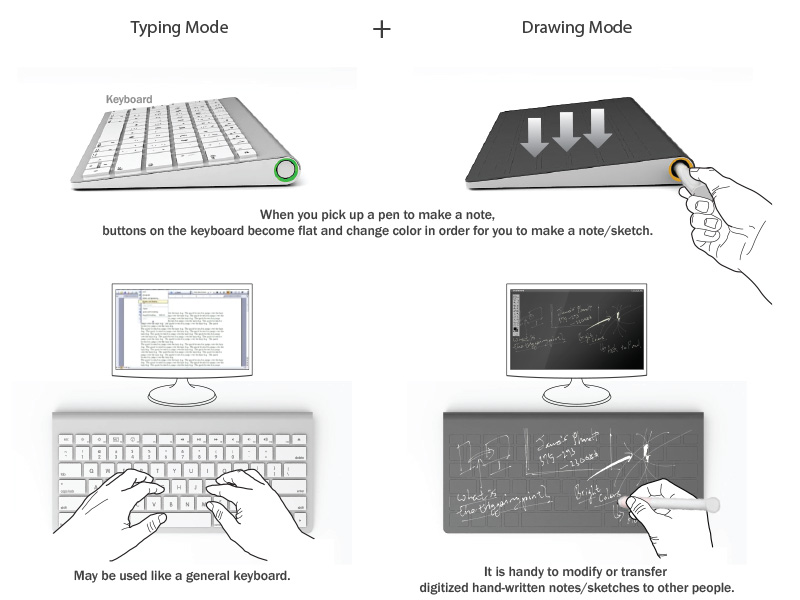

Dualboard: Integrated User Interface between Typing and Handwriting (Topic: Interaction)

Summary: Currently, a variety of digital devices and associated input interfaces are being introduced. However, when you need to write down something important quickly and precisely, you may use a traditional input such as a pen. For example, while using digital devices, you may still need to find a notepad and a pen, which can be an inconvenience if you need to store and modify something that you've written down. This concept provides an integrated user interface where it is possible to continuously and naturally switch between typing and handwriting while performing tasks on the computer, one of the most representative digital devices.

Author(s): Seunghyun Woo, Hyundai Motor Company

Speaker(s): Seunghyun Woo, Hyundai Motor Company

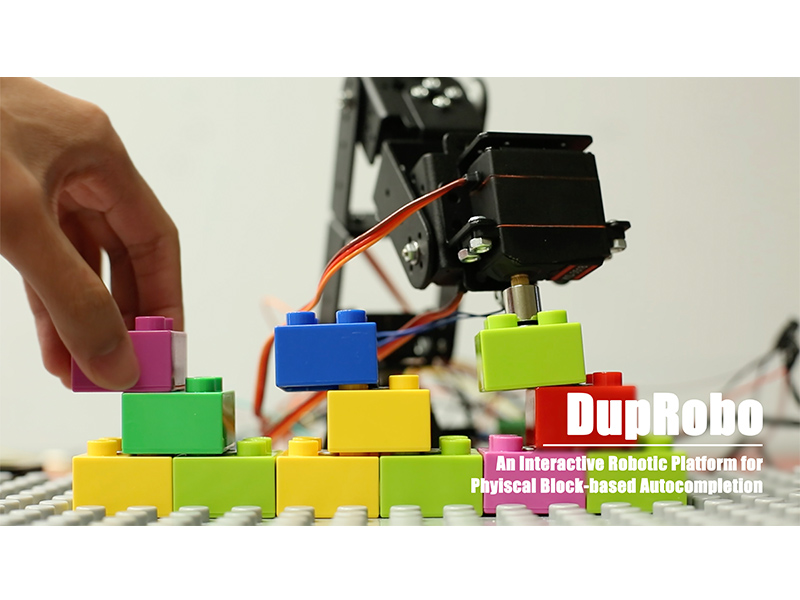

DupRobo: An Interactive Robotic Platform for Physical Block-based Autocompletion (Topic: Interaction)

Summary: In this paper, we present DupRobo, an interactive robotic platform for tangible block-based design and construction. Inspired by drawing autocompletion interfaces, DupRobo supports user-customisable exemplars, repetition control, and tangible autocompletion through computer-vision and robotic techniques. With DupRobo, we aim to reduce users' workload in repetitive block-based construction while preserving the direct manipulability and intuitiveness of tangible model design.

Author(s): Taizhou Chen, School of Creative Media, City University of Hong Kong

Kening Zhu, School of Creative Media, City University of Hong Kong

Baochuan Yue, School of Creative Media, City University of Hong Kong

Feng Han, School of Creative Media, City University of Hong Kong

Yi-Shiun Wu, School of Creative Media, City University of Hong Kong

Speaker(s): Taizhou Chen, School of Creative Media, City University of Hong Kong

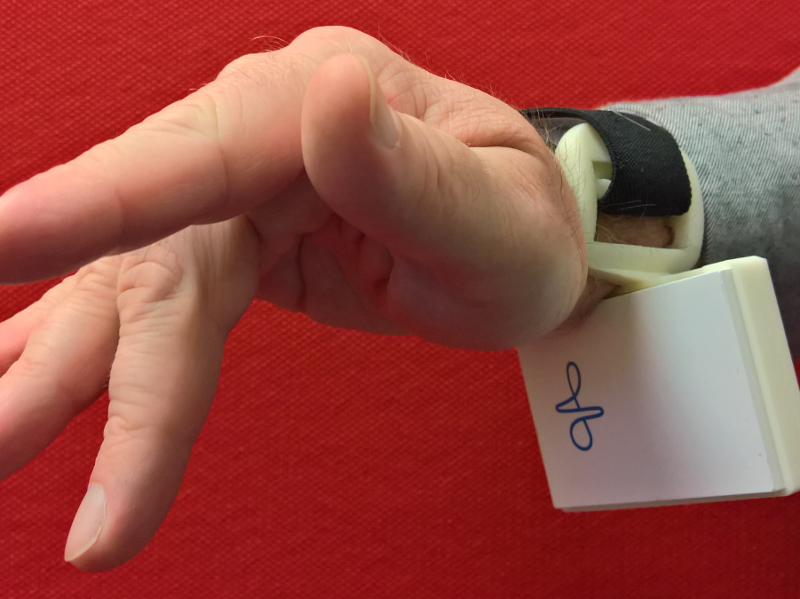

Exploring Mixed-Scale Gesture Interaction (Topic: Interaction)

Summary: This paper presents ongoing work toward a design exploration for combining microgestures with other types of gestures within the greater lexicon of gestures for computer interaction. We describe three prototype applications that show various facets of this multi-dimensional design space. These applications portray various tasks on a HoloLens augmented reality display, using different combinations of wearable sensors.

Author(s): Barrett Ens, University of South Australia

Aaron Quigley, University of St. Andrews

Hui-Shyong Yeo, University of St. Andrews

Pourang Irani, University of Manitoba

Thammathip Piumsomboon, University of South Australia

Mark Billinghurst, University of South Australia

Speaker(s): Barrett Ens, University of South Australia

MistFlow: A Fog Display for Visualization of Adaptive Shape-Changing Flow (Topic: Interaction)

Summary: We propose an interactive fog display that pseudo-synchronizes image content with the deformation generated by users. In the proposed method, a sense of natural synchronization between the shape-changing screen and the projected image is provided by using hand gesture detection and a physical simulation of collisions between falling particles and the user's hands.

Author(s): Kazuki Otao, University of Tsukuba

Takanori Koga, National Institute of Technology, Tokuyama College

Speaker(s): Kazuki Otao, University of Tsukuba

Natural Interaction for Media Consumption in VR Environment (Topic: Interaction)

Summary: This research suggests a set of natural interactions for VR that enable a user to interact in a VR environment without any handheld controller, using only eye gaze, gesture and voice, and shows their effectiveness by comparing them with conventional VR interactions that use remote controllers.

Author(s): Seung Hwan Choi, SAMSUNG Electronics Co., Ltd.

Hyunjin Kim, SAMSUNG Electronics Co., Ltd.

Jaeyoung Lee, SAMSUNG Electronics Co., Ltd.

Sangwoong Hwang, SAMSUNG Electronics Co., Ltd.

Speaker(s): Seung-Hwan Choi, Samsung Electronics

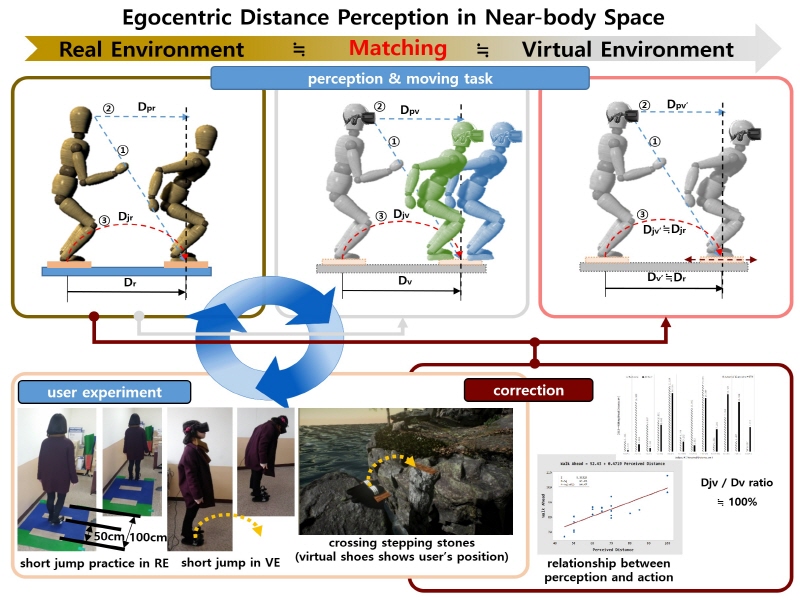

Perception Adjustment for Egocentric Moving Distance between Real Space and Virtual Space with See-closed-type HMD (Topic: Interaction)

Summary: This paper presents a study of matching egocentric distance perception between real space and a see-closed-type HMD-based virtual space. We need a calibration technique that reflects these characteristics when implementing virtual interaction directly tied to real-world space. This study experimentally analyzes the phenomenon in which the distance perceived by users decreases when they are in an HMD environment. We then provide a correction algorithm that allows users to maintain, in the virtual space, the distance perception they have in the real space.

Author(s): Ungyeon Yang, ETRI

Nam-Gyu Kim, Dong-Eui University

Ki-Hong Kim, ETRI

Speaker(s): Ungyeon Yang, ETRI

Prototyping Digital Signage Systems with High-Low Tech Interfaces (Topic: Interaction)

Summary: We prototyped interactive digital signage systems with custom-made physical interfaces to investigate how the addition of physical interfaces extends the design space. Our investigation is still in a preliminary phase, and the prototypes simply play back video files when triggered by the user's actions on the interface. Even so, the addition of physical interfaces seems to provide an interesting design space: it can be not only helpful for intuitive interaction but also beneficial as a means of creative expression for better message delivery by digital signage.

Author(s): Ting-Wen Chin, Chang Gung University

Yu-Yen Chuang, Chang Gung University

Yu-Ling Fang, Chang Gung University

Yi-Ning Jiang, Chang Gung University

Yi-Ching Kang, Chang Gung University

Wei-Hsin Kuo, Chang Gung University

Tzy-Wen To, Chang Gung University

Hiroki Nishino, Chang Gung University

Speaker(s): Ting-Wen Chin, Yu-Yen Chuang, Yu-Ling Fan, Ye-Ning Jiang, Yi-Ching Kang, Wei-Hsin Kuo, Tzu-Wen To, Hiroki Nishino, Department of Industrial Design, College of Management, Chang Gung University, Taiwan

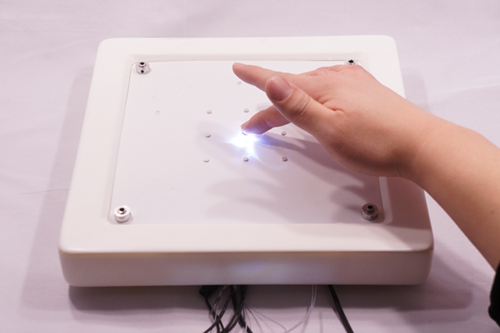

Spin and Roll: Convex Solids of Revolution as Playful Interface (Topic: Interaction)

Summary: We present an interactive system that uses convex solids of revolution as a playful interface manipulated on a tabletop display. The interaction is based on unique manipulations such as rolling and spinning, as well as the general ones of pointing and dragging. Touch information from the solids on the display is detected by a sensor sheet and used by applications in real time. To show the feasibility and effectiveness of the system, we developed content such as a drawing tool and some gaming applications that make use of the typical movements of the solids.

Author(s): Akihiro Matsuura, Tokyo Denki University

Yuma Ikawa, Tokyo Denki University

Yuka Takahashi, Outsourcing Technology Inc.

Hiroki Tone, Tokyo Denki University

Speaker(s): Akihiro Matsuura, Yuma Ikawa, Tokyo Denki University

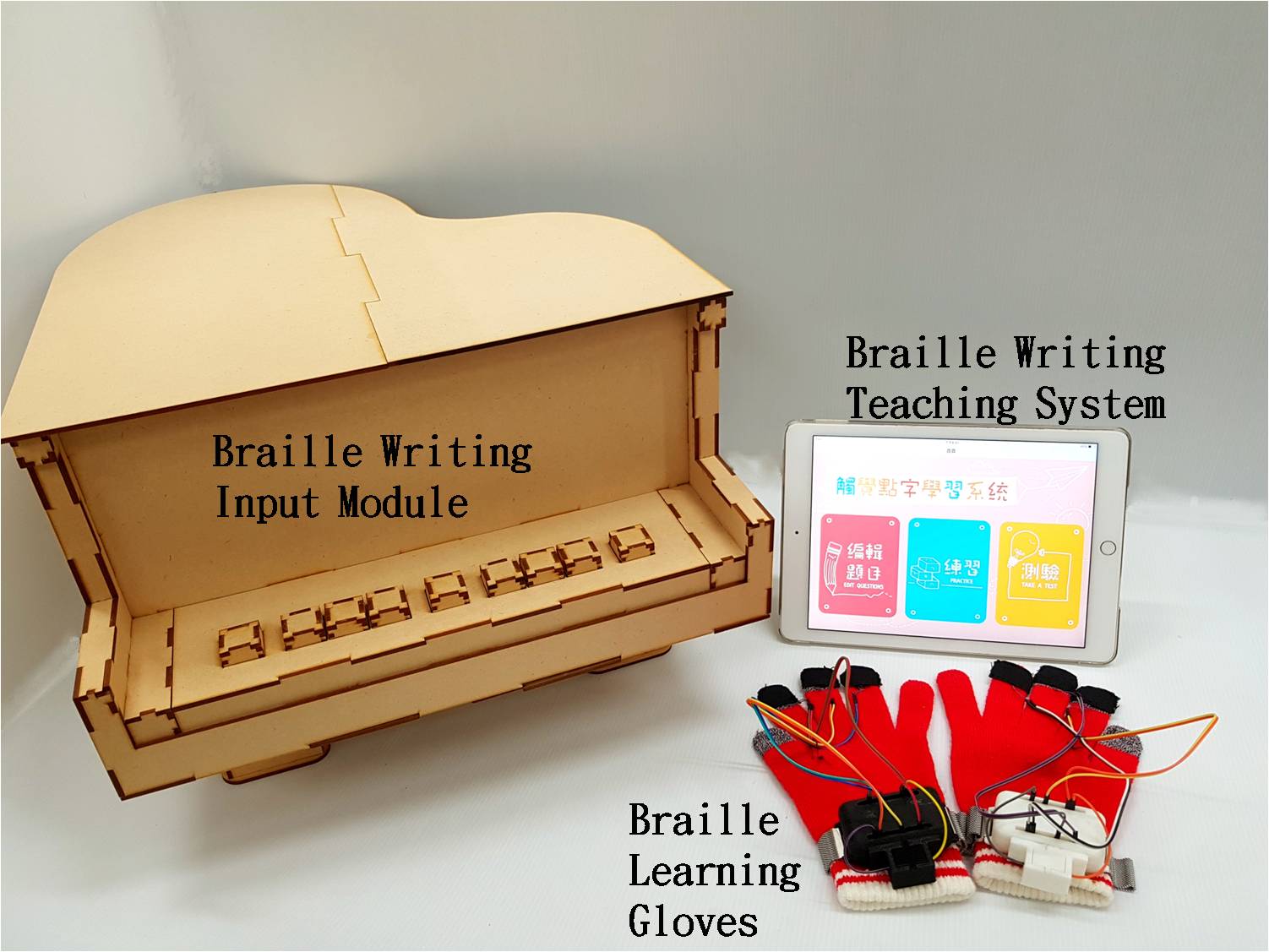

Tactile Braille Learning System to Assist Visual Impaired Users to Learn Taiwanese Braille (Topic: Interaction)

Summary: In this study, we designed a system, named the tactile Braille learning system, to assist people with visual impairments in learning Taiwanese Braille writing skills. The system design is based on the principle of simple operation and uses the learning concept of muscle memory. Users need only carry Braille learning gloves, a tablet computer, and earphones, and can proceed with everyday tasks as they usually do. During spare time, such as when waiting for the bus, taking the subway, or walking, this system enables visually impaired users to learn Taiwanese Braille writing skills more effectively.

Author(s): Tao-Jen Yang, Tamkang University

Wei-An Chen, Tamkang University

Yung-Long Chu, Tamkang University

Zi-Xin You, Tamkang University

Chien-Hsing Chou, Tamkang University

Speaker(s): Tao-Jen Yang, Wei-An Chen, Yung-Long Chu, Department of Electrical and Computer Engineering, Tamkang University

User Interface Applications in Desktop VR using a Mirror Metaphor (Topic: Interaction)

Summary: The main objective of this research work is to create a desktop VR environment to allow users to interact naturally with virtual objects positioned both in front and behind a screen. We propose a mirror metaphor that simulates a physical stereoscopic screen with the properties of a mirror. In addition to allowing users to interact with virtual objects positioned in front of a stereoscopic screen using virtual hands, the virtual hands can be transferred inside a virtual mirror and interact with objects behind the screen. When the virtual hands are operating inside the virtual mirror, they become like the reflection in a real mirror. We present application scenarios that would make use of the expanded interactable space in a desktop VR environment created by the proposed mirror metaphor.

Author(s): Santawat Thanyadit, Department of Computer Science and Engineering, Hong Kong University of Science and Technology

Ting-Chuen Pong, Hong Kong University of Science and Technology

Speaker(s): Santawat Thanyadit, Hong Kong University of Science and Technology

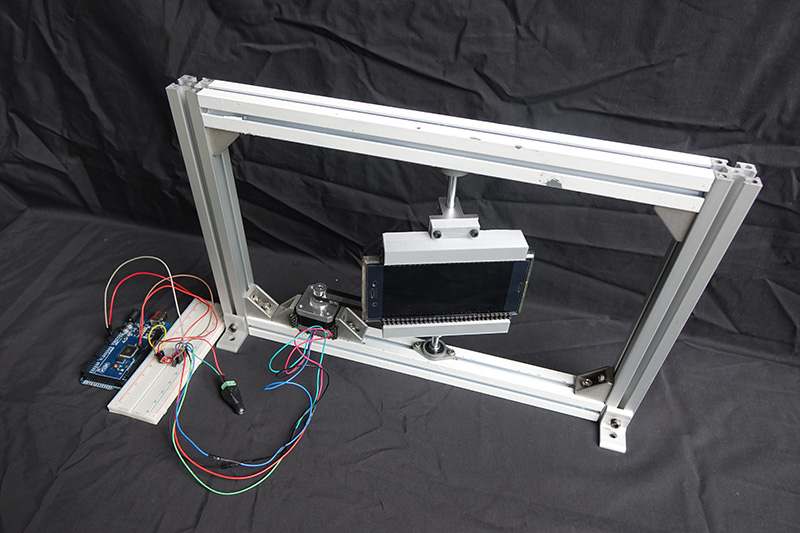

Affordable System for Measuring Motion-to-Photon Latency of Virtual Reality in Mobile Devices (Topic: Methods and Applications)

Summary: Virtual reality on mobile phones has recently become increasingly popular. In virtual reality, motion-to-photon latency is an important factor in causing simulator sickness. In this paper, we construct a low-cost, high-accuracy system that can precisely measure the latency of virtual reality (VR) on mobile devices. At present there is no convenient way to measure latency on mobile devices, so we provide a simple method to calculate it.

Author(s): Yu-Ju Tsai, Department of Computer Science and Information Engineering, National Taiwan University

Yu-Xiang Wang, Department of Computer Science and Information Engineering, National Taiwan University

Ming Ouhyoung, Graduate Institute of Networking and Multimedia, National Taiwan University

Speaker(s): Yu-Ju Tsai, Yu-Xiang Wang, National Taiwan University

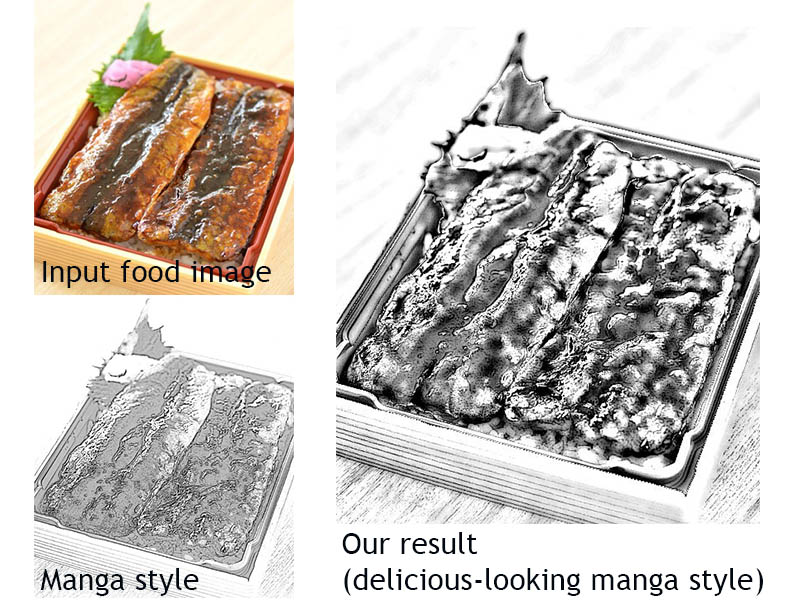

An Image Generation System of Delicious Food in a Manga Style (Topic: Methods and Applications)

Summary: In non-photorealistic rendering, preserving material appearance is challenging in terms of human sensitivity. Food in manga is one such topic. While the types of images presented in manga can range from realistic to deformed, food is generally drawn as realistic, delicious-looking images. However, drawing such pictures requires skill. Thus, we propose a system that emphasizes the gloss of wet and oily foods to generate delicious food pictures in a manga style.

Author(s): Yuki Morimoto, Kyushu University

Sakiko Fujieda, Shibaura Institute of Technology

Kazuo Ohzeki, Shibaura Institute of Technology

Speaker(s): Sakiko Fujieda, Yuki Morimoto, Shibaura Institute of Technology, Kyushu University

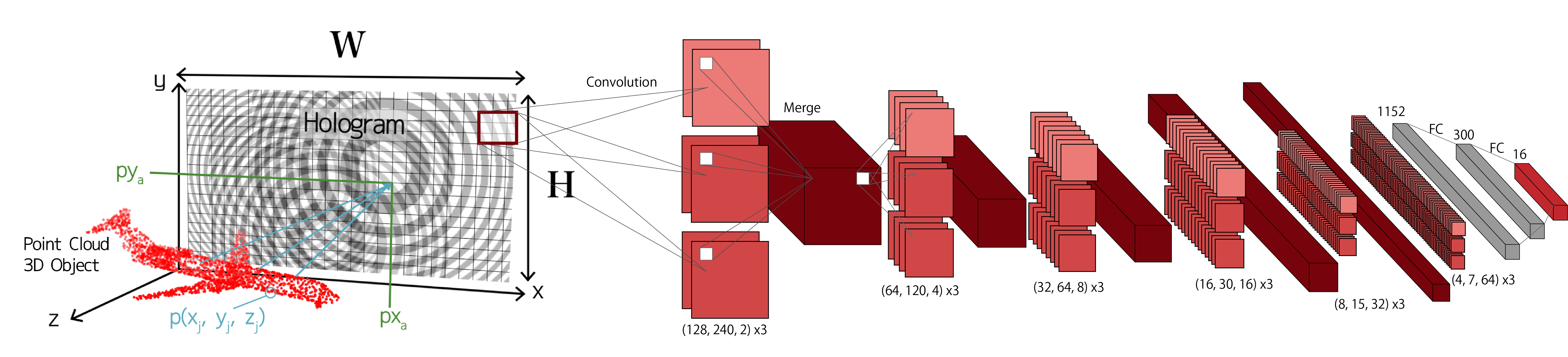

DeepHolo: Recognizing 3D Objects using a Binary-weighted Computer-Generated Hologram (Topic: Methods and Applications)

Summary: Three-dimensional (3D) models contain a wealth of information about every object in our environment. However, it is difficult to semantically recognize these media forms, even when they feature simply formed objects. We propose a DeepHolo network using binary-weighted computer-generated holograms (CGH) from point cloud models. This neural network facilitates manipulation of the 3D point cloud form and allows it to be processed as two-dimensional (2D) data. We construct the network using hologram data, as binary holograms are simpler and carry a lower information volume than the corresponding point cloud data (PCD), leading to fewer parameters. We then employ a Convolutional Neural Network to extract features by muting distracting factors. The Deep Neural Network (DNN) is trained to recognize holograms of 3D objects, so that its output is close to a point attributed to a 3D model of an object similar to the one depicted in the hologram. This purifying capability of the DNN is accomplished with the help of a large amount of training data consisting of holograms generated from PCD. The DeepHolo network allows high-precision object recognition, as well as processing of 3D data using few computing resources. We evaluate our method on a recognition task and show that it is much more space efficient and outperforms state-of-the-art methods.

Author(s): Naoya Muramatsu, University of Tsukuba

CW Ooi, University of Tsukuba

Yoichi Ochiai, University of Tsukuba

Yuta Itoh, University of Tsukuba

Speaker(s): Naoya Muramatsu, University of Tsukuba

Haptic Marionette: Wrist Control Technology Combined with Electrical Muscle Stimulation and Hanger Reflex (Topic: Methods and Applications)

Summary: Many devices and systems that directly control a user's hands have been proposed in previous studies. As methods for controlling a user's wrist, the Hanger Reflex and Electrical Muscle Stimulation (EMS) are often used. We propose a method that combines EMS and the Hanger Reflex. We use the Hanger Reflex to elicit supination and pronation, and EMS to cause flexion and extension. We believe that the proposed method contributes to the exploration of new devices and applications in the fields of haptics, virtual and augmented reality, and mobile and wearable interfaces.

Author(s): Mose Sakashita, University of Tsukuba

Yuta Sato, University of Tsukuba

Ayaka Ebisu, University of Tsukuba

Keisuke Kawahara, University of Tsukuba

Satoshi Hashizume, University of Tsukuba

Naoya Muramatsu, University of Tsukuba

Yoichi Ochiai, University of Tsukuba

Speaker(s): Satoshi Hashizume, University of Tsukuba

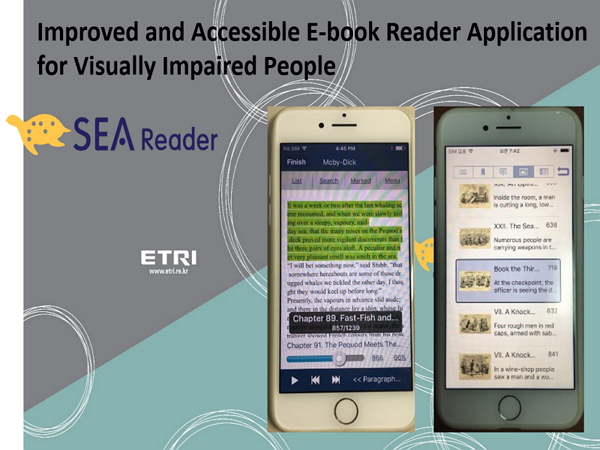

Improved and Accessible E-book Reader Application for Visually Impaired People (Topic: Methods and Applications)

Summary: This paper presents a study of an accessible e-book reader application for visually impaired people. We interviewed 27 visually impaired people to understand their usage patterns of e-books and user requirements in terms of functions and interface of an e-book reader application. Based on this survey, we were able to establish the basic direction of development of our e-book reader application; we implemented the first version of the e-book reader focusing on basic functionality. This version of the e-book reader obtained a value of user satisfaction of more than 75% in the usability test. We are continuing to develop the next version of this e-book reader with differentiated functions for reading professional books that include equations, tables, graphs, and so on. In addition, we are considering supporting a simple and fast input method and providing personalized UI. Beyond e-book readers, we hope that our study will be useful when designing and developing various mobile applications, considering that visually impaired users want to obtain information and experience equal to that available to the non-visually disabled.

Author(s): Hee Sook Shin, ETRI

Speaker(s): Heesook Shin, Electronics and Telecommunications Research Institute

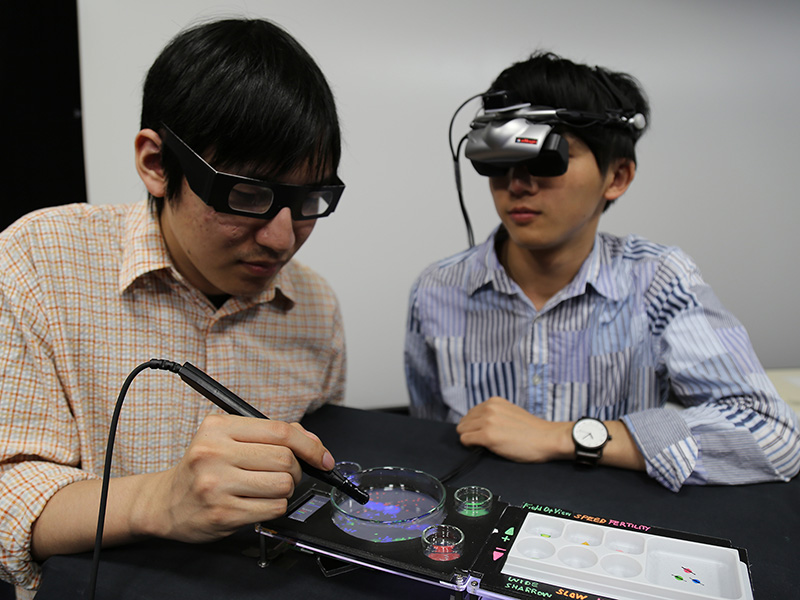

MitsuDomoe: Ecosystem Simulator of Virtual Creatures in Mixed Reality Petri Dish (Topic: Methods and Applications)

Summary: This project introduces a new tool for science education that uses mixed reality. Our objective is to create a new technical platform for augmenting the creative experience of users in science education. We developed a virtual ecosystem simulator with a physical mixed reality interface. Users can manipulate the computed ecosystem, and/or test their own simulation models again and again, without losing real lifeforms. Users interact with the ecosystem via the petri dish displays and a pipette-like device. The physical MR interface can provide users with the sense and enjoyment of performing experiments in a laboratory. The ecosystem model consists of three species of primitive artificial creatures and simulates the predation cycle of these virtual species in the petri dish device. The proposed simulation model assumes a symmetric and cyclical relation between three species that have essentially the same functions. In this scheme, the species have a predator-prey relation, but each species can behave as both predator and prey. By tuning the factors of the model, species can be changed to exhibit offensive or defensive behaviors. Users can control the factors by dropping virtual chemical liquid from the palette into the petri dishes. The system offers two types of user interface for participating in the experience: the physical MR interface and an immersive VR interface. With the VR interface, users can also experience immersive observation. Users can join the world from both inside and outside, and they can discuss and share the experience from each viewpoint. We expect this MitsuDomoe system prototype to serve as a new technical platform for augmenting the creative experience of users in science education.

Author(s): Toshikazu Ohshima, College Of Image Arts And Sciences, Ritsumeikan University

Ren Sakamoto, College Of Image Arts And Sciences, Ritsumeikan University

Speaker(s): Toshikazu Ohshima, Ritsumeikan University
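
As a rough illustration of the symmetric, cyclic predator-prey relation described in the MitsuDomoe summary, the sketch below integrates a generic three-species "rock-paper-scissors" model in which each species feeds on the next one and is eaten by the previous one. The equations, the `rate` parameter, and the function names are assumptions made for this example; the abstract does not state the authors' actual model or its tunable factors.

```python
def step(pop, rate, dt):
    """One explicit Euler step of a cyclic three-species model (illustrative).

    Species i preys on species (i + 1) % 3 and is preyed on by
    species (i - 1) % 3. `rate` is a hypothetical interaction strength.
    """
    n = len(pop)
    new_pop = []
    for i in range(n):
        prey = pop[(i + 1) % n]
        predator = pop[(i - 1) % n]
        # Gains from eating prey, losses from being eaten.
        growth = rate * pop[i] * (prey - predator)
        new_pop.append(pop[i] + dt * growth)
    return new_pop


def simulate(pop, rate=1.0, dt=0.001, steps=5000):
    """Return the list of population states, starting with the initial one."""
    history = [list(pop)]
    for _ in range(steps):
        pop = step(pop, rate, dt)
        history.append(pop)
    return history


if __name__ == "__main__":
    history = simulate([0.5, 0.3, 0.2])
    for state in history[::1000]:
        print(["%.3f" % x for x in state])
```

In this particular form the gains of one species are exactly the losses of another, so the total population stays constant while the three shares cycle. Raising or lowering `rate` only changes how fast the cycle runs.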

Silk Fabricator: Using Silkworms as 3D Printers (Topic: Methods and Applications)

Summary: This study proposes a new method of computational fabrication that uses living silkworms as fabrication tools for building free-form 3D sheets of silk. These silk sheets have a flexible structure and are applicable to various situations. We built a digital fabrication system to design 3D structures made of silk sheets.

Author(s): Riku Iwasaki, University of Tsukuba, Digital Nature Group

Yuta Sato, University of Tsukuba

Suzuki Ippei, Digital Nature Group

Youichi Ochiai, University of Tsukuba

Atushi Shinodo, Digital Nature Group

Kenta Yamamoto, University of Tsukuba

Kohei Ogawa, University of Tsukuba

Speaker(s): Riku Iwasaki, Digital Nature Group (University of Tsukuba)

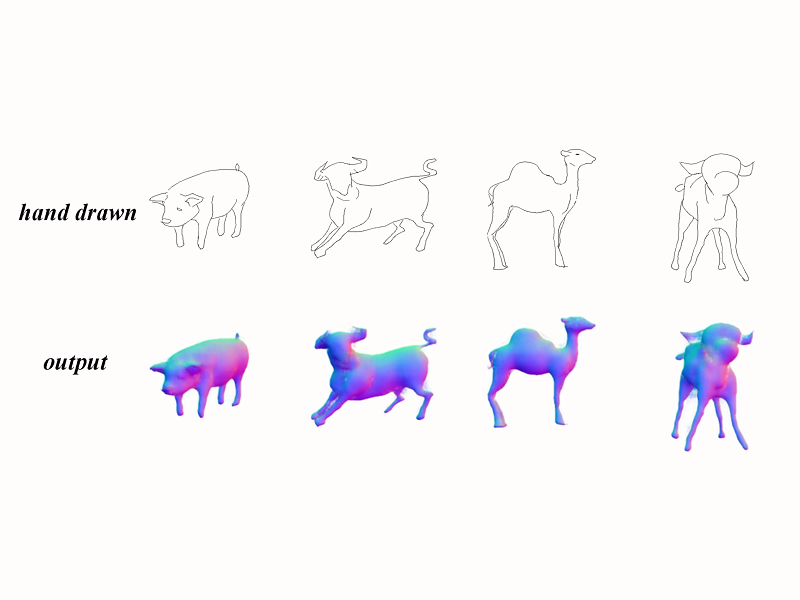

Sketch2Normal: Deep Networks for Normal Map Generation (Topic: Methods and Applications)

Summary: We introduce a novel method for inferring normal maps from sketches using a conditional WGAN and user guidance. We treat the sketch-to-normal map generation problem as an image translation problem, utilizing a conditional GAN-based framework to "translate" a sketch image into a normal map image. Our method outperforms previous approaches both qualitatively and quantitatively.

Author(s): Wanchao SU, School of Creative Media, City University of Hong Kong

Xin YANG, Dalian University of Technology

Hongbo FU, School of Creative Media, City University of Hong Kong

Speaker(s): Wanchao Su, School of Creative Media, City University of Hong Kong

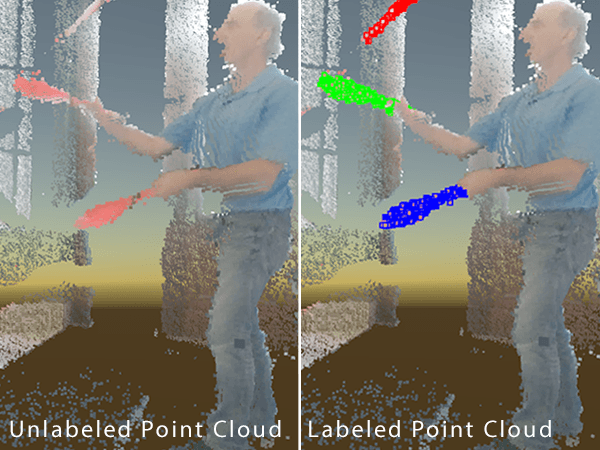

Visualization and Labeling of Point Clouds in Virtual Reality (Topic: Methods and Applications)

Summary: This is a Virtual Reality application for visualizing and labeling point cloud data sets. A series of point clouds is recorded as a video sequence, and frames can be played, paused and skipped just like in a video player. The user can walk around and inspect the data, and use a hand-held controller to select and label individual parts of the point cloud. The application enables a fast and intuitive way to produce labeled point cloud sets that can potentially be used as training data for classification algorithms.

Author(s): Jonathan Stets, Technical University of Denmark

Yongbin Sun, Massachusetts Institute of Technology (MIT)

Wiley Corning, MIT Media Lab

Scott Greenwald, MIT Media Lab

Speaker(s): Jonathan Dyssel Stets, Technical University of Denmark

Bidirectional Pyramid-based PMVS with Automatic Sky Masking (Topic: Modeling)

Summary: In this paper, we propose an improved method for generating a dense point cloud from aerial images captured by a UAV camera. The proposed method is based on a PMVS approach and generates dense 3D points using bidirectional pyramid images. This approach suits the characteristics of aerial image sets, in which the distance between the camera and the subject is large. In addition, the proposed method uses an automatic sky masking technique to generate a clean reconstruction result free of noise components in the sky area.

Author(s): Jungjae Yu, ETRI

Chang-Joon Park, ETRI

Speaker(s): Jung-Jae Yu, ETRI

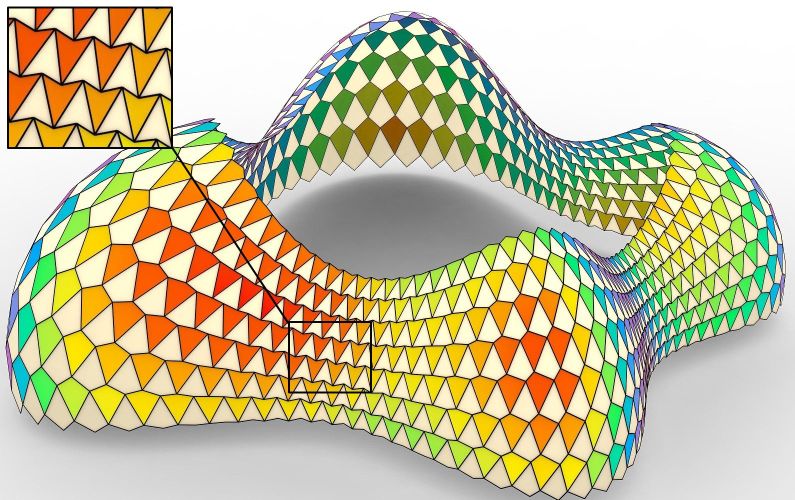

Polyhedral Meshes with Concave Faces (Topic: Modeling)

Summary: We study the design and optimization of polygonal meshes with concave planar faces. The motivating applications of this work are architecture, product design, and art. To discretize freeform surfaces into polyhedral meshes, we propose a novel class of regularizers for mesh aesthetics based on symmetries. They are useful for generating concave polygons in regions of negative Gaussian curvature and provide the necessary flexibility to create a smooth transformation of planar faces across regions where the Gaussian curvature alternates between positive and negative.

Author(s): Caigui Jiang, Max Planck Institute for Informatics

Renjie Chen, Max Planck Institute for Informatics

Speaker(s): Caigui Jiang, Renjie Chen, Max Planck Institute for Informatics

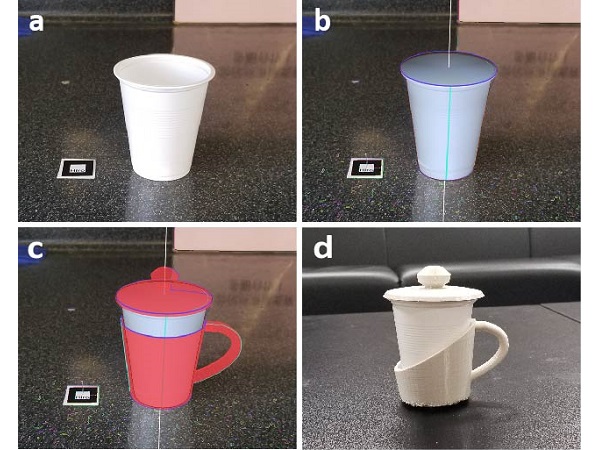

SmartSweep: Context-aware Modeling on a Single Image (Topic: Modeling)

Summary: We present an image-based tool to facilitate creating complementary parts for existing physical objects. Given a photo with limited 3D information provided by an AR marker, our system enables users to interactively reconstruct the shape of an existing object and then uses its features as context to augment the modeling procedure. This is achieved through a few flexible context-aware operations. We demonstrate the system's effectiveness through several fabricated examples.

Author(s): Yilan Chen, City University of Hong Kong

Wenlong Meng, Ningbo University

Shiqing Xin, Ningbo University

Hongbo Fu, City University of Hong Kong

Speaker(s): Yilan Chen, City University of Hong Kong

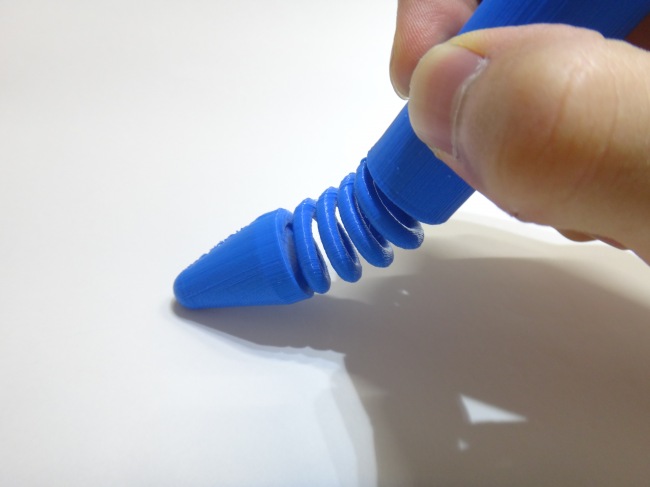

Spring-Pen: Reproduction of any Softness with the 3D Printed Spring (Topic: Modeling)

Summary: In this paper, we present a stylus pen that provides a new kind of tactile feedback when drawing pictures and writing letters on smartphones and tablets. The stylus pen uses a spring structure to reproduce the tactile sensation of softness, like the tip of a brush. In addition, a softness suited to personal taste can be expressed because the user can adjust the thickness and the number of steps of the spring coil with 3D CAD software. Moreover, the pen makes the feedback from the screen more realistic, which aids expression when drawing pictures and writing letters.

Author(s): Kengo Tanaka, University of Tsukuba School of Informatics

Yoichi Ochiai, University of Tsukuba, University of Tsukuba School of Informatics

Taisuke Ohshima, University of Tsukuba

Speaker(s): Kengo Tanaka, University of Tsukuba School of Informatics

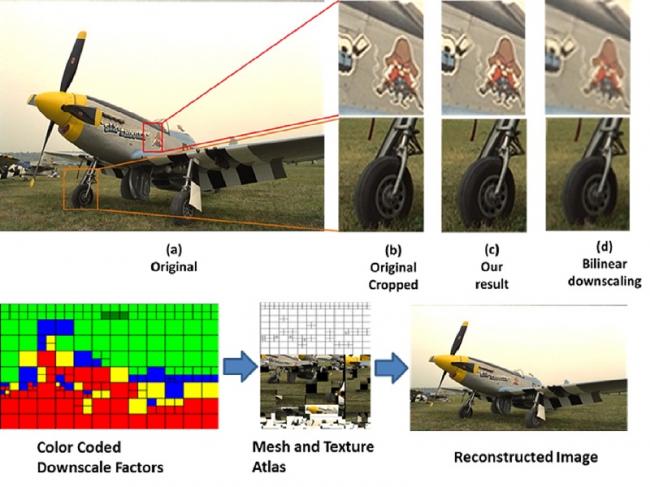

A Fast And Efficient Content Aware Downscaling Based Texture Compression Method For Mobile Devices (Topic: Multimedia)

Summary: Texture compression techniques have been of great interest, especially for the mobile phone industry, due to the relatively small amount of memory available. Current texture compression encoding techniques are slow and CPU intensive, making them unsuitable for on-the-fly encoding of uncompressed textures into compressed ones. To address this drawback, we present a fast and effective approach to reduce the run-time memory footprint of images. We apply a compression mechanism that uses image downscaling adaptive to the image content. The compression technique does not cause any perceivable loss of quality. In our experiments, we show that the proposed method is on average 6 dB higher in PSNR than bilinear uniform image downscaling at equal bits per pixel.

Author(s): Rahul Upadhyay, Samsung Research India - Bangalore, India

Ajay Surendranath, Samsung Research India - Bangalore, India

Speaker(s): Rahul Upadhyay, Samsung R&D Institute, Bangalore India
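
The summary above reports its result as a PSNR difference in dB. For readers unfamiliar with the metric, the following minimal sketch computes PSNR for 8-bit pixel values from the standard definition; the function names and the flat-list image representation are assumptions of this example and are not taken from the poster.

```python
import math


def mse(reference, test):
    """Mean squared error between two equally sized pixel sequences."""
    if len(reference) != len(test) or not reference:
        raise ValueError("inputs must be non-empty and of equal length")
    return sum((r - t) ** 2 for r, t in zip(reference, test)) / len(reference)


def psnr(reference, test, peak=255.0):
    """Peak signal-to-noise ratio in dB; `peak` is the maximum pixel value."""
    err = mse(reference, test)
    if err == 0:
        return math.inf  # identical images
    return 10.0 * math.log10(peak * peak / err)


if __name__ == "__main__":
    original = [10, 10, 10, 10]
    coarse = [12, 12, 12, 12]   # MSE = 4
    finer = [11, 11, 11, 11]    # MSE = 1
    print(psnr(original, finer) - psnr(original, coarse))
```

Because PSNR is logarithmic in the mean squared error, a gain of about 6 dB corresponds to roughly a fourfold reduction in mean squared error, as the two test signals in the usage example show.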

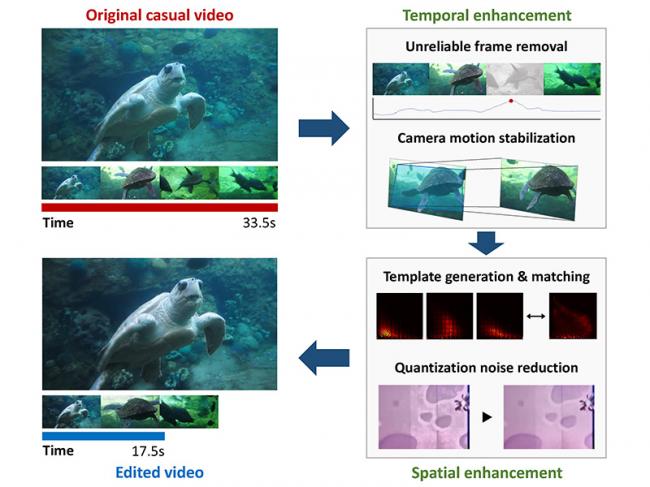

Aesthetic Temporal and Spatial Editing of Casual Videos (Topic: Multimedia)

Summary: This paper presents an automated video editing method to enhance aesthetic quality of casual videos in both temporal and spatial domains. In the temporal domain, our method divides the given video into several shots and refines the camera motion of each shot. In the spatial domain, our method adjusts the color characteristics of each shot to make them similar to those of professional videos. Results of the subjective evaluation demonstrate that the proposed method successfully improves the aesthetic quality of casual videos.

Author(s): Jun-Ho Choi, Yonsei University

Jong-Seok Lee, Yonsei University

Speaker(s): Jun-Ho Choi, Yonsei University

Automatic Generation of Visual-Textual Web Video Thumbnail (Topic: Multimedia)

Summary: Thumbnails provide an efficient way to perceive video content and give online viewers instant gratification when making relevance judgments. In this abstract, we introduce an automatic approach to generating magazine-cover-like thumbnails using the salient visual and textual metadata extracted from a video. To the best of our knowledge, it is one of the first attempts at automatically generating thumbnail-level visual-textual video summaries. Compared with a traditional snapshot, the synthesized thumbnail is more informative and attractive, which would be helpful for online video selection.

Author(s): Baoquan Zhao, Sun Yat-Sen University

Shujin Lin, Sun Yat-sen University

Xiaonan Luo, Guilin University of Electronic Technology

Speaker(s): Baoquan Zhao, Guilin University of Electronic Technology; Sun Yat-sen University

Content-Based Measure of Image Set Diversity (Topic: Multimedia)

Summary: A novel method is presented for quantitatively measuring the diversity of an image set that represents a rich visual vocabulary. We use deep learning techniques to find correlation and disparity among given photos to facilitate their embedding. We define the diversity of an image set as the extent to which the presented contents differ from each other in three aspects (variety, balance, and disparity), based on the distance between image visual features, which equals the reciprocal of their similarity. In addition, we develop an application that selects the most diverse subset, which makes it convenient to get a global view of the set.

Author(s): Xingjia Pan, Institute of Automation, Chinese Academy of Sciences

Juntao Ye, Institute of Automation, Chinese Academy of Sciences

Weiming Dong, Institute of Automation, Chinese Academy of Sciences

Fan Tang, Institute of Automation, Chinese Academy of Sciences

Feiyue Huang, Youtu Lab, Tencent

Xiaopeng Zhang, Institute of Automation, Chinese Academy of Sciences

Speaker(s): Xingjia Pan, NLPR, Institute of Automation, Chinese Academy of Sciences
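
The summary above defines the distance between two images as the reciprocal of their feature similarity and mentions selecting a most-diverse subset. The sketch below illustrates only that idea on toy feature vectors. The choice of cosine similarity, the clamping constant `eps`, the mean pairwise distance as a disparity score, and the greedy farthest-point selection are all assumptions made for this example; the abstract does not specify the authors' features, formulas, or selection algorithm.

```python
import math


def cosine_similarity(a, b):
    """Cosine similarity of two non-zero feature vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def distance(a, b, eps=1e-6):
    """Distance as the reciprocal of similarity (clamped to avoid 1/0)."""
    return 1.0 / max(cosine_similarity(a, b), eps)


def mean_pairwise_distance(features):
    """A simple disparity score: average distance over all pairs."""
    n = len(features)
    pairs = [(i, j) for i in range(n) for j in range(i + 1, n)]
    return sum(distance(features[i], features[j]) for i, j in pairs) / len(pairs)


def greedy_diverse_subset(features, k):
    """Pick k indices, each time adding the item farthest from those chosen."""
    chosen = [0]  # arbitrary seed: start from the first image
    while len(chosen) < k:
        candidates = [i for i in range(len(features)) if i not in chosen]
        best = max(
            candidates,
            key=lambda i: min(distance(features[i], features[c]) for c in chosen),
        )
        chosen.append(best)
    return chosen


if __name__ == "__main__":
    toy_features = [[1.0, 0.0], [0.99, 0.1], [0.0, 1.0], [0.7, 0.7]]
    print(greedy_diverse_subset(toy_features, 2))
```

With non-negative features, as produced for instance after a ReLU layer, the similarity stays in [0, 1] and the reciprocal is well behaved apart from the clamped zero case.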

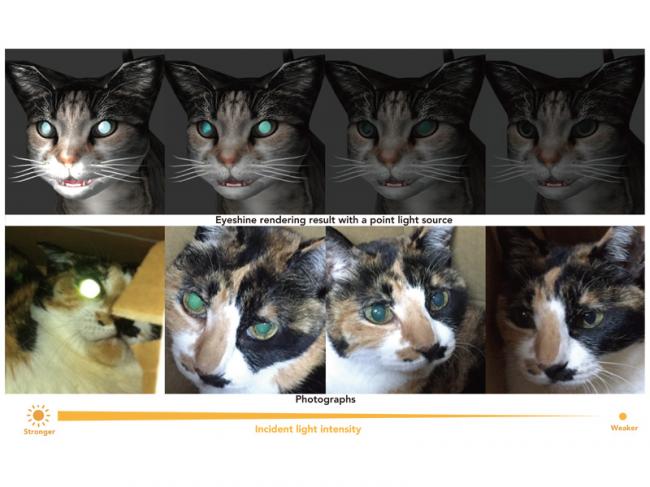

Eyeshine Rendering: A Real Time Rendering Method for Realistic Animal Eyes (Topic: Rendering)

Summary: In the eyeball of animals, there is a reflective layer called the tapetum lucidum underlying the retina, which reflects the light entering the eyeball. The tapetum lucidum is necessary for animals to see objects in the dark. Animals' eyes may look shiny in the dark because the tapetum lucidum reflects the light incident on the eyeballs. As the tapetum lucidum reflects light, the black eye of an animal may appear to emit light or to have a bright color. This visible effect of the tapetum lucidum is called "eyeshine"; it causes the pupil to appear to glow when a light is shone into the eye of an animal. Although there are many games in which animals appear, very few reproduce eyeshine faithfully. In this paper, we propose a real-time rendering method that considers tapetum lucidum reflection. We aim to establish a technique to express the eyeshine of animals more realistically.

Author(s): Yuna Omae, Tokyo Denki University

Tokiichiro Takahashi, Tokyo Denki University

Speaker(s): Yuna Omae, Tokiichiro Takahashi, Tokyo Denki University / Astrodesign Inc.

Importance Sampling Measured BRDFs Based on Second Order Spherical Moment (Topic: Rendering)

Summary: BRDF importance sampling, which generates sampled directions in a pattern that closely matches the BRDF, is a powerful tool for variance reduction in Monte Carlo rendering. Conventionally, it is challenging to design proper sampling patterns for measured BRDFs since no analytical expression is directly available. Although tabulation-based sampling strategies provide a feasible solution to this problem, they usually require high memory consumption to additionally store the importance functions. In this poster, we show that the second order spherical moment of a BRDF can be leveraged in deriving a robust sampling function for the BRDF. This sampling function has the analytical form of a GGX distribution, which resembles the shape of the raw BRDF measurement. Besides the efficient computation and compact storage brought by the GGX distribution, another benefit is the possibility of sampling according to the distribution of visible normals. This can further reduce the variance, especially for diffuse-like materials, and improve the convergence rate of the Monte Carlo numerical integration.

Author(s): Jie Guo, Nanjing University

Yanwen Guo, Nanjing University

Jingui Pan, Nanjing University

Speaker(s): Jie Guo, Nanjing University
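
The summary above relies on the fact that a GGX distribution can be sampled analytically. As a minimal sketch of that building block only, the code below draws microfacet normals from an isotropic GGX distribution by inverting its CDF and evaluates the matching density. It does not show how the poster fits the roughness from the second order spherical moment, nor visible-normal sampling; the roughness value `alpha` and all function names are assumptions of this example.

```python
import math
import random


def ggx_d(cos_theta, alpha):
    """Isotropic GGX normal distribution function D(h)."""
    a2 = alpha * alpha
    denom = cos_theta * cos_theta * (a2 - 1.0) + 1.0
    return a2 / (math.pi * denom * denom)


def ggx_pdf(cos_theta, alpha):
    """Solid-angle density of the sampled normal: D(h) * cos(theta_h)."""
    return ggx_d(cos_theta, alpha) * cos_theta


def sample_ggx_normal(alpha, u1, u2):
    """Map two uniform numbers in [0, 1) to a unit normal (z is up)."""
    a2 = alpha * alpha
    cos2 = (1.0 - u1) / (1.0 + (a2 - 1.0) * u1)  # inverted CDF in cos^2(theta)
    cos_t = math.sqrt(cos2)
    sin_t = math.sqrt(max(0.0, 1.0 - cos2))
    phi = 2.0 * math.pi * u2
    return (sin_t * math.cos(phi), sin_t * math.sin(phi), cos_t)


if __name__ == "__main__":
    rng = random.Random(0)
    alpha = 0.3  # hypothetical roughness
    for _ in range(3):
        h = sample_ggx_normal(alpha, rng.random(), rng.random())
        print(h, ggx_pdf(h[2], alpha))
```

In a renderer, the sampled normal would typically be used to reflect the outgoing direction, with the density converted to the incident-direction measure before weighting the sample.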

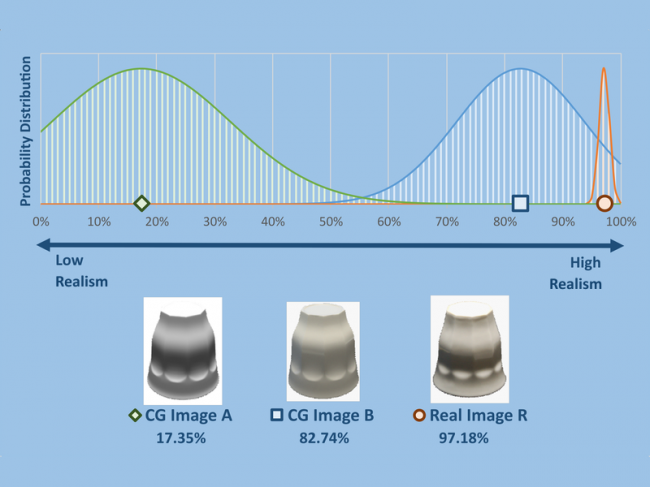

Method for Quantitative Evaluation of the Realism of CG Images Using Deep Learning (Topic: Rendering)

Summary: This paper proposes a method that enables quantitative evaluation of the degree of realism of CG images using the discriminators of deep neural networks.

Author(s): Masaaki Sato, Kwansei Gakuin University

Masataka Imura, Kwansei Gakuin University

Speaker(s): Masaaki Sato, Kwansei Gakuin University

Spatial Multisampling and Multipass Occlusion Testing for Screen Space Shadows (Topic: Rendering)

Summary: We present two enhancements for screen space shadowing. The first solution uses higher resolution depth buffers for multisampling. The second algorithm uses multipass sampling with variable step sizes.

Author(s): Stefan Seibert, Stuttgart Media University

Stefan Radicke, Stuttgart Media University

Speaker(s): Stefan Seibert, Stuttgart Media University, Germany

A Spatial User Interface Design Using Accordion Metaphor for VR Systems (Topic: Virtual Environments)

Summary: This study focuses on the design of a user interface that can be used in virtual reality applications for enhancing spatial experiences. We performed a type analysis of the various tasks that are used in social virtual reality services. Based on this analysis, we propose an accordion type of user interface suitable for application. We assume that this metaphorical accordion interface will not only provide spatial awareness in a virtual environment, but also give the user the freedom to move both hands.

Author(s): JoungHuem Kwon, Youngsan University

YoungEun Kim, Seoul Cyber University

SangHun Nam, Seoul Media Institute of Technology

Speaker(s): SangHun Nam, Seoul Media Institute of Technology

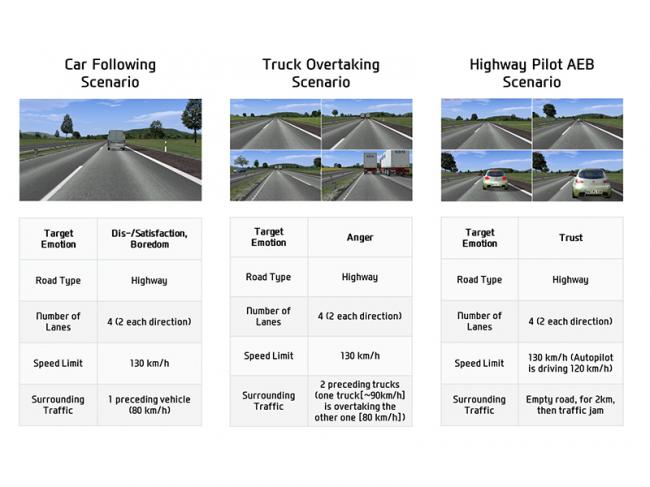

Emotion Induction in Virtual Environments: A Novel Paradigm Using Immersive Scenarios in Driving Simulator (Topic: Virtual Environments)

Summary: We propose a new emotion induction method based on immersive scenarios presented in virtual environments. The immersive scenarios presented realistic situations in a driving simulator such as following a car or overtaking a truck, and succeeded in consistently and effectively inducing a different array of emotions in the participants. Therefore, our system can be adapted to numerous different kinds of Human-Computer Interaction (HCI) scenarios where emotions play a significant role.

Author(s): Seunghyun Woo, Hyundai Motor Company

Dong-Seon Chang, Hyundai Motor Company

Daeyun An, Hyundai Motor Company

Christian Wallraven, Korea University

Speaker(s): Dong-Seon Chang, Hyundai Motor Company

Immersive VR Environment for Architectural Design Education (Topic: Virtual Environments)

Summary: We propose an integrated system to support architectural design education, which supports multi-user discussion and 3D model editing in VR. It also includes an auxiliary log visualization system to support synchronous learning by allowing users to view the log visualization of previous discussion events and go back to some periods of the class to start an alternative direction of design discussion.

Author(s): Chia-Hung Tsou, National Chiao Tung University, Taiwan

Ting-Wei Hsu, National Chiao Tung University, Taiwan

Chun-Heng Lin, National Chiao Tung University, Taiwan

Ming-Han Tsai, National Chiao Tung University, Taiwan

Pei-Hsien Hsu, National Chiao Tung University, Taiwan

I-Chen Lin, National Chiao Tung University, Taiwan

Yu-Shuen Wang, National Chiao Tung University, Taiwan

Wen-Chieh Lin, National Chiao Tung University, Taiwan

Jung-Hong Chuang, National Chiao Tung University, Taiwan

Speaker(s): Ming-Han Tsai, National Chiao Tung University

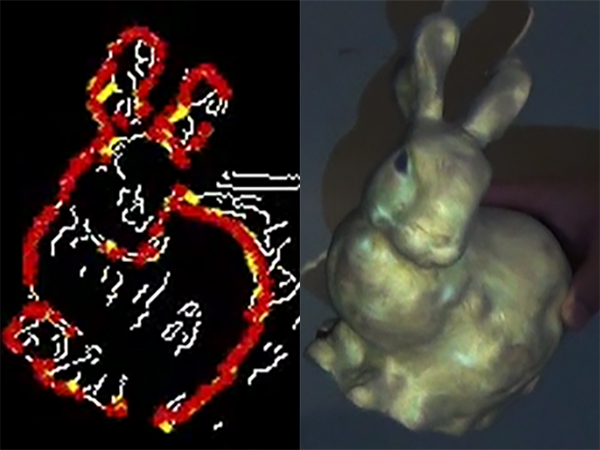

Marker-less Real-Time Tracking of Texture-less 3D objects from a Monocular Image (Topic: Virtual Environments)

Summary: We propose a method for estimating the 3D posture of a texture-less object for spatial augmented reality (SAR) by using a monocular image. In recent years, SAR using image projection technology has been attracting attention. SAR is expected to become one of the next-generation AR technologies, because the image projection technique realizes a virtual object by overwriting the appearance of a real object and achieves physical interaction with it. To overwrite the real object with a high degree of realism, real-time posture estimation of the projection target is quite important. Because the use of sensors for the posture estimation greatly affects the appearance of the target object, non-contact measurement using a camera is desirable. However, the projection target for SAR is often texture-less in order to achieve better image projection. In addition, if the target contains texture, it is cancelled by the projection. Therefore, it is not possible to use the image feature quantities that are often used in camera-based approaches. In this study, using the contour information of the projection target, we propose a method that enables estimation of the position and orientation of a texture-less object in real time by using a monocular image.

Author(s): Yuki Morikubo, The University of Electro-Communications, Japan

Naoki Hashimoto, The University of Electro-Communications, Japan

Speaker(s): Yuki Morikubo, The University of Electro-Communications

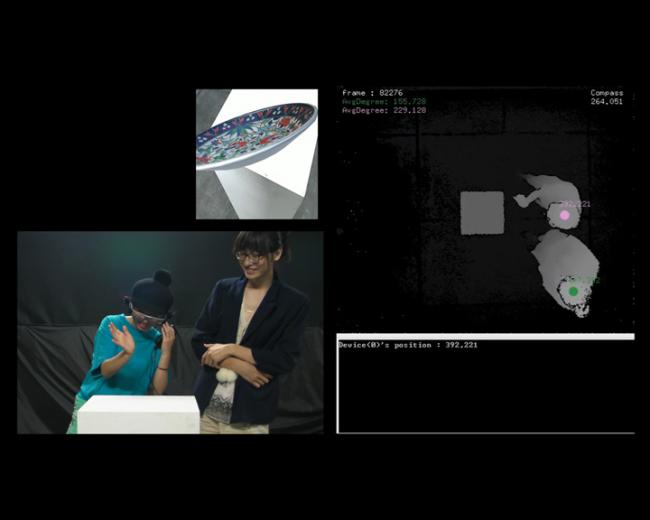

O-Displaying: An Orientation-Based Augmented Reality Display on a Smart Glass with a User Tracking from a Depth Camera (Topic: Virtual Environments)

Summary: An orientation-based (orientation sensors on a smart glass) AR smart glass displaying scheme is proposed, based on the 3D tracking capability from a Kinect to precisely display visual contents.

Author(s): Yi-Shan Lan, Taipei National University of the Arts

Shih-Wei Sun, Taipei National University of the Arts

Kai-Lung Hua, National Taiwan University of Science and Technology

Wen-Huang Cheng, Academia Sinica

Speaker(s): Yi-Shan Lan, Taipei National University of the Arts

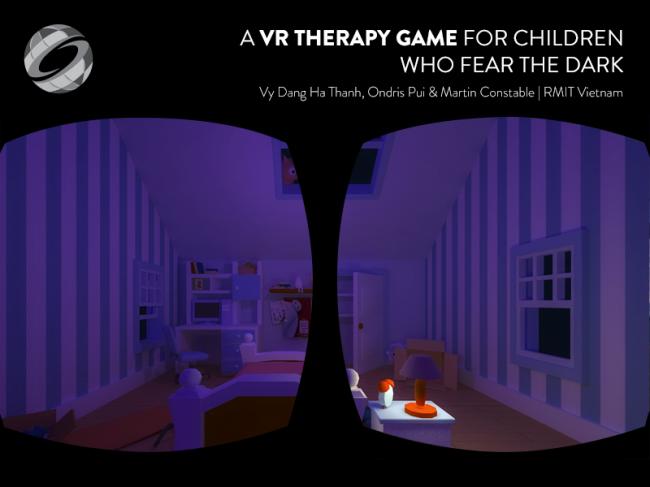

Room VR - A VR Therapy Game For Children Who Fear The Dark (Topic: Virtual Environments)

Summary: Room VR is a virtual reality exposure therapy that can be used to treat rational and non-rational bedtime fears of children in a more accessible and affordable way by using a smartphone and the Google Cardboard platform.

Author(s): Vy Dang, RMIT University

Ondris Pui, RMIT University

Martin Constable, RMIT University

Speaker(s): Vy Dang Ha Thanh, School of Communication & Design, RMIT Vietnam, 702 Nguyen Van Linh, Ho Chi Minh, Vietnam

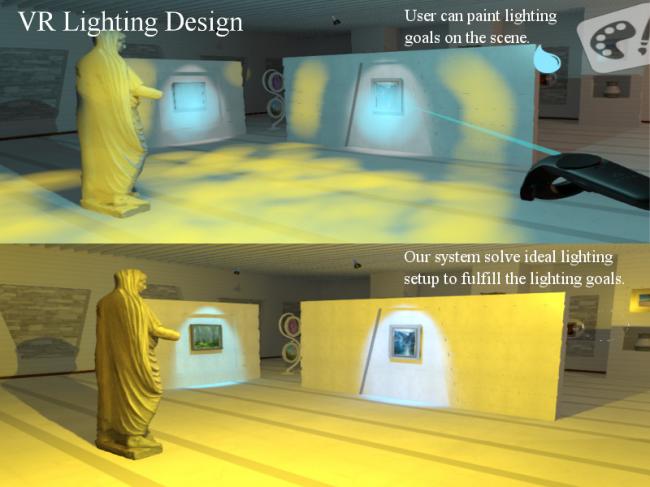

VR Lighting Design (Topic: Virtual Environments)

Summary: Lighting design is a crucial process in video games, animation, interior design, and some other fields. Current lighting design systems support only the direct lighting paradigm, which requires fine-tuning each light's parameters to achieve a global lighting effect. In this study, we present a hybrid lighting design interface that includes direct, indirect, and inverse paradigms in a VR environment to cope with various design tasks and scenarios. The system provides designers with an immersive, intuitive, and effective way of doing lighting design.

Author(s): Chi-Yang Lee, National Chiao Tung University, Taiwan

Hsuan-Ming Chang, National Chiao Tung University, Taiwan

Chun-Heng Lin, National Chiao Tung University, Taiwan

Ming-Han Tsai, National Chiao Tung University, Taiwan

Wen-Chieh Lin, National Chiao Tung University, Taiwan

Pei-Hsien Hsu, National Chiao Tung University, Taiwan

I-Chen Lin, National Chiao Tung University, Taiwan

Yu-Shuen Wang, National Chiao Tung University, Taiwan

Jung-Hong Chuang, National Chiao Tung University, Taiwan

Speaker(s): Ming-Han Tsai, National Chiao Tung University

Data Jalebi Bot (Topic: Visualization)

Summary: An exploration into the consumption of data led to data visualization using edible materials to create data sculptures that are quite literally consumable. The data, a quick summary of a professional profile, is provided by an individual to custom software. Based on this data, the software generates a visualization of their profile, which is finally rendered as a Jalebi (pronounced juh-lay-bee), a sweet popular in India that is created by a process resembling food printing.

Author(s): Gaurav Patekar, Imaginea Design Labs, Hyderabad, India

Karan Dudeja, Globant India Pvt. Ltd.

Speaker(s): Karan Dudeja, Gaurav Patekar, Globant India Pvt. Ltd., Imaginea Design

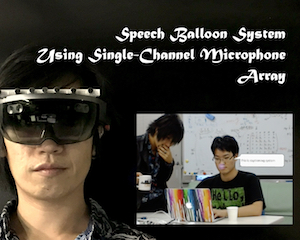

Speech Balloon System Using Single-Channel Microphone Array on See-Through Head-Mounted Display (Topic: Visualization)

Summary: We developed a portable speech balloon support system consisting of a see-through HMD, a microphone array, and a speech recognition system. The aim of this work is to visualize conversation information for people with hearing impairments. Through experiments, the speech recognition rate was evaluated in a reverberant environment. The results confirmed that the proposed method for emphasizing a target signal improves the speech recognition rate under actual environmental noise.

Author(s): Keiichi ZEMPO, University of Tsukuba

Tomoki Kurahashi, University of Tsukuba

Koichi Mizutani, University of Tsukuba

Naoto Wakatsuki, University of Tsukuba

Speaker(s): Keiichi Zempo, University of Tsukuba

Water apart: A Substantial Display based on Material property of Water (Topic: Visualization)

Summary: A substantial display based on water droplets that uses touch sensing technology to transform the material properties, shape, volume, and position of a water droplet into sound.

Author(s): Yasuhito Hashiba, University of Tsukuba