siggraph

VR and AR for Healthcare

Full Conference Pass

Full Conference 1-Day Pass

Basic Conference Pass

Experience Pass

Exhibitor Pass

Date/Time: 28 November 2017, 09:00am - 10:45am

Venue: MR 212&213

Location: Bangkok Int'l Trade & Exhibition Centre (BITEC)

VR and AR for Immersive Health Care

Summary: VR and AR offer unique capabilities for health-related research and treatment, not only because they allow interactive, multisensory, immersive environments tailored to a patient's needs, but also because they allow clinicians to control, document, and measure patient responses. A notable example is "Bravemind", a VR exposure therapy tool used to assess and treat posttraumatic stress disorder (PTSD). VR and AR can be combined with virtual human technology to create powerful experiences. Virtual humans, autonomous agents who interact with real humans both verbally and non-verbally, can benefit the medical field in numerous areas, including training and education. In this session, examples will be discussed in terms of their design, development and effectiveness.

Speaker(s): Arno Hartholt, University of Southern California

Arno Hartholt is the project leader of the Integrated Virtual Humans group and of the central ICT Art Group. As such, he bears responsibility for much of the technology, art, processes and procedures related to virtual humans. These include the integrated research prototype SASO, the mixed-reality Gunslinger project, and the Virtual Human Toolkit, which is freely available to the research community. He is also involved in a variety of other projects, including SimCoach, INOTS/ELITE, NSF Museum Twins, RobotCoach, Bravemind and Strive.

Hartholt studied computer science at the University of Twente in the Netherlands where he earned both bachelor's and master's degrees. He was one of a select few to receive a prestigious Fortis IT Student scholarship. He first came into contact with ICT in 2003 as part of his computer science internship program, resulting in a paper presented at the Workshop on Affective Dialogue Systems in Germany, 2004. After graduating, Hartholt worked as a project coordinator at the quickly expanding Sqills IT Revolutions. There, he led a team of designers and programmers, developing an online self-service ticketing system for the Dutch and Belgian International Railway Companies. Hartholt returned to a life of science when accepting a position at ICT in late 2005. As one of the main integration software engineers within the virtual humans project, Hartholt developed a variety of technologies, with a focus on task modeling, natural language processing and knowledge representation.

Virtual Adult Experiences for the Physically Disabled

Summary: Explore the surprising ways adult VR experiences can have therapeutic effects for the physically and mentally disabled. Examine how to provide different control schemes and room-scale experiences tailored to various demographics. Additionally, we'll discuss the future of adult VR experiences and what that means for developers and users alike.

Speaker(s): Nick Dodge, VReleased

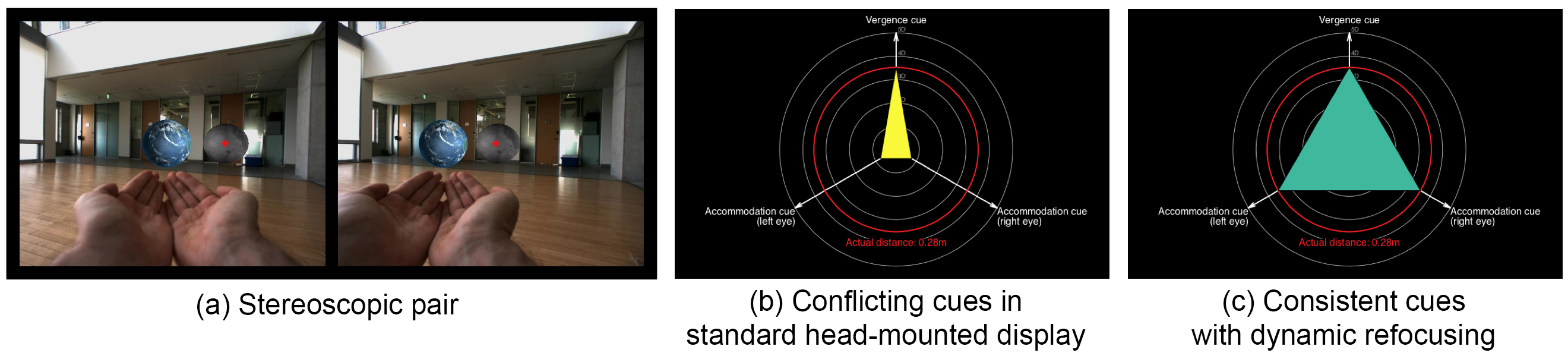

Correcting Focus for Virtual Reality

Summary: All commercial VR headsets available today are fundamentally flawed: they trigger sensory conflicts which lead to severe visual discomfort. By design, they rely on a stereoscopic near-eye display which presents a distinct image to the left eye and the right eye, inducing vergence eye movements which provide a sense of depth to the user. However, they fail to generate accommodation cues consistent with the vergence. Vision science studies suggest that the resulting vergence-accommodation conflict is directly responsible for some of the side effects of VR, such as visual fatigue, headaches, and loss of depth perception. In this talk, we review challenges in solving the vergence-accommodation conflict and correcting refractive errors in head-mounted displays. We describe the key components required for a working solution, and focus on varifocal approaches which dynamically adjust the plane of focus inside the head-mounted display to trigger accommodation. We share preliminary study results which evaluate the influence of varifocal approaches on generating natural accommodation responses, reducing visual discomfort, and improving depth perception. The ability to use a VR headset for extended periods with reduced visual fatigue opens up new applications beyond gaming and entertainment, such as education and training, especially in healthcare and medicine. These applications include virtual surgical planning and training, post-traumatic rehabilitation, meditation and pain management.
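To make the vergence-accommodation conflict described above more concrete, the following is a minimal numerical sketch, not the speakers' method. It assumes a 63 mm interpupillary distance and a headset whose optics focus at a fixed 2 m; both values, and all function names, are illustrative assumptions that do not come from the talk. The mismatch is expressed in diopters (the reciprocal of distance in metres), and the varifocal case is idealised as a focal plane that exactly follows the fixated object.

```python
import math


def vergence_angle_deg(ipd_m: float, distance_m: float) -> float:
    """Angle between the two lines of sight when fixating a point straight ahead."""
    return math.degrees(2.0 * math.atan((ipd_m / 2.0) / distance_m))


def accommodation_demand_diopters(distance_m: float) -> float:
    """Focusing demand, in diopters, for an object at the given distance."""
    return 1.0 / distance_m


def va_conflict_diopters(focal_plane_m: float, virtual_distance_m: float) -> float:
    """Mismatch between where the eyes converge and where the optics let them focus."""
    return abs(
        accommodation_demand_diopters(virtual_distance_m)
        - accommodation_demand_diopters(focal_plane_m)
    )


# Illustrative assumptions, not values from the talk.
IPD_M = 0.063
FIXED_FOCAL_PLANE_M = 2.0

if __name__ == "__main__":
    for distance_m in (0.25, 0.5, 1.0, 2.0):
        angle = vergence_angle_deg(IPD_M, distance_m)
        fixed = va_conflict_diopters(FIXED_FOCAL_PLANE_M, distance_m)
        # Idealised varifocal display: the focal plane tracks the fixated object.
        varifocal = va_conflict_diopters(distance_m, distance_m)
        print(
            f"object at {distance_m:.2f} m: vergence {angle:.2f} deg, "
            f"fixed-focus conflict {fixed:.2f} D, varifocal conflict {varifocal:.2f} D"
        )
```

Under these assumptions the conflict grows as virtual objects come closer to the viewer with a fixed focal plane, while the idealised varifocal case keeps it at zero.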

Speaker(s): Pierre-Yves Laffont, Lemnis Technologies

Pierre-Yves Laffont is the CEO and co-founder of Lemnis Technologies, a deep tech startup based in Singapore. He holds a PhD from INRIA, was a postdoctoral researcher at Brown University and ETH Zurich, and visited MIT, UC Berkeley, KAIST and NTU. His core research expertise spans the fields of Computer Vision and Computer Graphics. His recent work focuses on computational approaches to address unsolved challenges in ophthalmic optics and human vision.

Ali Hasnain, Lemnis Technologies

Ali Hasnain is the COO and co-founder of Lemnis Technologies. He completed his PhD and postdoc in optical bioimaging at the National University of Singapore. He developed an optical imaging technique for diagnosing neurodegenerative diseases, for which he won a thesis award. He has worked on various multidisciplinary projects in industry and academia, including robotics, telemedicine and non-invasive imaging techniques for biological tissues. His primary areas of expertise are optics, imaging, medical devices and robotics.