SIGGRAPH

Multi-View 3D

Registration Categories: Full Conference Pass, Full Conference 1-Day Pass

Date/Time: 30 November 2017, 02:15pm - 04:00pm

Venue: Amber 3

Location: Bangkok Int'l Trade & Exhibition Centre (BITEC)

Session Chair: Johannes Kopf, Facebook Research, USA

Casual 3D Photography

Summary: We reconstruct 3D photos from casually captured cell phone or DSLR images. The result is a panoramic, textured, normal-mapped, multi-layered geometric mesh representation that can be viewed with full binocular and motion parallax in VR, as well as on a regular mobile device or in a web browser.

Author(s): Peter Hedman, UCL

Rick Szeliski, Facebook

Suhib Alsisan, Facebook

Johannes Kopf, Facebook

Speaker(s): Peter Hedman, University College London

Soft 3D Reconstruction for View Synthesis

Summary: We present a novel algorithm for view synthesis that uses a soft 3D reconstruction to improve quality, continuity, and robustness. Our representation provides a soft, local, and filterable model of scene geometry for view synthesis, preserves depth uncertainty from 3D reconstruction, and iteratively improves depth estimation via soft visibility functions.

Author(s): Eric Penner, Google

Li Zhang, Google Inc.

Speaker(s): Eric Penner, Google Inc.

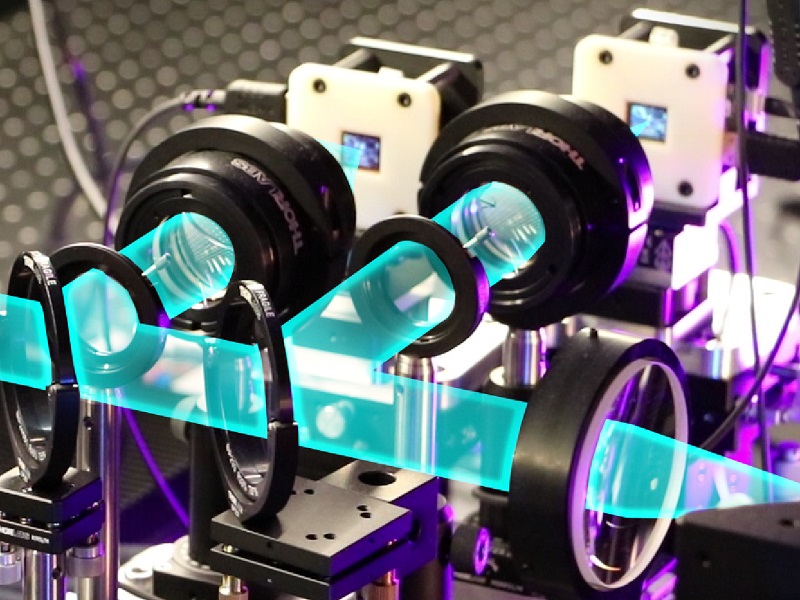

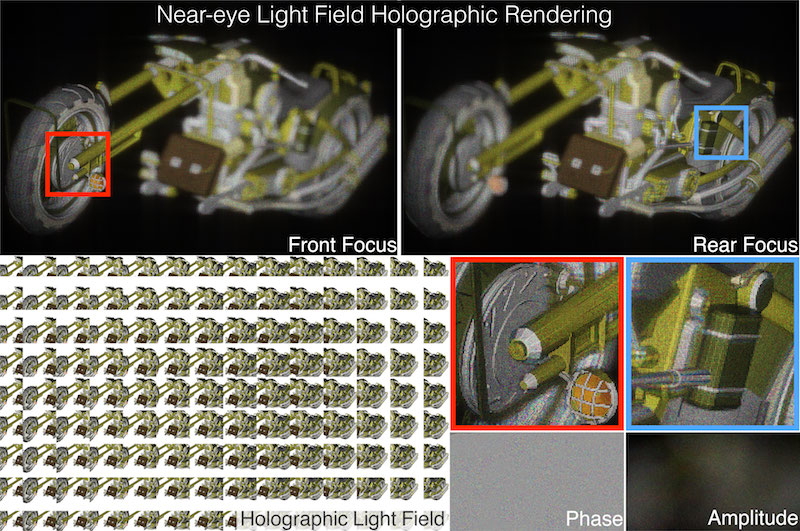

Near-eye Light Field Holographic Rendering with Spherical Waves for Wide Field of View Interactive 3D Computer Graphics

Summary: We present a light-field-based computer-generated holography (CGH) rendering pipeline that reproduces high-definition 3D scenes at interactive rates (4 fps) with continuous depth and supports intra-pupil, view-dependent occlusion. Our rendering accurately accounts for diffraction and supports various types of reference illumination for the hologram.

Author(s): Liang Shi, NVIDIA Research, MIT CSAIL

Fu-Chung Huang, NVIDIA Research

Ward Lopes, NVIDIA Research

Wojciech Matusik, MIT CSAIL

David Luebke, NVIDIA Research

Speaker(s): Liang Shi, NVIDIA and MIT CSAIL

Fast Gaze-Contingent Optimal Decompositions for Multifocal Displays

Summary: We present an optimal scene decomposition method for multifocal displays that is amenable to real-time applications. We incorporate eye tracking into an efficient image-based deformation approach that maintains display-plane alignment. We also build the first binocular multifocal testbed with integrated eye tracking and accommodation measurement.

Author(s): Olivier Mercier, Oculus Research, University of Montreal

Yusufu Sulai, Oculus Research

Kevin MacKenzie, Oculus Research

Marina Zannoli, Oculus Research

James Hillis, Oculus Research

Derek Nowrouzezahrai, McGill University

Douglas Lanman, Oculus Research

Speaker(s): Olivier Mercier, University of Montreal and Oculus Research