siggraph

AR / VR

Full Conference Pass

Full Conference 1-Day Pass

Date/Time: 29 November 2017, 04:15pm - 06:00pm

Venue: Amber 3

Location: Bangkok Int'l Trade & Exhibition Centre (BITEC)

Session Chair: Xin Tong, Microsoft Research Asia, China

SpinVR: Towards Live-Streaming 3D Virtual Reality Video

Summary: We present Vortex, an architecture for live-streaming 3D virtual reality video. Vortex uses two fast line sensors combined with wide-angle lenses, spinning at up to 300 rpm, to directly capture stereoscopic 360-degree virtual reality video in the widely used omni-directional stereo (ODS) format.

Author(s): Donald Dansereau, Stanford University

Robert Konrad, Stanford University

Aniq Masood, Stanford University

Gordon Wetzstein, Stanford University

Speaker(s): Robert Konrad; Donald Dansereau, Stanford University

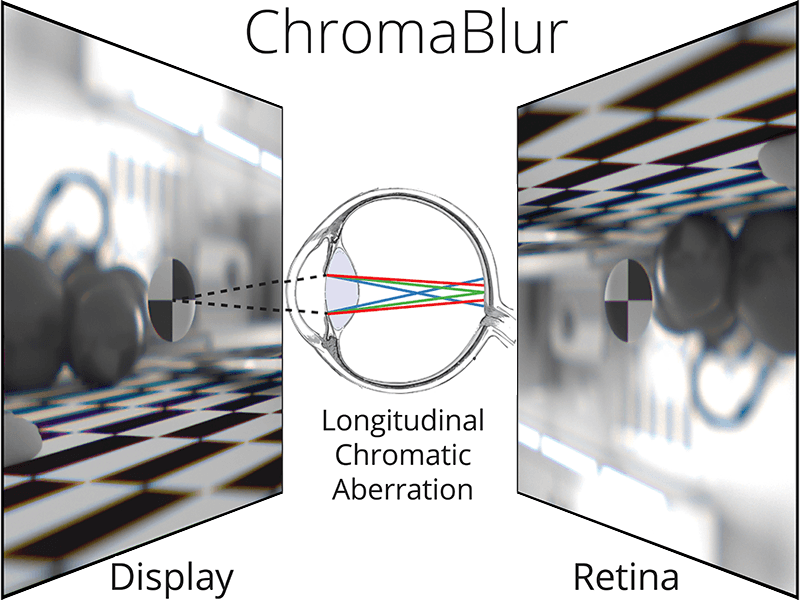

ChromaBlur: Rendering Chromatic Eye Aberration Improves Accommodation and Realism

Summary: We present a method to reproduce realistic depth-dependent blur. The human eye has chromatic aberration, which produces depth-dependent chromatic effects. We calculate rendered images that, when processed by the viewer's in-focus eye, will produce the correct chromatic effects on the viewer's retina for the simulated 3D scene.

Author(s): Steven Cholewiak, University of California, Berkeley

Gordon Love, Durham University

Pratul Srinivasan, University of California, Berkeley

Ren Ng, University of California, Berkeley

Marty Banks, University of California, Berkeley

Speaker(s): Steven A. Cholewiak, University of California, Berkeley

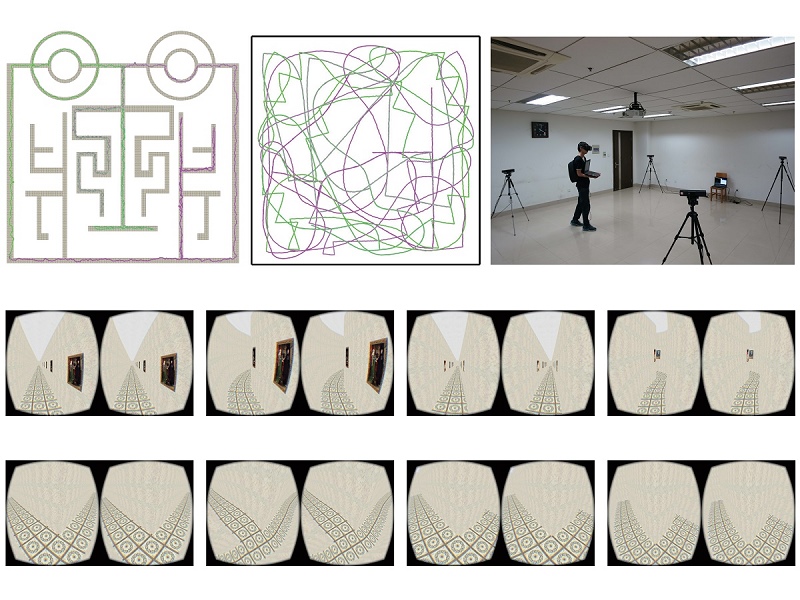

Smooth Assembled Mappings for Large-Scale Real Walking

Summary: Virtual reality applications prefer real walking over other locomotion methods because it provides a more immersive sense of presence. In this paper, we present a novel divide-and-conquer method, called smooth assembly mapping (SAM), to compute real walking mappings with low isometric distortion for large-scale virtual scenes.

Author(s): Zhichao Dong, University of Science and Technology of China

Xiaoming Fu, University of Science and Technology of China

Chi Zhang, University of Science and Technology of China

Kang Wu, University of Science and Technology of China

Ligang Liu, University of Science and Technology of China

Speaker(s): Zhi-Chao Dong, University of Science and Technology of China

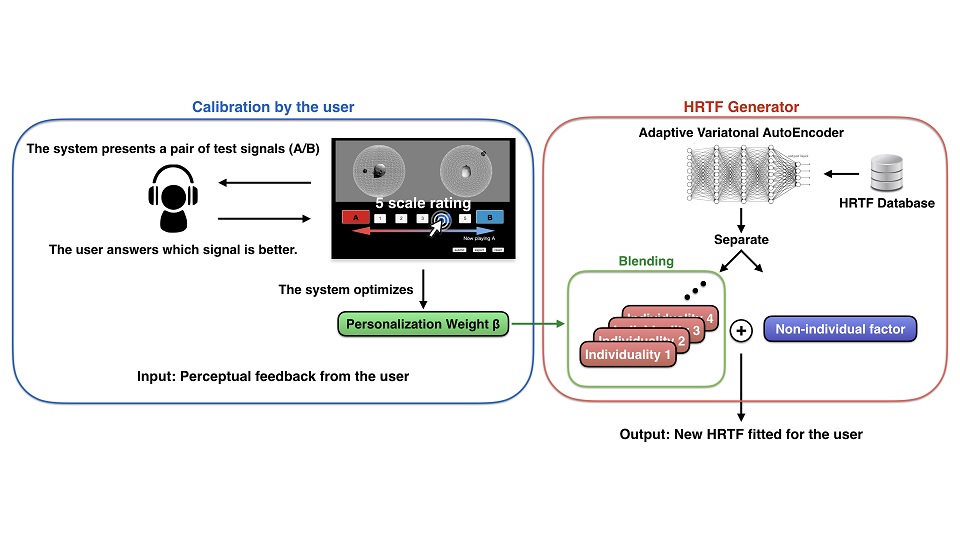

Fully Perceptual-Based 3D Spatial Sound Individualization with an Adaptive Variational AutoEncoder

Summary: We present a fully perceptual-based method for fitting head-related transfer functions (HRTFs, used for 3D audio spatialization) to individual users with an adaptive variational autoencoder. The user only needs to answer pairwise comparisons of test signals presented by the system. This reduces the effort necessary to obtain individualized HRTFs.

Author(s): Kazuhiko Yamamoto, YAMAHA, The University of Tokyo

Takeo Igarashi, The University of Tokyo

Speaker(s): Kazuhiko Yamamoto, The University of Tokyo