siggraph

Avatars and Faces

Full Conference Pass

Full Conference 1-Day Pass

Date/Time: 29 November 2017, 9:00am - 10:45am

Venue: Amber 3

Location: Bangkok Int'l Trade & Exhibition Centre (BITEC)

Session Chair: Xin Tong, Microsoft Research Asia, China

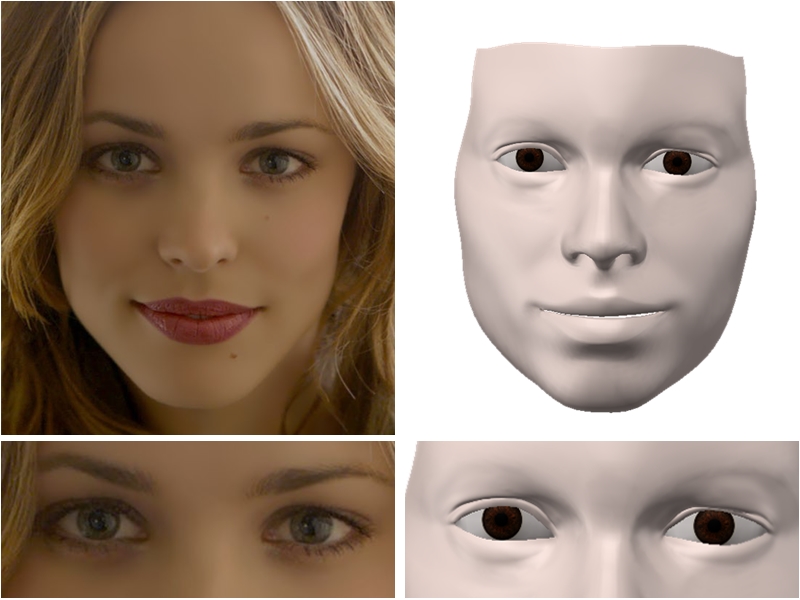

Real-time 3D Eyelids Tracking from Semantic Edges

Summary: In this paper, we propose a technique that reconstructs the 3D shapes and motions of eyelids in real time. Combined with the tracked face and eyeballs, our system generates full-face results with more detailed eye regions. Our technique is applicable to different ethnicities, eyelid shapes, and motions.

Author(s): Quan Wen, Software School, Tsinghua University

Feng Xu, Software School, Tsinghua University

Ming Lu, Tsinghua University

Jun-Hai Yong, Software School, Tsinghua University

Speaker(s): Quan Wen, Tsinghua University

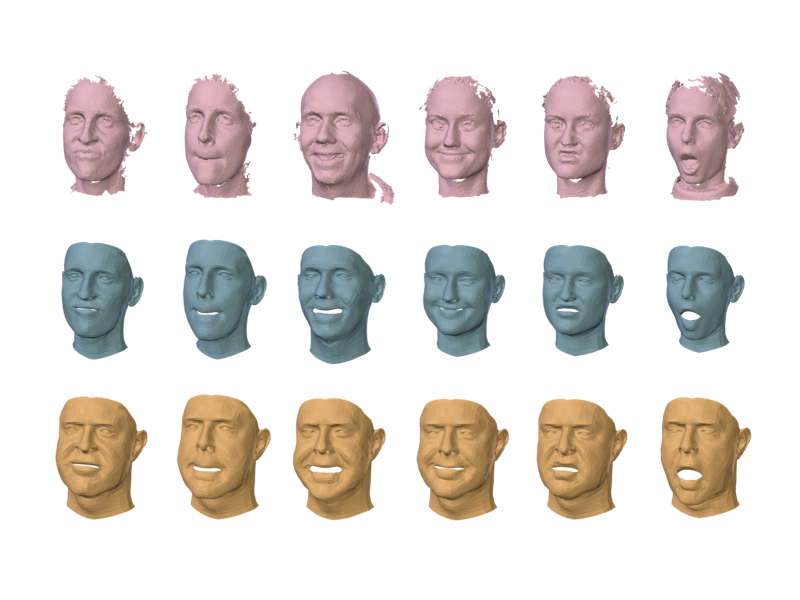

Learning a Model of Facial Shape and Expression from 4D scans

Summary: We learn a model of head shape, pose, and expression that is compact, easy to fit to data, and much more expressive, realistic, and general than existing models of the same complexity. We train the model on thousands of people and 4D sequences and make it available for research.

Author(s): Tianye Li, Max Planck Institute for Intelligent Systems, University of Southern California

Timo Bolkart, Max Planck Institute for Intelligent Systems

Michael J. Black, Max Planck Institute for Intelligent Systems

Hao Li, Pinscreen, USC Institute for Creative Technologies, University of Southern California

Javier Romero, Max Planck Institute for Intelligent Systems, Body Labs

Speaker(s): Tianye Li, University of Southern California and Max Planck Institute for Intelligent Systems

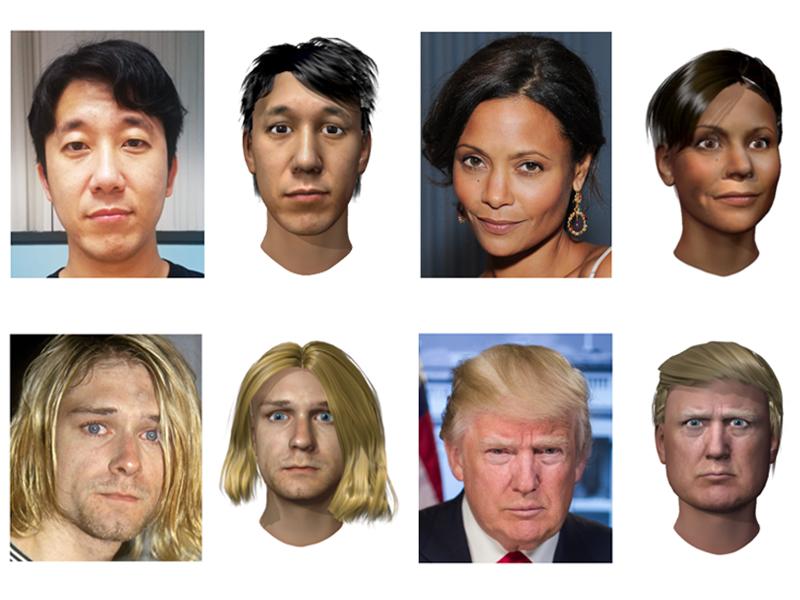

Avatar Digitization From a Single Image For Real-Time Rendering

Summary: We present a fully automatic framework that digitizes a complete 3D head with hair from a single unconstrained image. While the generated face is a high-quality textured mesh, we propose a versatile and efficient polygonal strips (polystrips) representation for the hair, compatible with existing real-time game engines.

Author(s): Liwen Hu, Pinscreen, University of Southern California

Shunsuke Saito, Pinscreen, University of Southern California

Lingyu Wei, University of Southern California, Pinscreen

Koki Nagano, Pinscreen

Jens Fursund, Pinscreen

Iman Sadeghi, Pinscreen

Jaewoo Seo, Pinscreen

Yen-Chun Chen, Pinscreen

Hao Li, USC Institute for Creative Technologies, Pinscreen, University of Southern California

Speaker(s): Liwen Hu, Pinscreen, University of Southern California

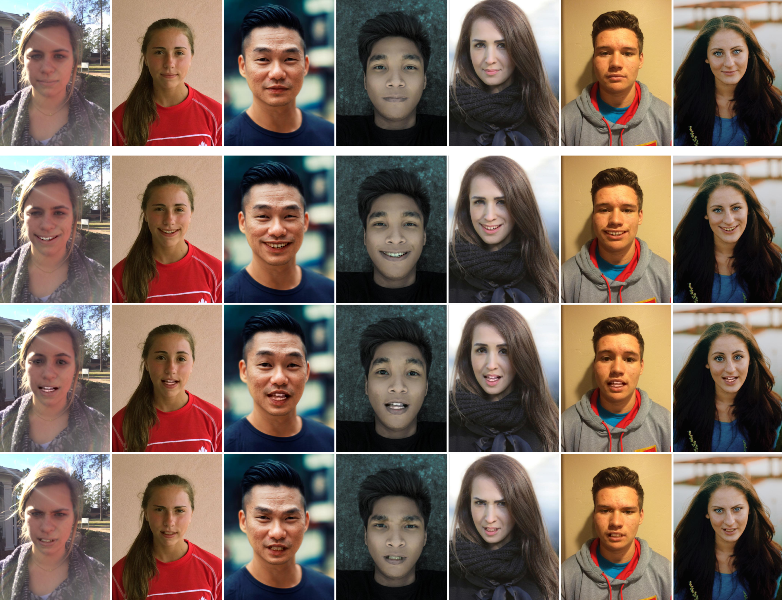

Bringing Portraits to Life

Summary: We present a technique to automatically animate a still portrait, making it possible for the subject in the photo to come to life and express various emotions.

Author(s): Hadar Averbuch-Elor, Tel Aviv University

Daniel Cohen-Or, Tel Aviv University

Johannes Kopf, Facebook

Michael Cohen, Facebook

Speaker(s): Hadar Averbuch-Elor, Tel Aviv University