siggraph

HDR and Image Manipulation

Full Conference Pass

Full Conference 1-Day Pass

Date/Time: 28 November 2017, 02:15pm - 04:00pm

Venue: Amber 3

Location: Bangkok Int'l Trade & Exhibition Centre (BITEC)

Session Chair: Min H. Kim, Korea Advanced Institute of Science and Technology (KAIST), South Korea

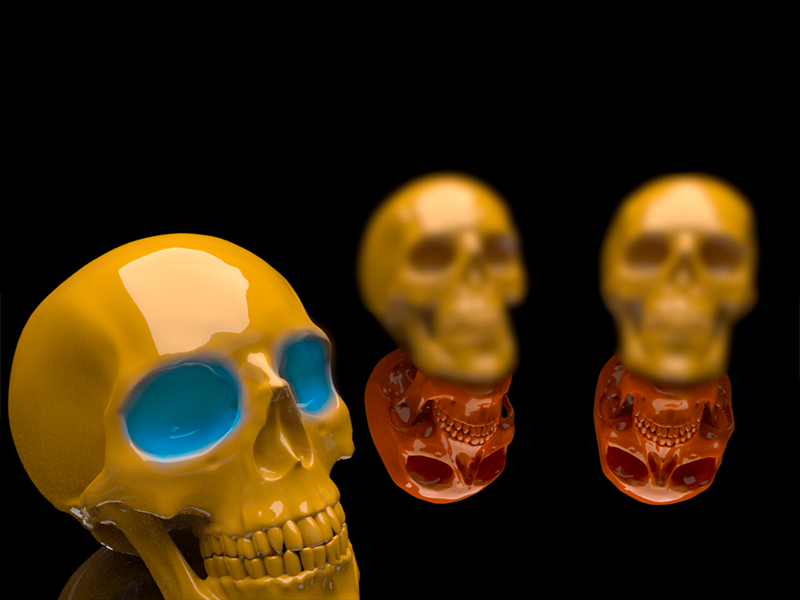

Learning to Predict Indoor Illumination from a Single Image

Summary: We propose to automatically infer omnidirectional, high dynamic range illumination from a single, limited field-of-view, low dynamic range photograph of an indoor scene. We train a deep neural network that significantly outperforms previous methods. The resulting environment maps are directly used for rendering photo-realistic objects into images.

Author(s): Marc-André Gardner, Université Laval

Kalyan Sunkavalli, Adobe Research

Ersin Yumer, Adobe Research

Xiaohui Shen, Adobe Research

Emiliano Gambaretto, Adobe

Christian Gagné, Université Laval

Jean-François Lalonde, Université Laval

Speaker(s): Marc-André Gardner, Université Laval

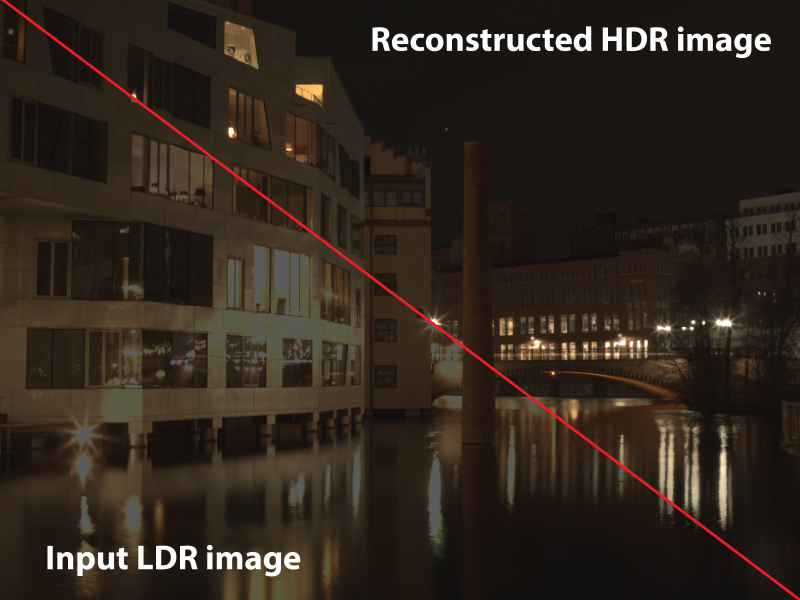

Deep Reverse Tone Mapping

Summary: We propose the first deep-learning-based approach for inferring a high dynamic range (HDR) image from a single low dynamic range (LDR) input.

Author(s): Yuki Endo, University of Tsukuba

Yoshihiro Kanamori, University of Tsukuba

Jun Mitani, University of Tsukuba

Speaker(s): Yuki Endo; Yoshihiro Kanamori, University of Tsukuba

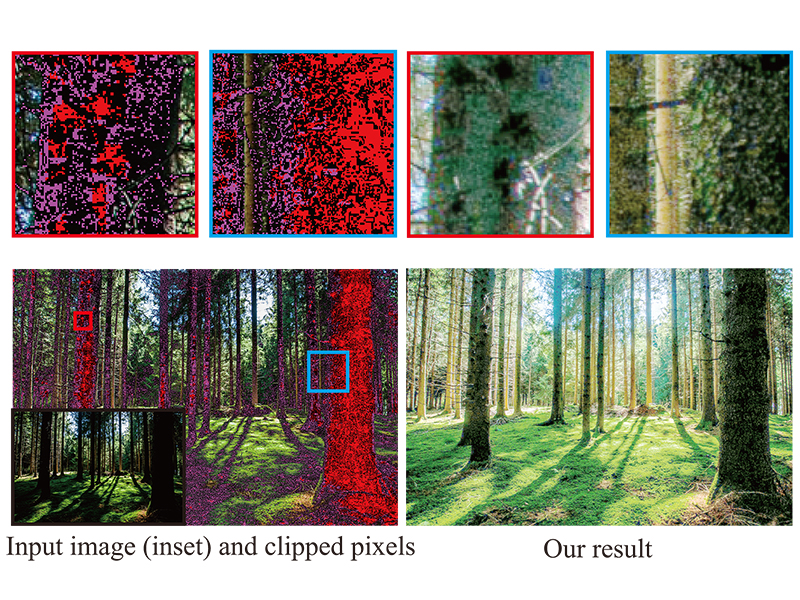

HDR Image Reconstruction from a Single Exposure using Deep CNNs

Summary: We propose a deep learning HDR reconstruction method that recovers saturated image regions in single-exposure LDR images using a hybrid dynamic range autoencoder. The method can predict visually convincing high-resolution HDR images in a wide range of situations and from a wide range of cameras.

Author(s): Gabriel Eilertsen, Linköping University

Joel Kronander, Linköping University

Gyuri Denes, University of Cambridge

Rafal Mantiuk, University of Cambridge

Jonas Unger, Linköping University

Speaker(s): Gabriel Eilertsen, Linköping University, Sweden

Transferring Image-based Edits for Multi-Channel Compositing

Summary: Image-based edits are commonly applied to multi-channel renderings of 3D scenes. Unfortunately, such edits cannot be easily reused for global variations of the original scene. We propose a method to automatically transfer such user edits across variations of object geometry, illumination, and viewpoint.

Author(s): James Hennessey, University College London

Wilmot Li, Adobe Research

Bryan Russell, Adobe Research

Eli Shechtman, Adobe Research

Niloy Mitra, University College London

Speaker(s): James Hennessey, University College London

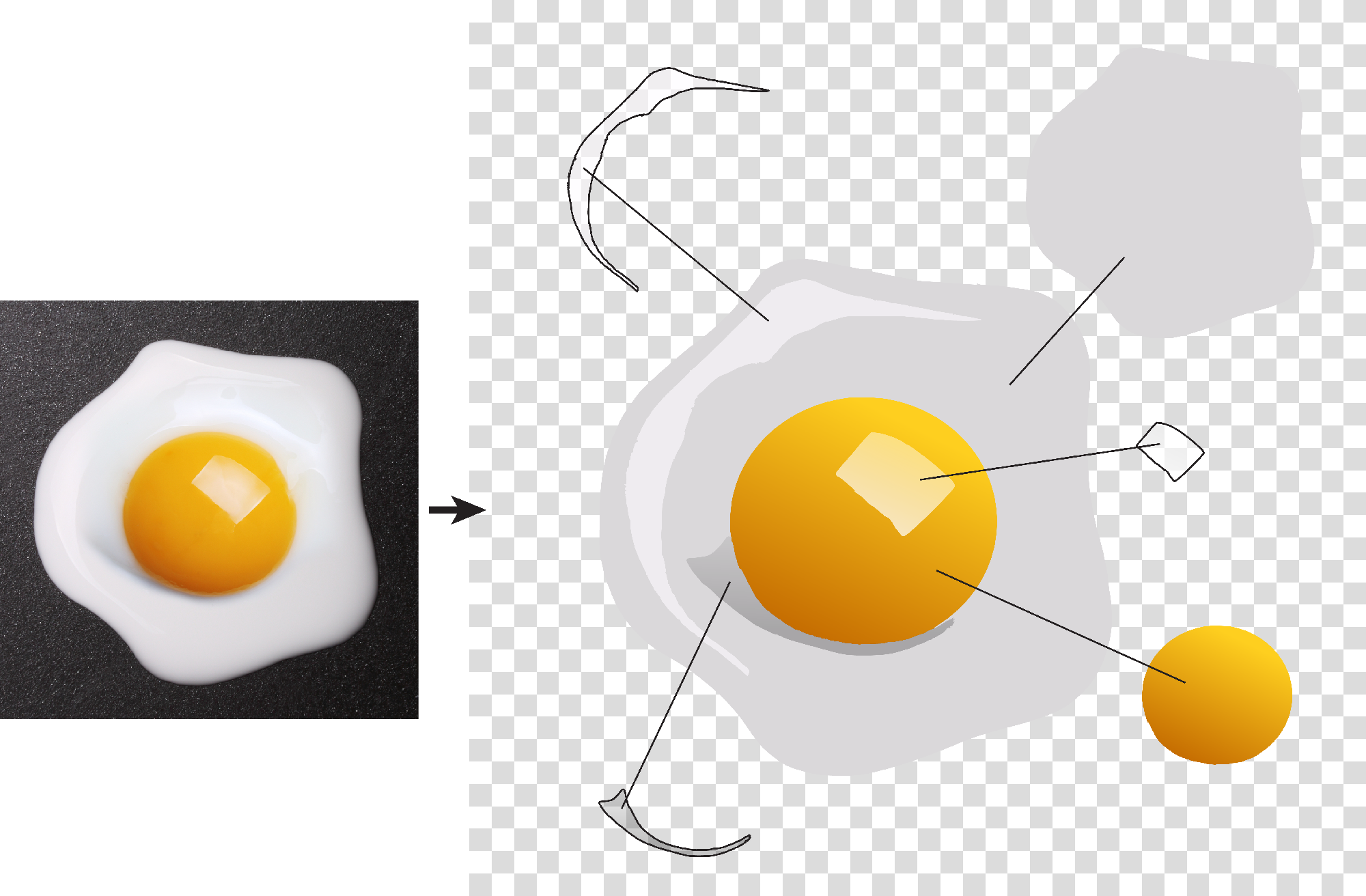

Photo2ClipArt: Image Abstraction and Vectorization Using Layered Linear Gradients

Summary: We present a method to create vector clip art from photographs. The method decomposes a bitmap photograph into a stack of layers, each layer containing a vector path filled with a linear color gradient. The resulting decomposition is easy to edit and reproduces the clean, simplified look of clip art.

Author(s): Jean Dominique Favreau, Inria, Université Côte d'Azur

Florent Lafarge, Inria, Université Côte d'Azur

Adrien Bousseau, Inria, Université Côte d'Azur

Speaker(s): Jean Dominique Favreau, Inria, Université Côte d'Azur