siggraph

Images and Displays

Full Conference Pass

Full Conference 1-Day Pass

Date/Time: 28 November 2017, 09:00am - 10:45am

Venue: Amber 1

Location: Bangkok Int'l Trade & Exhibition Centre (BITEC)

Session Chair: Min H. Kim, Korea Advanced Institute of Science and Technology (KAIST), South Korea

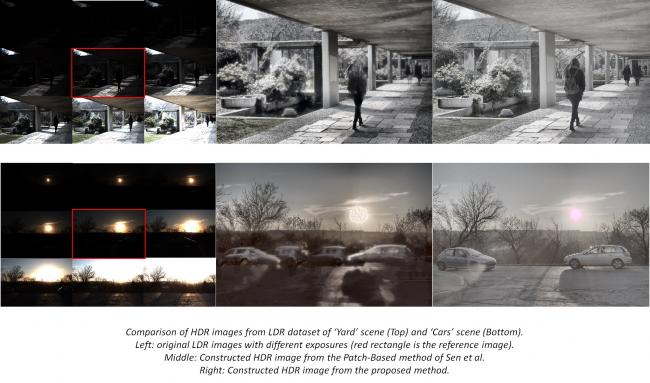

Removal of Ghosting Artefacts in HDRI using Intensity Scaling Cue

Summary: Motion artefacts (ghosting) can appear in HDR images when objects move between the input LDR exposures. This work aligns all LDR images with a reference image, which removes ghost artefacts from the HDR image without explicitly detecting moving regions in the LDR images. The intensity scaling factor between the reference image and each target image is estimated using only non-moving, non-saturated pixels. This factor is then used as a cue to identify moving pixels in the target image. Finally, the values of the moving pixels in the target image are replaced with their expected values. The method is more accurate than existing methods while remaining competitive in computation speed.
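As a rough illustration of the intensity-scaling cue, the sketch below estimates one global scale factor between a reference exposure and a target exposure, then replaces target pixels that disagree with it. This is a minimal sketch under stated assumptions, not the authors' implementation. Images are flat lists of intensities in [0, 1]. "Non-saturated" means strictly between the hypothetical thresholds `low` and `high`. The unknown set of non-moving pixels is approximated by taking the median of per-pixel ratios, and the tolerance `tol` is an arbitrary choice. The function names `estimate_scale` and `remove_ghosts` are hypothetical.

```python
def estimate_scale(ref, tgt, low=0.05, high=0.95):
    """Estimate the target/reference intensity ratio from well-exposed pixel pairs.

    Assumption: the median of per-pixel ratios stands in for 'non-moving' pixels;
    it stays robust as long as moving pixels are a minority.
    """
    ratios = sorted(t / r for r, t in zip(ref, tgt)
                    if low < r < high and low < t < high)
    if not ratios:
        raise ValueError("no well-exposed pixel pairs to estimate the scale from")
    mid = len(ratios) // 2
    if len(ratios) % 2:
        return ratios[mid]
    return 0.5 * (ratios[mid - 1] + ratios[mid])


def remove_ghosts(ref, tgt, tol=0.1, low=0.05, high=0.95):
    """Replace target pixels that disagree with the global scale by their expected values."""
    scale = estimate_scale(ref, tgt, low, high)
    corrected = []
    for r, t in zip(ref, tgt):
        if low < r < high:
            expected = min(1.0, scale * r)   # value predicted from the reference exposure
            # A large deviation from the prediction marks the pixel as moving.
            corrected.append(expected if abs(t - expected) > tol else t)
        else:
            corrected.append(t)              # reference pixel unreliable: keep target value
    return corrected, scale
```

For example, with `ref = [0.1, 0.2, 0.3, 0.4, 0.5]` and `tgt = [0.2, 0.4, 0.6, 0.3, 1.0]`, the estimated scale is 2.0. The fourth pixel is flagged as moving and replaced with 0.8.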

Author(s): Seong-O Shim, University of Jeddah

Ishtiaq Rasool Khan, University of Jeddah

Speaker(s): Seong-O Shim, Faculty of Computing and IT, University of Jeddah, Saudi Arabia

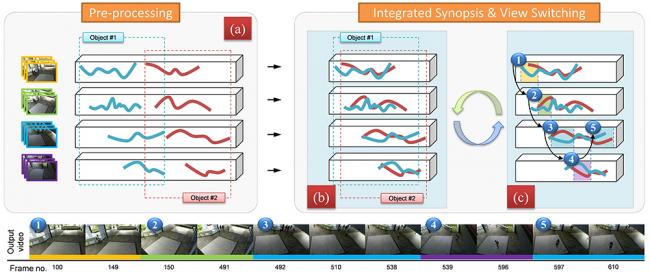

Multi-video Object Synopsis Integrating Optimal View Switching

Summary: In this paper, we propose to integrate multi-video object synopsis with an optimal multi-view switching display method. We group occurrences of the same object across different cameras and process them jointly. On this basis, we propose a unified framework that optimizes object-based synopsis variables and multi-view switching variables simultaneously. The unified formulation encodes activity, occlusion, chronological order, and view-switching costs. We develop an alternating optimization scheme composed of graph cuts and dynamic programming to minimize the combined costs efficiently. This integrated framework gives users a more convenient way to browse object-based synopsis results and further reduces occlusion artifacts between synopsized objects. Experiments demonstrate the effectiveness and user convenience of our multi-video synopsis method.
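The view-switching part of such a formulation can be pictured as a shortest-path problem over time, which is where dynamic programming fits. The sketch below is a Viterbi-style illustration under assumptions and does not reproduce the paper's cost terms. It assumes the synopsis variables are already fixed, so the graph-cut half of the alternating scheme is omitted. `cost[t][v]` is a hypothetical per-frame cost of showing view `v` at time `t`, such as an occlusion score, and every view switch pays a constant `switch_cost`. The function name `best_view_sequence` is hypothetical.

```python
def best_view_sequence(cost, switch_cost):
    """Choose one view per frame, minimising per-frame costs plus a penalty per switch.

    cost[t][v]: hypothetical cost of displaying view v at frame t.
    Returns (list of view indices, total cost).
    """
    n_views = len(cost[0])
    acc = list(cost[0])   # best total cost of any sequence ending in each view at frame 0
    back = []             # back[t-1][v]: best previous view when in view v at frame t
    for t in range(1, len(cost)):
        new_acc, ptr = [], []
        for v in range(n_views):
            # Either stay in v (no penalty) or arrive from another view (pay switch_cost).
            best_u = min(range(n_views),
                         key=lambda u: acc[u] + (0 if u == v else switch_cost))
            step = 0 if best_u == v else switch_cost
            new_acc.append(acc[best_u] + step + cost[t][v])
            ptr.append(best_u)
        acc = new_acc
        back.append(ptr)
    # Pick the cheapest final view, then follow back-pointers to recover the path.
    v = min(range(n_views), key=lambda u: acc[u])
    total = acc[v]
    path = [v]
    for ptr in reversed(back):
        v = ptr[v]
        path.append(v)
    path.reverse()
    return path, total
```

With `cost = [[0, 5], [0, 5], [5, 0], [5, 0]]` and `switch_cost = 1`, the cheapest schedule stays on view 0 for two frames and then switches once to view 1, for a total cost of 1. A larger `switch_cost` trades per-frame quality for fewer jarring switches.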

Author(s): Zhensong Zhang, The Chinese University of Hong Kong, CUHK

Yongwei Nie, South China University of Technology

Hanqiu Sun, The Chinese University of Hong Kong

Qiuxia Lai, The Chinese University of Hong Kong

Guiqing Li, South China University of Technology

Speaker(s): Zhensong Zhang, The Chinese University of Hong Kong

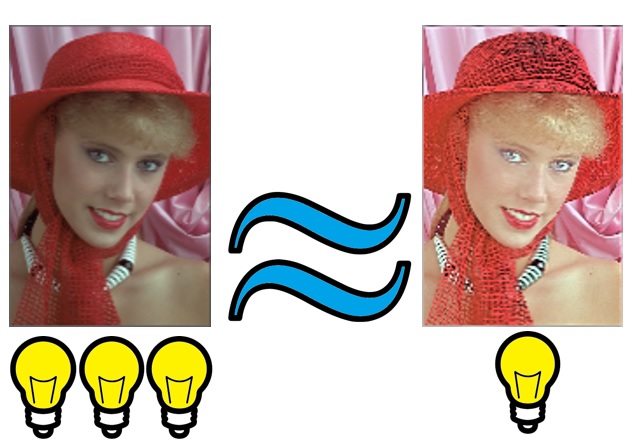

Visual Enhancement via Reinforcement Parameter Learning for Low Backlighted Display

Summary: In this technical brief, we propose a system that enhances images shown on a display with reduced backlight, in order to save electrical power on mobile devices. In addition to brightness compensation, we take human visual perception, such as just-noticeable difference (JND) and saliency, into account. Integrating these characteristics into one system requires adjusting several parameters, so we introduce reinforcement parameter learning into the system. By taking actions and analyzing rewards, we train these parameters offline and then apply them to test images online. Experimental results show that our visual enhancement via reinforcement parameter learning outperforms existing systems.
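The brightness-compensation step can be illustrated with a simple display model. This sketch assumes, hypothetically, that perceived luminance is proportional to `backlight * (v / vmax) ** gamma` with `gamma = 2.2`. Under that model, keeping luminance constant when the backlight fraction drops to `b` requires scaling pixel values by `b ** (-1 / gamma)` and clipping at `vmax`. This is only a generic baseline. It does not model the JND- and saliency-aware enhancement or the reinforcement-learned parameters described in the brief, and the function name `compensate` is hypothetical.

```python
def compensate(pixels, backlight, gamma=2.2, vmax=255):
    """Scale pixel values to offset a dimmed backlight under a simple gamma display model.

    Assumed model: luminance ~ backlight * (v / vmax) ** gamma. Solving
    b * (v' / vmax) ** gamma == (v / vmax) ** gamma gives v' = v * b ** (-1 / gamma).
    """
    if not 0 < backlight <= 1:
        raise ValueError("backlight must be a fraction in (0, 1]")
    gain = backlight ** (-1.0 / gamma)
    # Values pushed above vmax are clipped, which is where highlight detail is lost.
    return [min(vmax, round(v * gain)) for v in pixels]
```

At half backlight, the gain is roughly 1.37, so a mid-grey value of 100 becomes about 137 while 255 stays clipped at 255. That clipping is why perception-aware adjustments are needed on top of plain compensation.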

Author(s): Chih-Tsung Shen, Industrial Technology Research Institute

Ching-Hao Lai, Industrial Technology Research Institute

Yi-Ping Hung, Department of Computer Science and Information Engineering, National Taiwan University

Soo-Chang Pei, National Taiwan University

Speaker(s): Chih-Tsung Shen, Industrial Technology Research Institute

GPU Based Techniques for Deep Image Merging

Summary: We explore GPU-based merging of deep images using different memory layouts for fragment lists: linked lists, linearised arrays, and a new interleaved arrays approach. We also report performance improvements for merging techniques that leverage the GPU memory hierarchy and layout by processing blocks of fragment data in fast registers. These techniques are based on similar ones used to improve the performance of transparency rendering. Our results show a 2-5X improvement from combining these techniques.
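To make the linearised-array layout concrete, the sketch below packs per-pixel fragment lists into one flat array plus a prefix-sum offset table. It then merges two deep images pixel by pixel with an ordered merge by depth. This is a CPU-side Python illustration under stated assumptions. Fragments are `(depth, value)` tuples, and both images have the same pixel count. The function names `linearise` and `merge_deep` are hypothetical. The sketch does not model the GPU linked-list or interleaved layouts, or the register-blocking optimisation reported in the brief.

```python
from heapq import merge


def linearise(lists):
    """Pack per-pixel fragment lists into (offsets, flat); offsets has len(lists) + 1 entries.

    Pixel p owns flat[offsets[p]:offsets[p + 1]], sorted front to back by depth.
    """
    offsets, flat = [0], []
    for frags in lists:
        flat.extend(sorted(frags))   # (depth, value) tuples sort by depth first
        offsets.append(len(flat))
    return offsets, flat


def merge_deep(a, b):
    """Merge two linearised deep images pixel by pixel, preserving depth order."""
    (oa, fa), (ob, fb) = a, b
    if len(oa) != len(ob):
        raise ValueError("deep images must have the same number of pixels")
    offsets, flat = [0], []
    for p in range(len(oa) - 1):
        # Both per-pixel slices are already sorted, so a linear-time merge suffices.
        flat.extend(merge(fa[oa[p]:oa[p + 1]], fb[ob[p]:ob[p + 1]]))
        offsets.append(len(flat))
    return offsets, flat
```

Because each pixel's slice is contiguous and already sorted, the merge reads memory sequentially. Contiguous, sequential access is the same property that linearised layouts are meant to offer over pointer-chasing linked lists on the GPU.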

Author(s): Jesse Archer, RMIT University

Geoff Leach, RMIT University

Ron van Schyndel, RMIT University

Speaker(s): Jesse Archer, RMIT University