siggraph

Textures and Color

Full Conference Pass

Full Conference 1-Day Pass

Date/Time: 30 November 2017, 02:15pm - 04:00pm

Venue: Amber 1

Location: Bangkok Int'l Trade & Exhibition Centre (BITEC)

Session Chair: Kalyan Sunkavalli, Adobe, USA

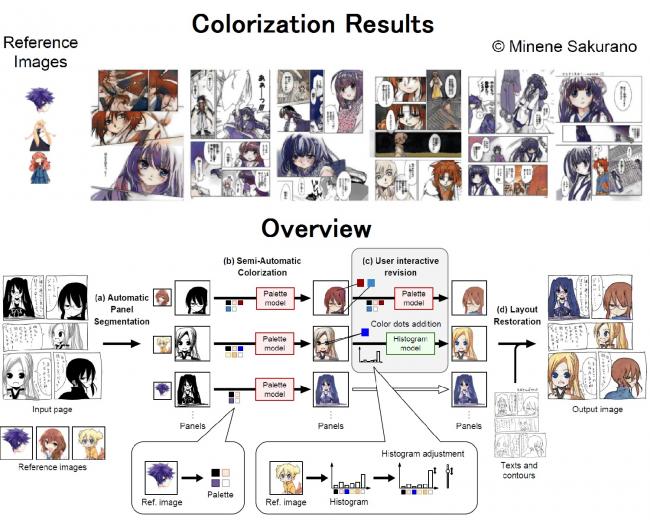

Comicolorization: Semi-Automatic Manga Colorization

Summary: We developed "Comicolorization", a semi-automatic colorization system for manga images. Given a monochrome manga and reference images as inputs, our system generates a plausible color version of the manga. This is the first work to address the colorization of an entire manga title (a set of manga pages). Our method colorizes a whole page (not just a single panel) semi-automatically, giving the same character the same colors across multiple panels. To colorize a target character with the colors of a reference image, we extract a color feature from the reference and feed it to the colorization network. Our approach employs an adversarial loss to strengthen the effect of the color features. Optionally, users can revise the colorization result interactively with our tool. By feeding the color features to our deep colorization network, we colorize the entire manga using the desired colors for each panel.
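The summary does not say what the color feature is. As a hedged illustration only, the sketch below assumes it is a normalised joint RGB histogram of the reference image, and shows one common way (also an assumption here) of feeding such a global feature to a convolutional network: tiling it over every spatial position and concatenating it to an intermediate feature map. `color_histogram_feature`, `condition_on_color`, and the `bins` parameter are hypothetical names, not the authors' code.

```python
import numpy as np

def color_histogram_feature(rgb, bins=4):
    """Normalised joint RGB histogram of an (H, W, 3) uint8 image, flattened to bins**3 values.

    Assumption: a histogram is used here as a stand-in for the paper's color feature.
    """
    q = (rgb.astype(np.int64) * bins) // 256          # per-channel bin index in [0, bins)
    idx = (q[..., 0] * bins + q[..., 1]) * bins + q[..., 2]
    hist = np.bincount(idx.ravel(), minlength=bins ** 3).astype(np.float64)
    return hist / hist.sum()

def condition_on_color(feature_map, color_feat):
    """Tile a global color feature over every position of an (H, W, C) map and concatenate.

    Assumption: channel-wise concatenation is one possible way to inject the feature.
    """
    h, w, _ = feature_map.shape
    tiled = np.broadcast_to(color_feat, (h, w, color_feat.size))
    return np.concatenate([feature_map, tiled], axis=-1)
```

Because the feature is global, the same color cue reaches every panel and every spatial location, which is consistent with the goal of keeping a character's colors stable across panels.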

Author(s): Chie Furusawa, Dwango Co., Ltd.

Kazuyuki Hiroshiba, Dwango Co., Ltd.

Keisuke Ogaki, Dwango Co., Ltd.

Yuri Odagiri, Dwango Co., Ltd.

Speaker(s): Chie Furusawa, DWANGO Co., Ltd.

Kazuyuki Hiroshiba, DWANGO Co., Ltd.

Keisuke Ogaki, DWANGO Co., Ltd.

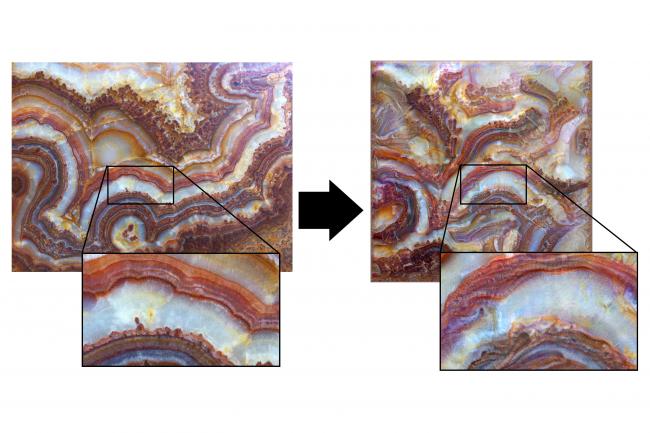

High-Resolution Multi-Scale Neural Texture Synthesis

Summary: CNN-based texture synthesis has shown much promise since Gatys et al. published "A Neural Algorithm of Artistic Style" in 2015. However, these techniques fail on high-resolution input images, because texture features are then large relative to the receptive fields of the neurons in the CNN. By using a Gaussian pyramid image representation and computing feature covariances at each image scale, we generate significantly higher-quality results.
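As a rough illustration of the multi-scale idea, the sketch below builds a Gaussian pyramid with a 5-tap binomial blur and compares Gram matrices (uncentred feature covariances, as in Gatys et al.) level by level. It is a sketch under stated assumptions, not the author's implementation: `feature_fn` is a hypothetical stand-in for CNN activations (it defaults to raw pixel channels), and `gaussian_pyramid`, `gram`, and `multiscale_style_loss` are illustrative names.

```python
import numpy as np

# 5-tap binomial kernel: a standard small approximation of a Gaussian.
KERNEL = np.array([1.0, 4.0, 6.0, 4.0, 1.0]) / 16.0

def blur(img):
    """Separable binomial blur of an (H, W, C) array with edge padding."""
    pad = np.pad(img, ((2, 2), (2, 2), (0, 0)), mode="edge")
    conv = lambda v: np.convolve(v, KERNEL, mode="valid")
    tmp = np.apply_along_axis(conv, 0, pad)   # columns: (H+4, W+4, C) -> (H, W+4, C)
    return np.apply_along_axis(conv, 1, tmp)  # rows:    (H, W+4, C) -> (H, W, C)

def gaussian_pyramid(img, levels):
    """Blur-then-subsample pyramid; each level halves height and width."""
    pyr = [img]
    for _ in range(levels - 1):
        img = blur(img)[::2, ::2]
        pyr.append(img)
    return pyr

def gram(features):
    """Gram matrix of an (H, W, C) feature map, normalised by the number of positions."""
    h, w, c = features.shape
    f = features.reshape(h * w, c)
    return f.T @ f / (h * w)

def multiscale_style_loss(synth, exemplar, levels=3, feature_fn=lambda x: x):
    """Sum of squared Gram-matrix differences over all pyramid levels.

    Assumption: feature_fn stands in for a CNN feature extractor (e.g. VGG layers).
    """
    loss = 0.0
    for s, e in zip(gaussian_pyramid(synth, levels), gaussian_pyramid(exemplar, levels)):
        loss += float(np.sum((gram(feature_fn(s)) - gram(feature_fn(e))) ** 2))
    return loss
```

In a synthesis loop this loss would be minimised with respect to the synthesised image. At coarse levels, a texture feature that is large at full resolution shrinks enough to fit inside a single receptive field, which is the motivation the summary gives for the pyramid.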

Author(s): Xavier Snelgrove, Independent Researcher

Speaker(s): Xavier Snelgrove, Generative Poetics

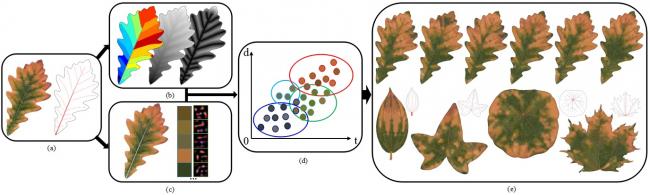

Structure-guided Texturing with Embedded Randomness

Summary: Many natural phenomena, e.g., leaves, petals, and butterfly wings, consist of similar individual elements whose textures are guided by corresponding global structures. The diversity of these elements directly affects the realism of virtual scenes involving such phenomena. We propose an approach that generates diverse leaf instances from a single input example by fully uncovering its embedded randomness. We introduce a global structure-guided texture representation that describes leaf texture variation with respect to the leaf venation structure, using simple biological knowledge. We further design an intuitive synthesis algorithm to generate diverse leaf instances from a single leaf example. Experiments show that our synthesis algorithm can produce diverse leaf instances with plausible random variations in leaf venation, for species that are either the same as or different from the example.

Author(s): Yinling Qian, The Chinese University of Hong Kong

Yanyun Chen, Institute of Software, Chinese Academy of Sciences

Hanqiu Sun, The Chinese University of Hong Kong

Jian Shi, Institute of Software, Chinese Academy of Sciences

Lei Ma, Institute of Software, Chinese Academy of Sciences

Speaker(s): Hanqiu Sun, The Chinese University of Hong Kong

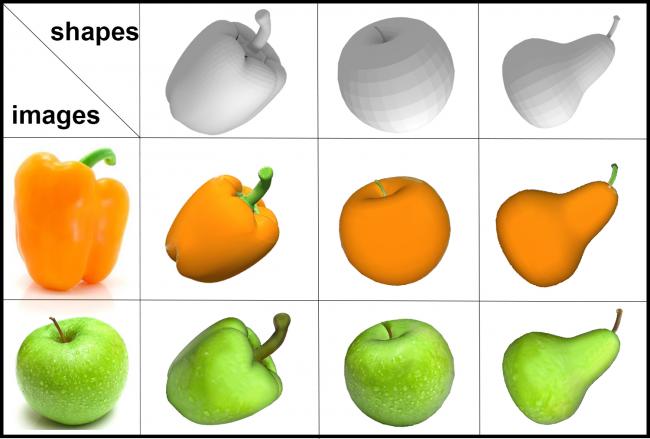

Auto-colorization of 3D models from Images

Summary: Color is crucial for realism and good visual perception. However, the majority of models in existing 3D repositories are colorless. In this paper, we propose an automatic scheme for 3D model colorization that takes advantage of the wide availability of realistic 2D images with similar appearance. Specifically, we establish a region-based correspondence between a 3D model and its 2D image counterpart. We then employ a PatchMatch-based approach to synthesize the texture images. Next, we quilt the texture gaps via multi-view coverage. Finally, the texture coordinates are obtained by projecting back onto the 3D model. Our method yields satisfactory results in most situations, even when the 3D model and the given image are inconsistent. In the results, we present a cross-over experiment that validates the effectiveness and generality of our method.
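The summary names a PatchMatch-based synthesis step. For readers unfamiliar with PatchMatch (Barnes et al., 2009), the sketch below shows its core: a randomised search for an approximate nearest-neighbour field (NNF) from patches of image A to patches of image B, alternating propagation from already-visited neighbours with random search in a shrinking window. It assumes single-channel float arrays and a sum-of-squared-differences patch distance; it is a generic illustration, not the authors' texture-synthesis pipeline, and the function names are hypothetical.

```python
import numpy as np

def patch_dist(A, B, ay, ax, by, bx, p):
    """Sum of squared differences between the p x p patches at (ay, ax) in A and (by, bx) in B."""
    d = A[ay:ay + p, ax:ax + p] - B[by:by + p, bx:bx + p]
    return float(np.sum(d * d))

def patchmatch(A, B, p=3, iters=4, seed=0):
    """Approximate NNF from patches of A to patches of B.

    Returns nnf (hA, wA, 2) with the top-left (y, x) of each matched patch in B,
    and the per-patch matching cost.
    """
    rng = np.random.default_rng(seed)
    hA, wA = A.shape[0] - p + 1, A.shape[1] - p + 1
    hB, wB = B.shape[0] - p + 1, B.shape[1] - p + 1
    # Random initialisation of the field.
    nnf = np.stack([rng.integers(0, hB, (hA, wA)), rng.integers(0, wB, (hA, wA))], axis=-1)
    cost = np.array([[patch_dist(A, B, y, x, *nnf[y, x], p) for x in range(wA)]
                     for y in range(hA)])

    def try_match(y, x, by, bx):
        # Accept a candidate only if it is in bounds and lowers the patch distance.
        if 0 <= by < hB and 0 <= bx < wB:
            d = patch_dist(A, B, y, x, by, bx, p)
            if d < cost[y, x]:
                cost[y, x] = d
                nnf[y, x] = (by, bx)

    for it in range(iters):
        # Alternate scan order so good matches propagate in both directions.
        step = 1 if it % 2 == 0 else -1
        ys = range(hA) if step == 1 else range(hA - 1, -1, -1)
        xs = range(wA) if step == 1 else range(wA - 1, -1, -1)
        for y in ys:
            for x in xs:
                # Propagation: try the shifted match of the previously visited neighbours.
                if 0 <= y - step < hA:
                    by, bx = nnf[y - step, x]
                    try_match(y, x, by + step, bx)
                if 0 <= x - step < wA:
                    by, bx = nnf[y, x - step]
                    try_match(y, x, by, bx + step)
                # Random search around the current match with a halving radius.
                radius = max(hB, wB)
                while radius >= 1:
                    by, bx = nnf[y, x]
                    try_match(y, x,
                              by + int(rng.integers(-radius, radius + 1)),
                              bx + int(rng.integers(-radius, radius + 1)))
                    radius //= 2
    return nnf, cost
```

Because a candidate is accepted only when it lowers the patch distance, no patch's cost ever increases across iterations. A texture-synthesis stage would then presumably copy or vote colors from the matched patches of B.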

Author(s): Juncheng Liu, Peking University

Zhouhui Lian, Peking University

Jianguo Xiao, Peking University

Speaker(s): Yue Jiang, Peking University